
So far in our blog post series on cloud log extraction, we have looked at extracting logs from AWS, Google Cloud, and Google Workspace. In the fourth installment of this series, we’ll be looking at how we can view and extract logs from Microsoft Azure.

In Azure, there are several sources of logs providing various information about your Azure tenant and its resources. Tenant logs (sign-in and audit logs) and Subscription logs (activity logs) are enabled by default. Depending on the configuration, these logs can be accessed via the following methods: the Azure Portal, a Log Analytics Workspace, a storage account, Event Hub, and the Microsoft Graph API.

Resource, Operating System, and Application logs also exist but are off by default and will not be discussed in this blog post, although many of the concepts discussed also apply to the export methods for these log types. Furthermore, configuring the log sources’ export settings is outside the scope of this blog post. We recommend reviewing Microsoft’s documentation on logging and diagnostic settings for more information, or checking out SANS FOR509: Enterprise Cloud Forensics and Incident Response, in which we discuss this topic in detail. This blog post will focus on how these various access methods can be leveraged once the configuration is in place.

The Azure Portal provides a quick and easy method of reviewing the Tenant and Subscription logs. Unfortunately, it will only provide logs from the last 30 days for Tenant logs (for enterprise customers) and the last 90 days for Subscription logs, so depending on your use case it may provide limited value. We’ll review how to view logs from both of these sources in the portal. The portal can be accessed via the following link: https://portal.azure.com/.

Starting with Tenant logs, within the Azure Portal, search for Azure Active Directory and go to that service. In the navigation menu on the left, under Monitoring, there are two different log types that can be accessed from this view: Sign-in Logs and Audit Logs.

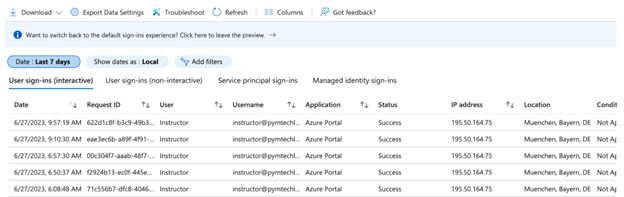

If accessing the sign-in logs, you’ll see a view similar to the one below:

From here, you can apply filters based on any of the available fields. Each of the tabs underneath the filters provides access to a list of sign-ins of that type. Clicking on any of the rows will open a pane on the right containing all the details recorded for the event. If you wish to review the logs outside of the portal, you can use the Download option at the top to export up to 100,000 rows per sign-in type in CSV or JSON format. Alternatively, you can use Export Data Settings to configure the logs to be forwarded automatically to a Log Analytics Workspace, a storage account, or Event Hub.

In a similar fashion, accessing the Audit logs will provide a table, filters, and download and export options, as shown below:

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blta86ed2daef913872/64a6f04bbd5d8aeb6372169e/Picture2.png

The only difference here is that the download option will allow up to 250,000 events instead of 100,000 events.

The second log source available in the portal for viewing is the Subscription logs (more frequently referred to as activity logs). To view these logs, search for Activity log and access the service. You’ll once again be provided with a formatted table with filters available to search through the last 90 days of logs. Activity logs can only be downloaded in CSV format, not JSON.

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt8c6d9db7a829cb52/64a6f04b4d81431f388ff344/Picture3.png

A difference in how these events are represented versus the tenant logs is that each operation is made up of a series of events, as can be seen when expanding one of the operations.

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/bltab9510115f95c845/64a6f04b9b4571a52354d3ae/Picture4.png

This creates a challenge when trying to review the details of a large number of events. If desired, this data can be queried programmatically via the Get-AzLog PowerShell cmdlet or the az monitor activity-log list CLI command.
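As a rough sketch of the CLI route, the shell function below exports activity log events for a time window to a JSON file. It assumes the Azure CLI is installed and that `az login` has already been run against the subscription of interest; the function name `export_activity_log`, the 100,000-event cap, and the output path are illustrative choices rather than part of the original workflow.

```shell
# Hypothetical helper: export Subscription (activity) logs for a time window.
# Assumes the Azure CLI is installed and logged in to the target subscription.
export_activity_log() {
  start=$1     # ISO 8601 start time, e.g. 2023-07-01T00:00:00Z
  end=$2       # ISO 8601 end time, e.g. 2023-07-02T00:00:00Z
  out_file=$3  # where the JSON array of events is written
  # The CLI returns only a small number of events unless --max-events is
  # raised, so an explicit (illustrative) cap is set for bulk exports.
  az monitor activity-log list \
    --start-time "$start" \
    --end-time "$end" \
    --max-events 100000 \
    --output json > "$out_file"
}
```

For example, `export_activity_log 2023-07-01T00:00:00Z 2023-07-02T00:00:00Z activity.json` would write one day of events to `activity.json`, still limited to the 90 days of history the platform retains.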

The next few methods discussed in this post are only relevant if configured via diagnostic settings in the Export Data Settings or Export Activity Logs options on the aforementioned views. We’ll start by looking at the Log Analytics Workspace.

One benefit of the Log Analytics Workspace is that we can send all of our logs, including resource, operating system, and application logs (if configured), to the same workspace, creating a central location for log access. To access your workspace, go to the same services mentioned above for Tenant or Subscription logs, but now select Log Analytics from the Monitoring section of the navigation pane (alternatively, you can search for Log Analytics Workspace in the Azure search bar). This will open up a view to run queries against the logs forwarded to that workspace, as shown in the screenshot below.

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt22787dc6c0d85098/64a6f04b05df4316f55aeab4/Picture5.png

On the left, under LogManagement, we can see the logs available for querying based on the diagnostic setting configurations. In this case, we have access to audit logs, sign-in logs, and activity logs, among others. At the top of the page, we can execute a query in Kusto Query Language (KQL). If you need ideas for queries to run, select the Queries tab at the top of the left pane, choose a pre-built query to populate the search field, and then select Run.
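The same kind of KQL query can also be run outside the portal. The sketch below assumes the Azure CLI is installed and logged in (older CLI versions may need the log-analytics extension for this command), that sign-in logs are forwarded to the workspace's SigninLogs table, and that you pass the workspace ID (a GUID) rather than the workspace name. The function name and the query itself are illustrative.

```shell
# Hypothetical helper: run a KQL query against a Log Analytics workspace.
# Assumes sign-in logs are forwarded to the SigninLogs table of the workspace.
query_failed_signins() {
  workspace_id=$1  # workspace (customer) ID, a GUID
  # In SigninLogs, ResultType "0" means success; other values indicate a
  # failed or interrupted sign-in.
  kql='SigninLogs
| where ResultType != "0"
| summarize FailedSignIns = count() by UserPrincipalName
| order by FailedSignIns desc'
  az monitor log-analytics query \
    --workspace "$workspace_id" \
    --analytics-query "$kql" \
    --timespan P7D \
    --output json
}
```

The `--timespan` value is an ISO 8601 duration, so `P7D` limits the query to the last seven days; any query that works in the portal's query editor can be substituted for `kql`.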

Another export destination available when configuring diagnostic settings is a storage account. This method is well suited to retaining logs for an extended period and exporting them to a tool of your choice as needed. You’ll be charged for the data stored, but you can specify a retention period that meets your needs and budget. Once the logs are in the storage account, you can access them via the API or via Azure Storage Explorer.
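As an alternative to Azure Storage Explorer, a container can be pulled down from the command line. This is a minimal sketch assuming the Azure CLI is logged in with a data-plane role on the storage account (for example, Storage Blob Data Reader); the container name depends on the log type you exported (sign-in logs commonly land in `insights-logs-signinlogs`), and the function name is hypothetical.

```shell
# Hypothetical helper: download one diagnostic-settings container locally.
# Assumes an Azure CLI login with read access to the storage account's blobs.
download_log_container() {
  account=$1    # storage account name
  container=$2  # e.g. insights-logs-signinlogs (depends on the log type)
  dest=$3       # local directory; blob paths (y=/m=/d=/h=...) are recreated
  mkdir -p "$dest" || return 1
  az storage blob download-batch \
    --account-name "$account" \
    --source "$container" \
    --destination "$dest" \
    --auth-mode login
}
```

Using `--auth-mode login` relies on your Azure AD identity rather than an account key, which avoids handling storage keys during an investigation.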

Inside the storage account, a JSON file (PT1H.json) is created for each hour of activity, in a directory structure that indicates the date and hour the log relates to. If you know the exact hour in which the activity you are looking for occurred, you can go straight to the associated file and review it. However, if you are reviewing activity across an extended time frame, this is not as simple. To combine these logs into a single file, download them locally (for example, with Azure Storage Explorer) and run the following command:

find <path to logs> -type f -name PT1H.json -exec cat {} + | tee <JSON filename>

This process will need to be repeated for the directory of each sign-in log type (interactive, non-interactive, service principal, and so on), since each type is stored separately.
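A slightly more robust variant of the command above is sketched below. It assumes the containers have been downloaded under one local root directory, one subdirectory per log type, and that the y=/m=/d=/h= path segments are zero-padded so that a plain sort is also chronological; the function name `merge_pt1h` and the example layout are hypothetical. Unlike `find -exec cat {} +`, which concatenates files in whatever order `find` visits them, this sorts the paths first and writes one merged file per log type, which also takes care of the repetition across sign-in log types.

```shell
# Hypothetical helper: merge hourly PT1H.json blobs into one file per log type.
# Assumed layout under $1, one downloaded container per subdirectory, e.g.
#   logs/insights-logs-signinlogs/tenantId=.../y=2023/m=07/d=06/h=14/m=00/PT1H.json
merge_pt1h() {
  root=$1     # directory containing one subdirectory per log type
  out_dir=$2  # directory that receives one <log type>.json file each
  mkdir -p "$out_dir" || return 1
  for log_dir in "$root"/*/; do
    [ -d "$log_dir" ] || continue  # the glob matched nothing
    name=$(basename "$log_dir")
    # Sort paths so zero-padded date/hour segments come out in time order,
    # then append each hourly file. Paths containing newlines are not supported.
    find "$log_dir" -type f -name PT1H.json | LC_ALL=C sort |
      while IFS= read -r f; do
        cat "$f"
      done > "$out_dir/$name.json"
  done
}
```

For example, `merge_pt1h ./logs ./merged` would produce files such as `merged/insights-logs-signinlogs.json`, each ordered from the earliest hour to the latest.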

We’re not going to go into depth on the usage of Event Hub in this blog post, but we will briefly mention it as an export option. Event Hub is a service that provides real-time ingestion capable of processing significant amounts of data. Once data is in Event Hub, it can be accessed and read in a couple of ways. First, it can be integrated with supported third-party SIEMs, such as ArcSight, Splunk, or IBM QRadar. Second, its API can be used to develop custom tooling. To read more about leveraging Event Hub, view Microsoft’s documentation here: https://learn.microsoft.com/en-us/azure/active-directory/reports-monitoring/tutorial-azure-monitor-stream-logs-to-event-hub.

The final method of retrieving logs worth mentioning is the Graph API. The Graph API has an endpoint for Azure AD activity reports that will provide access to the Tenant logs, both sign-in and audit logs. The documentation for this API endpoint can be found here: https://learn.microsoft.com/en-us/graph/api/resources/azure-ad-auditlog-overview?view=graph-rest-1.0

Unlike the aforementioned methods, this method is not configured or accessed directly via the portal and will require the user to develop custom code using one of the supported languages to perform the export as desired. This could be leveraged to simply download the logs to a file locally or to build an integration for a SIEM.
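As a minimal sketch of such custom code, the shell functions below page through the Graph sign-in endpoint (`/v1.0/auditLogs/signIns`; `/v1.0/auditLogs/directoryAudits` returns the audit logs) using `az rest`, which obtains a Graph token for the signed-in Azure CLI account. It assumes that account has the permissions needed to read audit and sign-in logs (for example, AuditLog.Read.All); the function names are hypothetical, and `sed` is used to read `@odata.nextLink` only to avoid a jq dependency, so treat it as illustrative rather than robust JSON parsing.

```shell
# Hypothetical helper: print the @odata.nextLink of a saved Graph page, if any.
# Assumes pretty-printed JSON and a link containing no double quotes.
next_link() {
  sed -n 's/.*"@odata\.nextLink": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Hypothetical helper: save every page of a Graph collection as page_N.json.
fetch_graph_pages() {
  url=$1      # e.g. https://graph.microsoft.com/v1.0/auditLogs/signIns
  out_dir=$2  # one JSON file is written per response page
  mkdir -p "$out_dir" || return 1
  n=1
  while [ -n "$url" ]; do
    page="$out_dir/page_$n.json"
    az rest --method get --url "$url" --output json > "$page" || return 1
    # Graph returns @odata.nextLink until the last page has been served.
    url=$(next_link "$page")
    n=$((n + 1))
  done
}
```

Following `@odata.nextLink` rather than building page URLs by hand matters here, because Graph returns large log collections in pages and the link carries the continuation token for the next one.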

Invictus IR and FOR509 course instructor Korstiaan Stam have released an open-source tool called Microsoft Extractor Suite. As described in its documentation, this tool “is a fully-featured, actively-maintained, Powershell tool designed to streamline the process of collecting all necessary data and information from various sources within Microsoft.” It is able to extract the Azure AD sign-in logs and audit logs discussed in this article. It also has capabilities to extract the unified audit log (UAL), which we’ll discuss in the next blog post in this series. For more information on this project, check out the following links:

This tool makes extracting logs much easier for the log sources it supports, and it is definitely worth considering for integration into your processes.

In this blog post, we discussed the various methods for accessing and exporting Tenant and Subscription logs from Microsoft Azure. The quickest and simplest method, most useful for reviewing logs from a recent time frame, is to access them directly via the Azure Portal. For longer retention, diagnostic settings should be configured to export the logs to a Log Analytics workspace, Event Hub, or a storage account. Each of these destinations has strengths and weaknesses, so the right choice depends primarily on your use case and how you wish to use the data. Finally, if you wish to develop custom tooling, the Tenant logs are accessible via the Graph API. To close off this blog series, the next post will look at how we can export the Unified Audit Log (UAL) from Microsoft 365.


Megan is a Senior Security Engineer at Datadog, SANS DFIR faculty, and co-author of FOR509. She holds two master’s degrees, serves as CFO of Mental Health Hackers, and is a strong advocate for hands-on cloud forensics training and mental wellness.

Read more about Megan Roddie-Fonseca