Recently I have been involved with the analysis of a number of rogue websites linked to a fast-flux network. Tracking websites is hard enough, but analysing the Flash code and other scripts has been a headache in the past. A number of tools can be used (mostly commercial, though some on the OWASP site are open). The issue is that few of these help to filter the content of the code.

In small cases, this is not an issue. Decompiling Flash when there are only one or two files to verify is easy. The problem comes when you have several hundred (or more) sites with a variety of code samples, some good, some bad, and no easy way to determine which is which.

In the past this has been a process of decompiling all of the samples (where a hash cannot be used to show that the files are the same) and reviewing the code one by one.
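The hashing step can be sketched in a few lines of Python. This is only an illustration of the idea, not part of any tool mentioned here: the function names and the assumption that the samples sit in one directory tree with a lowercase `.swf` extension are mine.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def group_by_hash(sample_dir: Path) -> dict[str, list[Path]]:
    """Map each digest to the .swf files under sample_dir that share it."""
    groups: dict[str, list[Path]] = defaultdict(list)
    # Assumption: samples use a lowercase .swf extension.
    for path in sorted(sample_dir.rglob("*.swf")):
        groups[sha256_of(path)].append(path)
    return dict(groups)
```

Only one file from each group then needs to be decompiled and reviewed; the rest are byte-for-byte copies.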

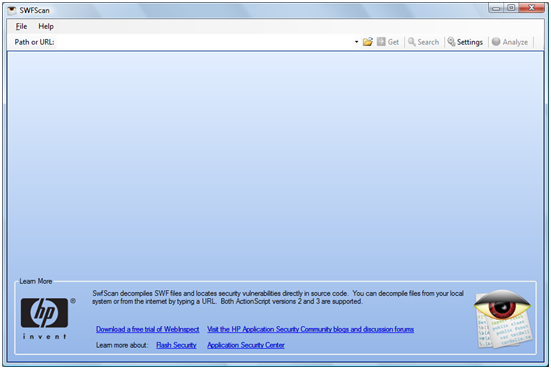

HP has helped with this process with its SwfScan tool.

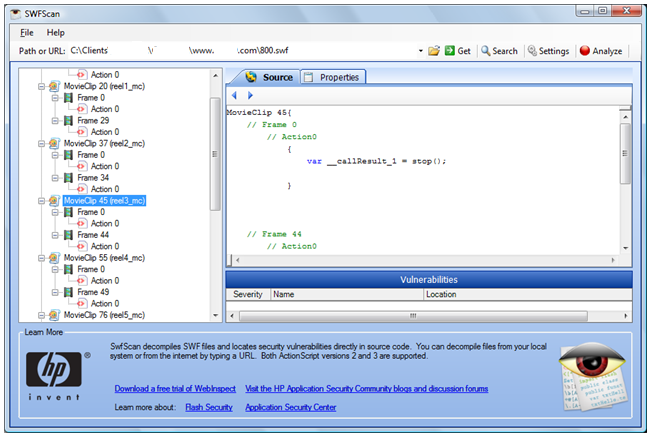

SwfScan not only decompiles the code, but also checks for a number of known Flash vulnerabilities and exploits. This makes it possible to reduce the volume of manual testing that is required.

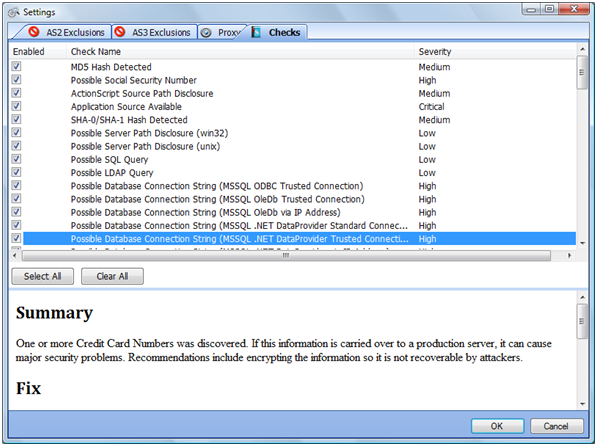

The checks are fairly extensive, covering most of the common Flash exploits. The program will work against a .SWF file saved to disk, or it will go out to the Internet and pull the file (given a URL).

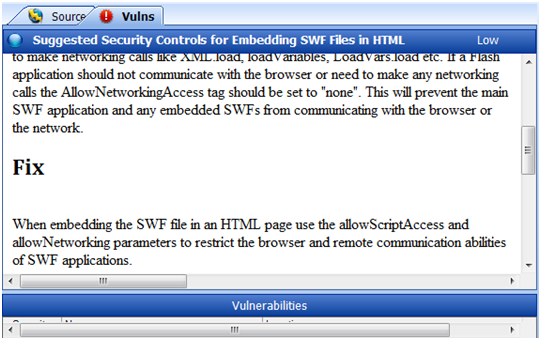

Any vulnerabilities found are displayed, and the code and any URLs can be exported for more detailed checking if an issue is noted.

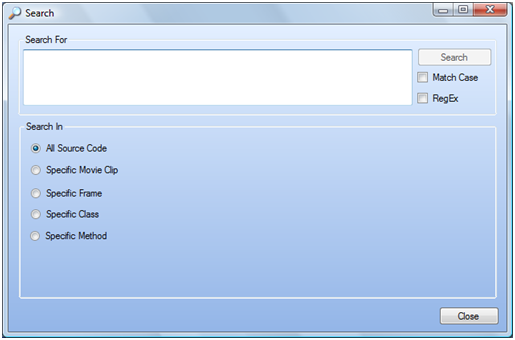

You can also issue your own RegEx searches.
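The same kind of search can be run outside the tool against the exported source. The sketch below is not SwfScan's own search feature; it assumes the decompiled ActionScript has been exported as plain text, and the two patterns are hypothetical examples of what one might look for.

```python
import re

# Hypothetical patterns; tune these to whatever you are hunting for.
PATTERNS = {
    # Hard-coded http/https URLs embedded in the source.
    "url": re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE),
    # ActionScript calls that reach out to the browser or another URL.
    "external_call": re.compile(
        r"\b(getURL|navigateToURL|ExternalInterface\.call)\s*\("
    ),
}


def search_source(source: str) -> dict[str, list[str]]:
    """Return every match of each named pattern in the exported source."""
    return {name: pattern.findall(source) for name, pattern in PATTERNS.items()}
```

Running `search_source` over each exported file gives a quick list of URLs and outbound calls to review before reading the code in full.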

If a vulnerability is noted in the SWF, a fix and links to explain the issue are displayed.

The only issue I have found is that there is no command-line functionality. This is not a huge issue, as Windows does allow for the scripting of macros. Doing this, you can take a list of sites and have the program run, scan and export before closing and moving to the next site. It is not the most efficient way to do things; an alternative is to capture the Flash files using a spider app.

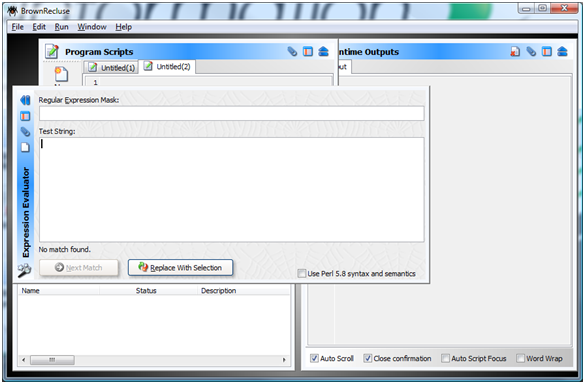

I use WGet and Brown Widow personally (as this is a programmable spider), but the choice is up to the individual.
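Whatever spider is used, a crawl can save error pages or other content under a `.swf` name, so it may be worth confirming that each captured file really is Flash before scanning it. A minimal sketch, assuming the files are already on disk (the function name is my own): an SWF file starts with a three-byte signature (`FWS` uncompressed, `CWS` zlib-compressed, `ZWS` LZMA-compressed), followed by a one-byte version and a four-byte little-endian file length.

```python
import struct
from pathlib import Path

# The three signatures an SWF file can start with.
SWF_SIGNATURES = {b"FWS": "uncompressed", b"CWS": "zlib", b"ZWS": "lzma"}


def swf_header(path: Path):
    """Return (compression, version, declared_length), or None if not SWF."""
    with path.open("rb") as handle:
        header = handle.read(8)
    if len(header) < 8 or header[:3] not in SWF_SIGNATURES:
        return None
    version = header[3]
    # Bytes 4-7 hold the uncompressed file length, little-endian.
    (length,) = struct.unpack("<I", header[4:8])
    return SWF_SIGNATURES[header[:3]], version, length
```

Files for which this returns `None` can be set aside rather than fed to the scanner.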

It is far easier to sort out the known good code and remove it first than to have to sift through huge volumes of unknowns.
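One way to do that sorting is to keep a set of digests for files already reviewed and found clean. This is a sketch under that assumption only; the function name and the idea of a SHA-256 allowlist are illustrative, not a feature of any tool named above.

```python
import hashlib
from pathlib import Path


def split_known_good(paths, known_good):
    """Split paths into (good, unknown) using a set of SHA-256 hex digests."""
    good, unknown = [], []
    for path in paths:
        digest = hashlib.sha256(Path(path).read_bytes()).hexdigest()
        # Anything not on the allowlist still needs a manual look.
        (good if digest in known_good else unknown).append(path)
    return good, unknown
```

Only the `unknown` list then goes forward for scanning and manual review.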

Craig Wright is a Director with Information Defense in Australia. He holds both the GSE-Malware and GSE-Compliance certifications from GIAC. He is a perpetual student with numerous postgraduate degrees, including an LLM specializing in international commercial law and ecommerce law, and is working on his 4th IT-focused Masters degree (Masters in System Development) from Charles Sturt University, where he is helping to launch a Masters degree in digital forensics. He is starting his second doctorate, a PhD on the quantification of information system risk, at CSU in April this year.