Beyond ChatGPT and using OpenAI API Q&A

OpenAI - OpenAI is an AI research and deployment company with the mission of ensuring that artificial general intelligence benefits all of humanity.

OpenAI API - The OpenAI API is a platform created by OpenAI that offers access to its latest models, along with guides for safety best practices.

ChatGPT - ChatGPT is an artificial intelligence (AI) language model developed by OpenAI that can generate human-like language and carry on conversations with users.

In an era of rapidly advancing technology, the security community is constantly seeking new and innovative ways to safeguard its organizations. The recent webcast, Beyond ChatGPT, Building Security Applications using OpenAI API, emphasized the need to shift focus from only using ChatGPT to building security applications with AI models. It also presented a sample application that uses OpenAI to search AWS logs. This blog post follows up on the session, delves deeper into the technical details of building the application, and answers questions raised during the webcast. If you missed the webcast live, you can register to watch it here.

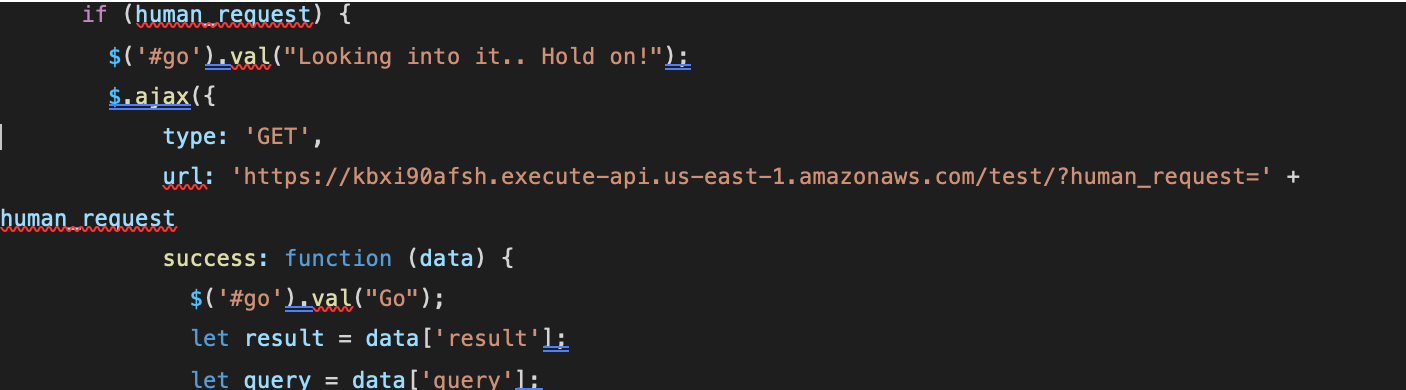

The application's user interface (hosted at https://cyberdojo.cloud/cloudwatch-bot.html) is built using HTML and JavaScript and is served from a public S3 bucket. The JavaScript code on the page communicates with a backend system that includes an API Gateway and a Lambda function (written in Python).

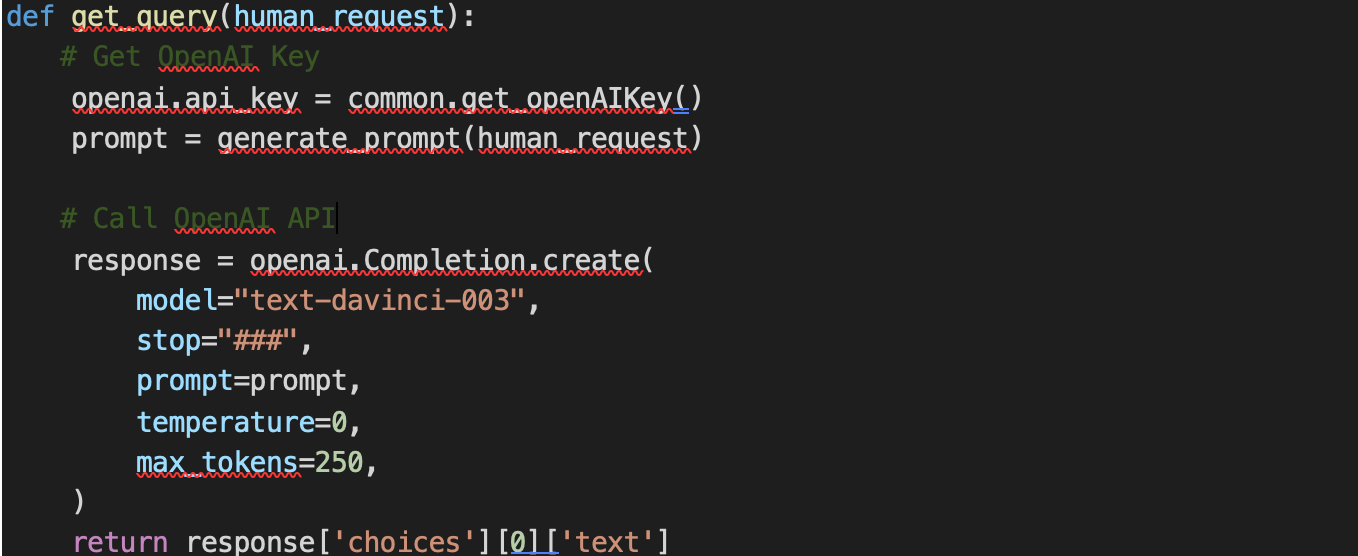

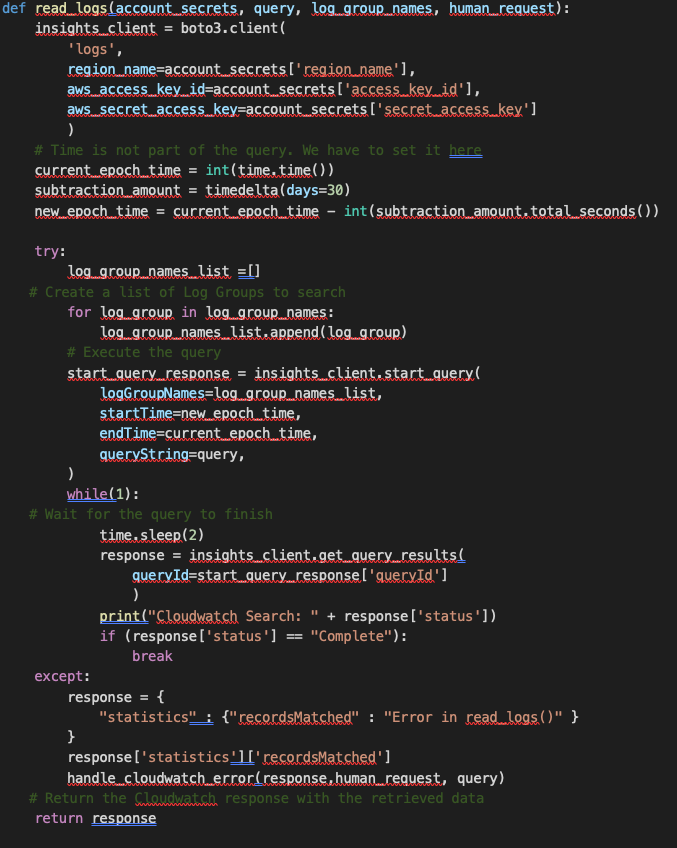

The Lambda function has the necessary permissions to access both OpenAI and CloudWatch. When a user makes a request through the UI, the API Gateway receives the request and triggers the Lambda function. The function uses OpenAI to translate the request into a CloudWatch query, then runs that query against CloudWatch logs to find the relevant information.
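The last step of that flow, running the generated query against CloudWatch, could look roughly like the sketch below. It is not the application's actual code: the function name and the polling limits are assumptions, and `logs_client` is expected to behave like `boto3.client("logs")`, whose `start_query` and `get_query_results` calls are used here.

```python
import time


def run_insights_query(logs_client, log_group_names, query_string,
                       start_time, end_time, poll_seconds=1.0, max_polls=30):
    """Run a CloudWatch Logs Insights query and return its result rows.

    start_time and end_time are epoch seconds. The function name and the
    polling limits are illustrative, not taken from the original project.
    """
    started = logs_client.start_query(
        logGroupNames=log_group_names,
        startTime=start_time,
        endTime=end_time,
        queryString=query_string,
    )
    query_id = started["queryId"]

    # Insights queries run asynchronously, so poll until the query finishes.
    for _ in range(max_polls):
        response = logs_client.get_query_results(queryId=query_id)
        status = response["status"]
        if status == "Complete":
            return response["results"]
        if status in ("Failed", "Cancelled", "Timeout"):
            raise RuntimeError(f"Insights query ended with status {status}")
        time.sleep(poll_seconds)

    raise TimeoutError("Insights query did not complete in time")
```

Passing the client in as a parameter keeps the function easy to test with a stub. Inside a Lambda function the client would normally be created once, outside the handler.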

To specify which logs should be searched, the Lambda Function fetches a list of log sources from the SSM Parameter Store. These log sources can be configured as needed to ensure that the correct data is being searched.
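As a minimal sketch of that lookup, assume the log sources are stored as a comma-separated list in a single parameter. The post does not describe the storage format, so both that layout and the function name are assumptions. `ssm_client` is expected to behave like `boto3.client("ssm")`.

```python
def load_log_groups(ssm_client, parameter_name):
    """Return the log group names stored in one SSM parameter.

    Assumes the parameter value is a comma-separated list (for example a
    StringList parameter); the real application may store it differently.
    """
    response = ssm_client.get_parameter(Name=parameter_name)
    raw_value = response["Parameter"]["Value"]
    # Drop surrounding whitespace and ignore empty entries.
    return [name.strip() for name in raw_value.split(",") if name.strip()]
```

With this layout, changing which logs are searched only requires updating the parameter value, not redeploying the function.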

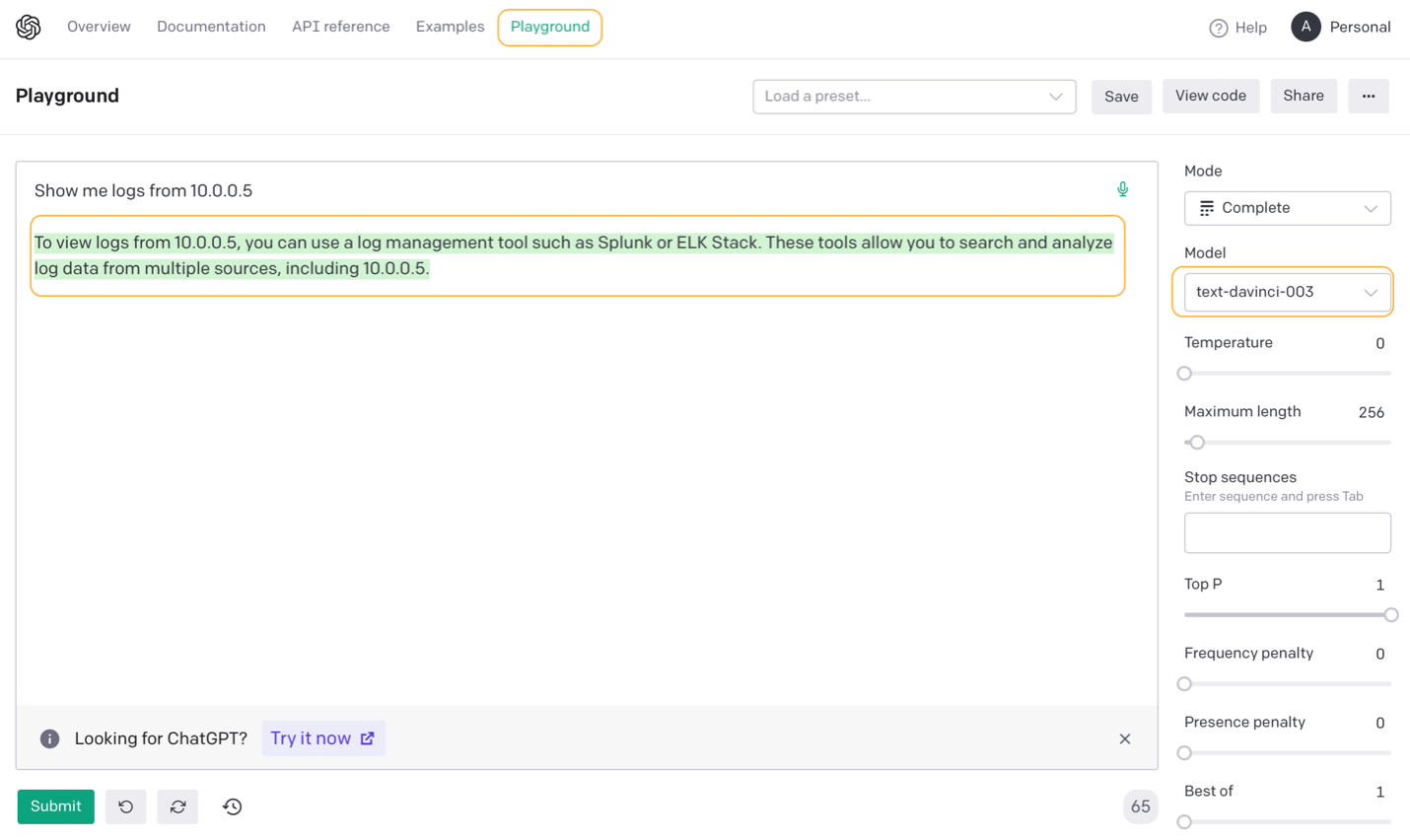

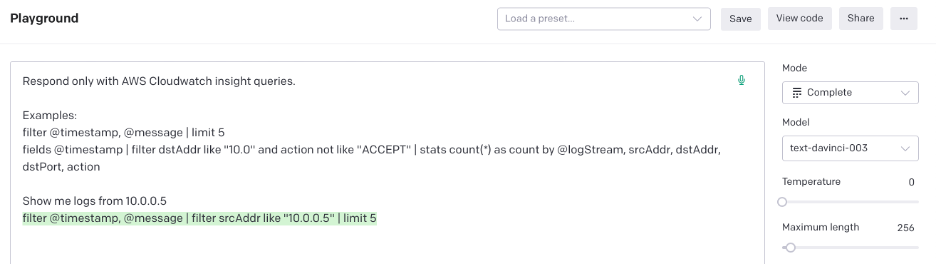

Although OpenAI's models are very powerful, they are also very general. If we use a model without any tuning, the response we get may not be suitable for our needs. The following shows text-davinci-003 being tested without any tuning:

One way to tune the model is through fine-tuning (https://platform.openai.com/docs/guides/fine-tuning), which involves building hundreds of sample prompts and completions, uploading them to OpenAI, and creating a tuned model. However, this method requires a large number of examples.

More on fine-tuning can be found here: https://github.com/Ahmed-AG/Cloudwatch-bot

Instead of fine-tuning, we chose a different method: we provide "instructions" and a few examples within the prompt itself. Our prompt contains the instructions, a few CloudWatch Logs Insights queries as examples, and the question. Every time a human request is submitted, the instructions text is concatenated with the request and sent to the OpenAI model. This approach produces a precise CloudWatch Logs Insights query as the completion, which we can then use in our code.
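The concatenation step can be sketched as follows. The instruction text, the two example pairs, and the `Request:`/`Query:` labels are hypothetical stand-ins, not the ones used in the webcast.

```python
# Hypothetical instruction text; the webcast's actual wording is not shown here.
INSTRUCTIONS = (
    "Translate the request into a CloudWatch Logs Insights query. "
    "Reply with the query only."
)

# Hypothetical few-shot examples pairing a request with an Insights query.
EXAMPLES = [
    ("Show the 20 most recent log events",
     "fields @timestamp, @message | sort @timestamp desc | limit 20"),
    ("Count events per hour",
     "stats count(*) by bin(1h)"),
]


def build_prompt(human_request, instructions=INSTRUCTIONS, examples=EXAMPLES):
    """Concatenate instructions, examples, and the user's request."""
    parts = [instructions, ""]
    for request, query in examples:
        parts.append(f"Request: {request}")
        parts.append(f"Query: {query}")
        parts.append("")
    # End on an open "Query:" label so the completion is the query itself.
    parts.append(f"Request: {human_request.strip()}")
    parts.append("Query:")
    return "\n".join(parts)
```

Because the instructions and examples are resent with every request, the prompt length grows with the number of examples, not with the user's question.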

Here is an example of submitting instructions along with the question:

The downside of this approach is that it can be expensive, because a large number of tokens is sent with every request. The ChatGPT API (gpt-3.5-turbo), released after the webcast, is 10 times cheaper than text-davinci-003.
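To make the cost difference concrete, here is a small arithmetic sketch. It assumes the list prices published around the time of the webcast, $0.02 per 1K tokens for text-davinci-003 and $0.002 per 1K tokens for gpt-3.5-turbo. Treat both figures as assumptions and check current pricing before relying on them.

```python
# Assumed prices in USD per 1,000 tokens; verify against current pricing.
DAVINCI_PRICE_PER_1K = 0.02
TURBO_PRICE_PER_1K = 0.002


def request_cost_usd(token_count, price_per_1k_tokens):
    """Estimate the cost of one request from its total token count."""
    return token_count / 1000 * price_per_1k_tokens


def monthly_cost_usd(tokens_per_request, requests_per_month, price_per_1k_tokens):
    """Estimate monthly spend for a fixed prompt size and request volume."""
    return request_cost_usd(tokens_per_request, price_per_1k_tokens) * requests_per_month
```

Under these assumed prices, a hypothetical 1,500-token prompt costs about 3 cents per request on text-davinci-003 and about 0.3 cents on gpt-3.5-turbo.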

Indeed, the ChatGPT API and GPT-4 are designed to maintain context, but to achieve that, we need to send the complete chat history with each request. Other libraries, such as LangChain, can be used to chain steps together. For instance, we can use it to find the IP addresses that have connected to a particular host, run a whois lookup on them, and finally look for other systems those IP addresses have communicated with. This allows for a more comprehensive and streamlined approach to investigating an incident automatically.
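Sending the complete chat history can be sketched as follows. The `ChatSession` class and the `send_messages` callable are hypothetical: `send_messages` stands in for whatever code calls the chat API with a list of `role`/`content` messages and returns the assistant's reply text.

```python
class ChatSession:
    """Keep the running conversation and resend all of it on every turn."""

    def __init__(self, send_messages, system_prompt):
        # send_messages is a hypothetical callable: list of messages -> reply text.
        self._send = send_messages
        self.messages = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text):
        self.messages.append({"role": "user", "content": user_text})
        # The model keeps no state between calls, so pass the full history.
        reply = self._send(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

Because every turn resends all earlier turns, the token count (and therefore the cost) grows with the length of the conversation.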

We expect to learn more about this as research continues and more products are developed.

Yes, as a matter of fact, we have masked the AWS account number and a few other fields in the code. This was done statically, but it's also possible to implement a more dynamic approach.
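One form a more dynamic approach might take is pattern-based masking. The sketch below is an assumption, not the application's code: it replaces any standalone 12-digit number, which is the format of an AWS account ID, with a placeholder.

```python
import re

# AWS account IDs are 12-digit numbers. The lookarounds skip longer digit runs,
# such as 13-digit millisecond timestamps.
ACCOUNT_ID_PATTERN = re.compile(r"(?<!\d)\d{12}(?!\d)")


def mask_account_ids(text, placeholder="XXXXXXXXXXXX"):
    """Replace every standalone 12-digit number in text with a placeholder."""
    return ACCOUNT_ID_PATTERN.sub(placeholder, text)
```

This pattern will also mask other 12-digit values that are not account IDs, so a real deployment would likely need tighter rules and additional patterns for the other sensitive fields.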

We have seen many examples of users asking ChatGPT to create malicious files or to explain how to use certain tools. That is the most basic use. But it is not inconceivable that in the near future we could see AI-based malware. For example, malware could use AI to craft phishing emails and gain initial access, then use AI to determine its next steps automatically: scanning the environment, looking for vulnerabilities, pivoting to other systems, and so on. Essentially, it would do what a human hacker or pentester would do, only much faster.

In this particular scenario, the application doesn't have access to the log groups where query logs are stored. They are stored in a separate AWS account. Therefore, any malicious actor, including an APT, would need to adopt a different approach to gain access to these logs.

Yes, a simplified version is published here: https://github.com/Ahmed-AG/Cloudwatch-bot

Ahmed is the founder of Cyberdojo with 17+ years in cloud, network, and application security. He specializes in GenAI security and has led projects in cloud security, application security, and incident response.

Read more about Ahmed Abugharbia