
This post, like the webcast, is in collaboration with the amazing Detection Engineer lead at SCYTHE, Christopher Peacock.

Have you ever received a new cyber threat intelligence report and wondered if you could catch that adversary? Perhaps you’ve conducted an assessment (pen test, red team, or purple team) that left you wanting greater detection coverage? In either case, intelligence should drive detection engineering to ensure coverage of real-world threats targeting your organization.

Every environment is unique and needs custom detections tailored to the environment and its threats. Purple teaming shows us, with a data-driven degree of confidence, what it would look like if a threat were to attack. Adversary emulation allows Blue Teams and Detection Engineers to identify alerting gaps, audit data sources, and develop detections around common questions like, “is it normal for the targeted process to behave that way in our environment?”

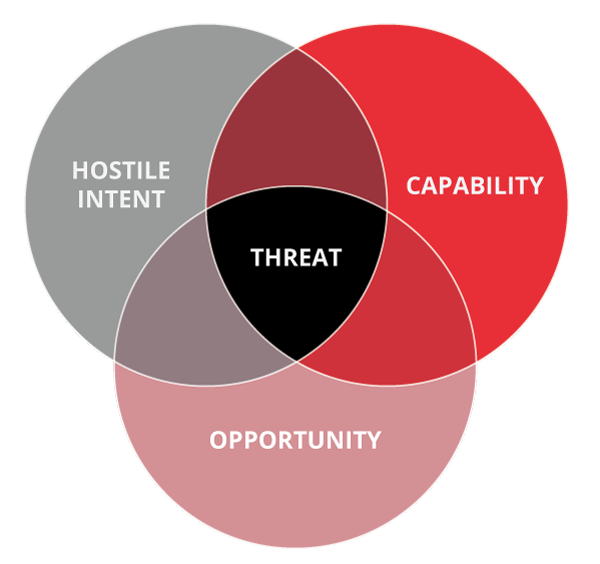

To be threat-informed, we must first understand what a threat is, which allows us to focus on threats applicable to our environment. So, what is a threat? A threat is the intersection of intent, capability, and opportunity.

Identifying who may have the intent to target the organization is crucial. Threat actors' intent varies, but some examples are for-profit cybercrime, corporate espionage, impacting a supply chain, or hacktivism. In addition, to achieve their intent, a threat attempts to impact one or more components of the CIA Triad (Confidentiality, Integrity, & Availability). Who intends to target an organization and why varies from organization to organization. Ranking intent for each unique organization can help drive prioritization.

Furthermore, as a threat’s capabilities increase, so does the danger to the organization. Reviewing the level of an adversary's tooling, training, talent, and supply chain can help determine how capable the adversary is. For instance, if an actor can procure undisclosed exploits, this could increase their capabilities. For a deeper understanding of this and how to prioritize threats for an organization, it's recommended to study Andy Piazza’s Quantifying Threat Actors with Threat Box post.


Andy Piazza Threat Box Example

The last component of a threat is opportunity. Opportunity is the area an organization can control. Organizations can reduce opportunity by implementing controls such as patching, network segmentation, and application control. The more controls in place, the less opportunity is presented to the adversary. Conversely, fewer controls offer greater opportunity to threat actors. This is considered the low-hanging fruit component.
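The idea that a threat is the intersection of intent, capability, and opportunity can be sketched as a simple ranking. This is only an illustration, not the Threat Box method: the 0 to 5 scales, the multiplication, and the actor names are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreatActor:
    """Analyst-assigned scores on an assumed 0 (absent) to 5 (high) scale."""
    name: str
    intent: int       # how motivated the actor is to target the organization
    capability: int   # tooling, training, talent, supply chain
    opportunity: int  # exposure left after controls such as patching


def threat_score(actor: ThreatActor) -> int:
    # Multiplying mirrors "intersection": if any component is 0,
    # the score is 0 and the actor is not treated as a threat.
    return actor.intent * actor.capability * actor.opportunity


def rank_threats(actors):
    """Return actors ordered from highest to lowest score."""
    return sorted(actors, key=threat_score, reverse=True)


# Hypothetical actors and scores, for illustration only.
actors = [
    ThreatActor("Actor A", intent=5, capability=3, opportunity=2),
    ThreatActor("Actor B", intent=2, capability=5, opportunity=2),
    ThreatActor("Actor C", intent=4, capability=4, opportunity=4),
    ThreatActor("Actor D", intent=5, capability=5, opportunity=0),
]

ranked = rank_threats(actors)
for actor in ranked:
    print(f"{actor.name}: {threat_score(actor)}")
```

Lowering an actor's opportunity score by adding controls moves that actor down the ranking, which is the lever the organization controls.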

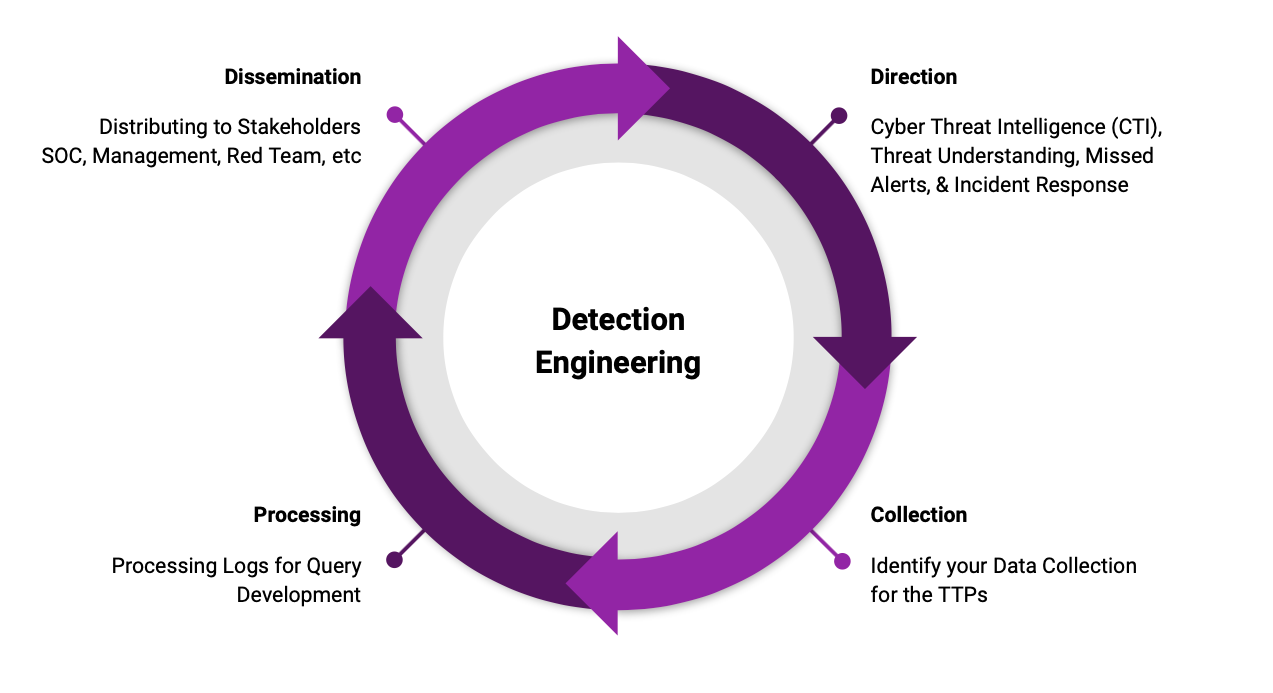

Once there is an understanding of the adversaries who pose a threat to the organization, cyber threat intelligence can gather, process, and disseminate procedure-level information to a red team. The red team then develops an emulation plan for the purple team engagement. After the emulation is conducted, detection gaps are identified by analyzing what procedures do not result in an alert. It is here that we begin the process of threat-informed detection engineering.
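Identifying detection gaps amounts to comparing the procedures that were executed against the procedures that produced an alert. A minimal sketch follows, assuming the engagement results are available as simple records; the procedure IDs, commands, and field names are hypothetical.

```python
# Procedures run during the purple team engagement (hypothetical records).
procedures = [
    {"id": "P1", "technique": "T1033", "command": "cmd.exe /c whoami"},
    {"id": "P2", "technique": "T1059.001", "command": "powershell.exe -File example.ps1"},
    {"id": "P3", "technique": "T1059.003", "command": "cmd.exe /c example.bat"},
]

# IDs of the procedures that resulted in at least one alert (hypothetical).
alerted_procedure_ids = {"P2"}


def find_detection_gaps(procedures, alerted_ids):
    """Return the procedures that did not result in an alert."""
    return [p for p in procedures if p["id"] not in alerted_ids]


def coverage(procedures, alerted_ids):
    """Fraction of executed procedures that produced an alert."""
    if not procedures:
        return 0.0
    detected = sum(1 for p in procedures if p["id"] in alerted_ids)
    return detected / len(procedures)


gaps = find_detection_gaps(procedures, alerted_procedure_ids)
for gap in gaps:
    print(f"No alert for {gap['id']} ({gap['technique']}): {gap['command']}")
print(f"Alert coverage: {coverage(procedures, alerted_procedure_ids):.0%}")
```

The resulting list of gaps is the input to the detection engineering work described next.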

As previously mentioned, the direction should come from what threats an organization faces. This can come from cyber threat intelligence, purple team engagements, reviewing incidents, or email sandbox samples. Do note that the detection cycle is circular to account for feedback such as alert verification, alert bypass, false-positive tuning, and procedural variance testing. The takeaway here is that organizations should focus detection resources on their relevant threats.

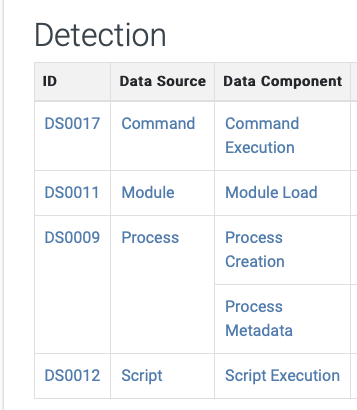

Collection is where a detection engineer starts to understand what data is generated by each procedure conducted. There are multiple ways of doing this. One is searching through logs related to the Process ID(s) used in the purple team engagement. Another is analyzing what data should be present by researching the Data Components listed in the MITRE ATT&CK Techniques, such as the sample below for Command and Scripting Interpreter: PowerShell (T1059.001). If collection gaps are identified at any point, they should be documented.

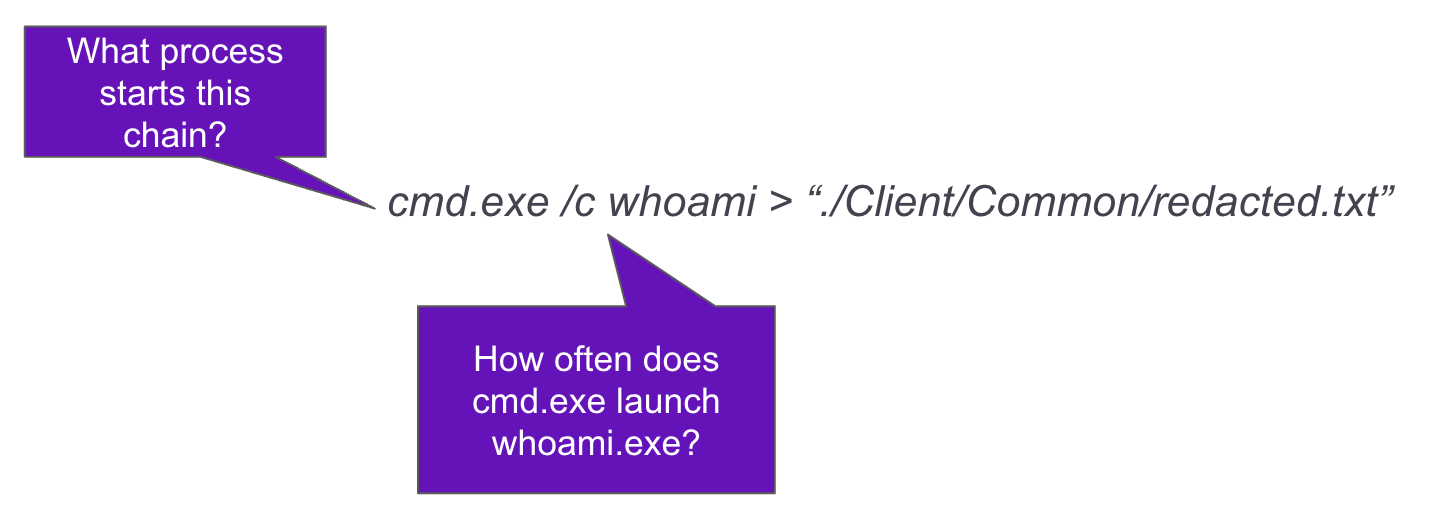

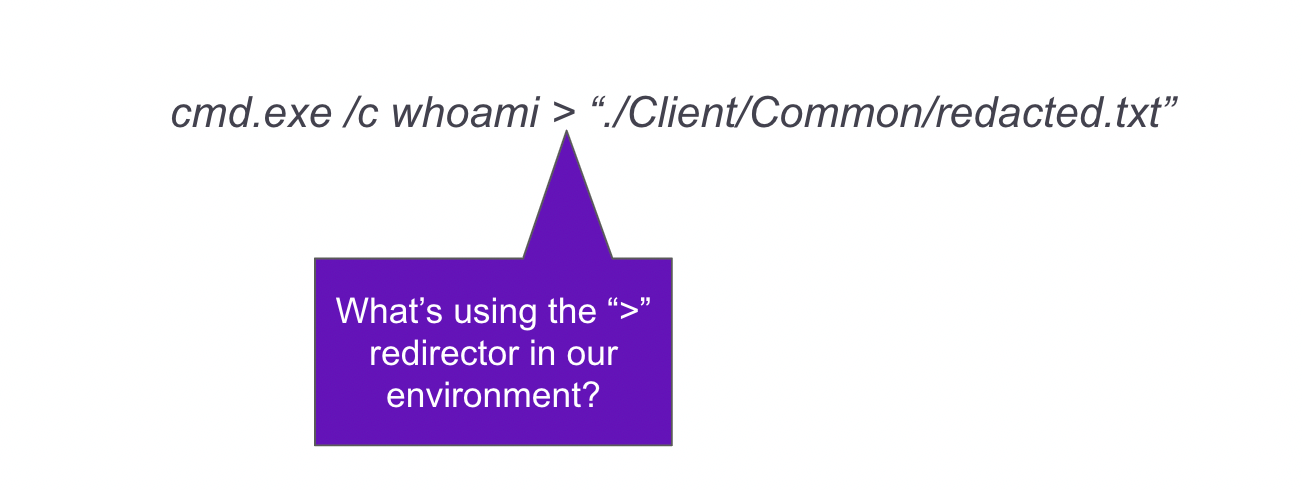

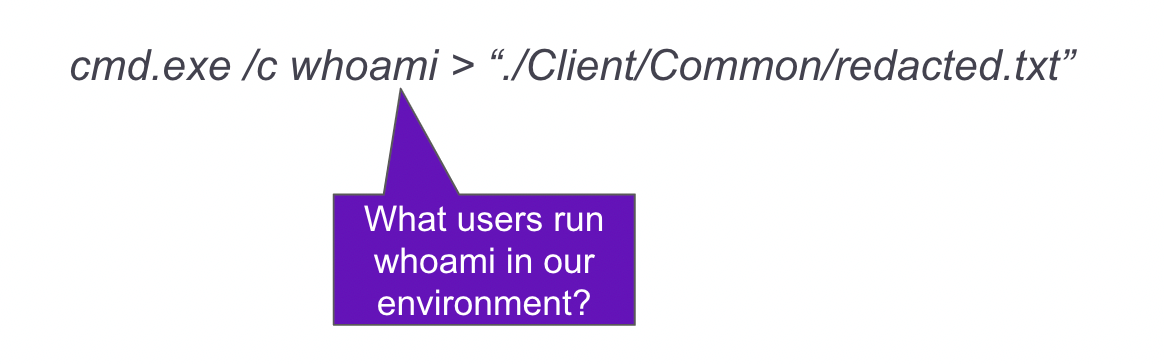
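Both collection approaches can be sketched together: gather the events tied to a Process ID from the engagement (including child processes), then compare the data components that were actually seen against the ones expected. The event fields, PIDs, and the expected list here are assumptions for illustration; the technique page in ATT&CK is the authoritative source for Data Components.

```python
def collect_process_tree(events, root_pid):
    """Return events for root_pid and any descendant processes.

    Assumes PIDs are not reused within the time window being searched.
    """
    pids = {root_pid}
    changed = True
    while changed:  # repeat until no new child PIDs are discovered
        changed = False
        for event in events:
            if event.get("ppid") in pids and event["pid"] not in pids:
                pids.add(event["pid"])
                changed = True
    return [e for e in events if e["pid"] in pids]


def collection_gaps(expected_components, events):
    """Return expected data components that no collected event provides."""
    seen = {e["data_component"] for e in events}
    return sorted(set(expected_components) - seen)


# Hypothetical, already-normalized log events.
events = [
    {"pid": 4120, "ppid": 800, "data_component": "Process Creation", "image": "powershell.exe"},
    {"pid": 4120, "ppid": 800, "data_component": "Command Execution", "image": "powershell.exe"},
    {"pid": 5312, "ppid": 4120, "data_component": "Process Creation", "image": "whoami.exe"},
    {"pid": 999, "ppid": 1, "data_component": "Process Creation", "image": "unrelated.exe"},
]

# Illustrative subset of components to expect for the procedure.
expected = ["Process Creation", "Command Execution", "Script Execution"]

related = collect_process_tree(events, root_pid=4120)
missing = collection_gaps(expected, related)
print(f"{len(related)} related events; collection gaps to document: {missing}")
```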

Once collection has been verified, processing can begin. In processing, it’s best to start by hypothesizing a broad search to identify the activity in the procedure. If there are few false positives, the search may be deployed as is in an alert. Oftentimes tuning is necessary, and some common processing questions can help with it. In our processing questions, we’ll be using the following procedure example:

cmd.exe /c whoami > "./Client/Common/redacted.txt"

The first part of processing is to understand the components of the procedure and how the actor is leveraging them to achieve their goal. In the example given, cmd.exe runs the whoami command to enumerate the current user and writes the result to a text file using the greater-than (>) redirection operator. Once the procedure is understood, a few more questions can help hypothesize searches to detect the activity. A common first question is: how often do these components normally appear in the environment? If whoami is rarely used in the environment, its usage can be set to alert. Is it common in the environment for whoami’s output to be redirected to a text file? To get more granular, further tuning questions can be asked, such as: are there parent processes that can be tuned out or into?
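The broad-then-narrow approach can be sketched as two searches over process command lines plus a baseline count by parent process. The regular expressions, field names, and sample events are assumptions for illustration; a production rule would be written in the query language of the SIEM or EDR in use.

```python
import re
from collections import Counter

# Broad hypothesis: any use of whoami.
WHOAMI = re.compile(r"\bwhoami(?:\.exe)?\b", re.IGNORECASE)

# Narrower hypothesis: whoami output redirected with > or >>.
WHOAMI_REDIRECTED = re.compile(
    r"\bwhoami(?:\.exe)?\b[^|&]*>{1,2}\s*\S", re.IGNORECASE
)

# Hypothetical process-creation events.
events = [
    {"parent_image": "agent.exe",
     "command_line": 'cmd.exe /c whoami > "./Client/Common/redacted.txt"'},
    {"parent_image": "explorer.exe", "command_line": "whoami /all"},
    {"parent_image": "cmd.exe", "command_line": "ipconfig /all"},
    {"parent_image": "powershell.exe", "command_line": "whoami"},
]

whoami_events = [e for e in events if WHOAMI.search(e["command_line"])]
redirected = [e for e in events if WHOAMI_REDIRECTED.search(e["command_line"])]

# Baseline: which parent processes normally launch whoami, and how often?
parents = Counter(e["parent_image"] for e in whoami_events)

print(f"whoami executions: {len(whoami_events)}")
print(f"whoami with redirected output: {len(redirected)}")
print(f"parents launching whoami: {dict(parents)}")
```

If the broad search is rare enough in the baseline it can alert on its own; otherwise the narrower search and the parent counts show where tuning is possible.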

Another question is, are there users to tune out or into? Perhaps the process is only run by users who are developers and can be flagged if executed by non-developers.
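Tuning by user can be sketched as an allowlist check layered on the broad search. The developer account names and event fields are hypothetical; in practice the list would likely come from a directory group rather than a hard-coded set.

```python
# Hypothetical accounts for whom running whoami is considered normal.
DEVELOPERS = frozenset({"dev_alice", "dev_bob"})


def should_alert(event, developers=DEVELOPERS):
    """Alert on whoami usage unless the user is a known developer."""
    runs_whoami = "whoami" in event["command_line"].lower()
    is_developer = event["user"].lower() in developers
    return runs_whoami and not is_developer


# Hypothetical events.
events = [
    {"user": "dev_alice", "command_line": "whoami /all"},
    {"user": "finance_carol", "command_line": "cmd.exe /c whoami"},
    {"user": "finance_carol", "command_line": "ipconfig /all"},
]

flagged = [e for e in events if should_alert(e)]
for event in flagged:
    print(f"ALERT: {event['user']} ran {event['command_line']}")
```

Tuning users out this way trades coverage for noise reduction: a compromised developer account would not alert, so the exclusion is worth recording alongside the alert.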

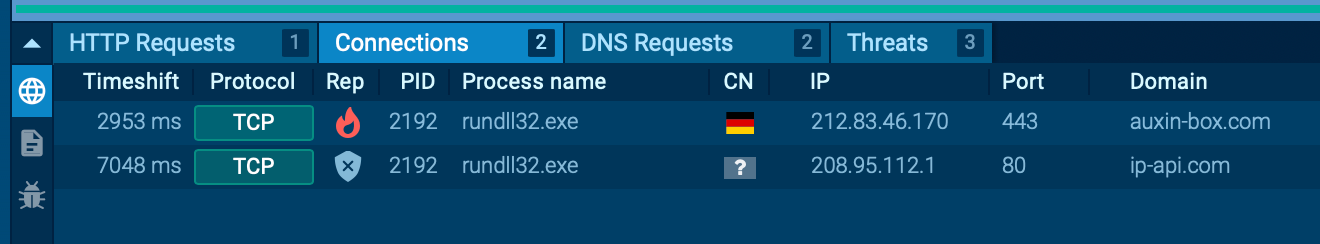

https://app.any.run/tasks/6360b059-b623-45fd-b219-d585e9201418/

Delivering to stakeholders is the last step in the process. It’s here that finalized alerts are made actionable and submitted for testing. The most common deliverable is an alert to be implemented, including documentation such as context, reasoning, and potential responses for the analyst working the alerts. Other deliverables include metrics for management, or records for cyber threat intelligence that map content to applicable ATT&CK Technique IDs, log sources, or security solutions. Finally, the alert logic should be tested, including testing the alert, the response to the alert, and potential bypasses. If the organization doesn’t have an Alert and Detection Framework, it’s recommended to review Palantir’s ADS Framework and implement the components that fit the organization’s unique resources, requirements, and workflows.
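One way to keep deliverables consistent is to record each alert in a structure that holds the documentation and test results described above, and to check it for completeness before hand-off. The sketch below is an assumption-laden illustration: the field names, the three test names, and the sample alert are hypothetical and are not taken from Palantir's ADS Framework.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical test names covering the alert, the response, and bypasses.
REQUIRED_TESTS = ("alert_fires", "response_exercised", "bypass_attempted")
REQUIRED_DOCS = ("context", "reasoning", "responses",
                 "attack_technique_ids", "log_sources")


@dataclass
class AlertDeliverable:
    name: str
    query: str
    context: str
    reasoning: str
    responses: list[str]
    attack_technique_ids: list[str]
    log_sources: list[str]
    tests_passed: dict[str, bool] = field(default_factory=dict)


def missing_items(deliverable: AlertDeliverable) -> list[str]:
    """List empty documentation fields and tests not yet passed."""
    missing = [name for name in REQUIRED_DOCS
               if not getattr(deliverable, name)]
    missing += [f"test:{test}" for test in REQUIRED_TESTS
                if not deliverable.tests_passed.get(test, False)]
    return missing


# Hypothetical deliverable for the whoami example.
alert = AlertDeliverable(
    name="whoami output redirected to file",
    query="(placeholder for the deployed search)",
    context="Seen during a purple team engagement.",
    reasoning="whoami output is rarely written to disk in this environment.",
    responses=["Review the parent process", "Inspect the output file"],
    attack_technique_ids=["T1033"],
    log_sources=["Process creation logs"],
    tests_passed={"alert_fires": True, "response_exercised": True},
)

todo = missing_items(alert)
print("Ready for delivery" if not todo else f"Still missing: {todo}")
```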

Detection Engineering is a critical process in Purple Team Exercises and Operationalized Purple Teaming. Without improvements to people, process, and technology, assessments are less valuable. We want to test, measure, and improve our overall security in the most efficient way possible. This is why we Purple Team. Detection Engineering is the part where we improve and can then show the improvement. We want to detect everything, but we have to start by detecting the most relevant threats to our organization. Being threat-informed is a Cyber Threat Intelligence process that is also part of Purple Teaming. We hope you found this valuable. Stay tuned for the next post in our series, where we start going through use cases. If this topic interests you, take formal SANS courses that cover detection engineering, like SEC699: Purple Team Tactics - Adversary Emulation for Breach Prevention & Detection!

Jorge Orchilles currently serves as a Senior Director at Verizon, where he leads the Readiness and Proactive Security Team. His team specializes in Exposure & Vulnerability Management, Penetration Testing, Red Team, Purple Team, and AI Red Team.
