In the three previous installments of this series, I introduced security intelligence and how to begin thinking about sophisticated intrusions. In this entry, I will discuss how my team at Lockheed Martin defines the adversaries that we track using the definitions covered previously, with a particular focus on the kill chain. As always, credit for these techniques belongs to my team and the hard work of evolutionary CND we've done over the past 6 years.

The Campaign

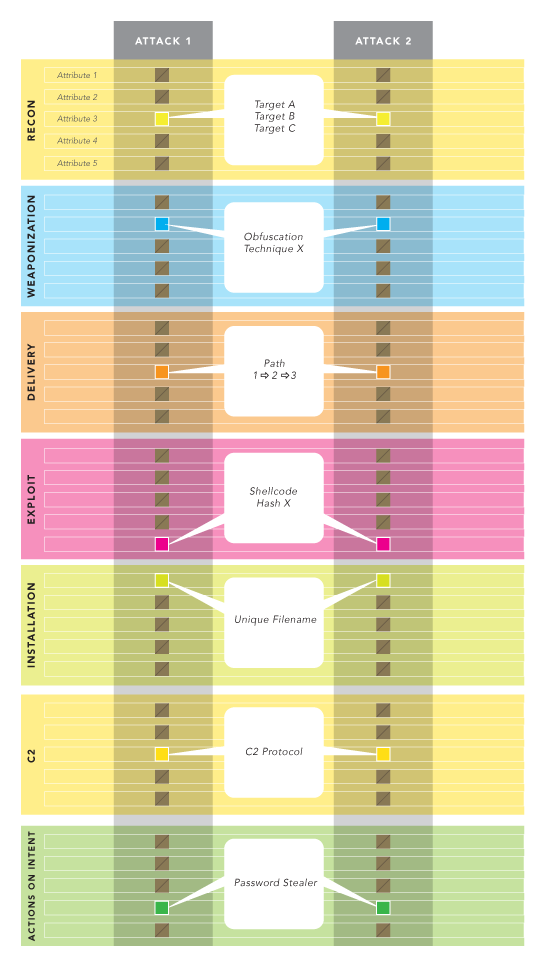

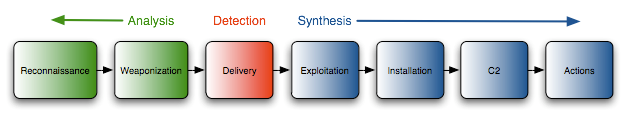

A single intrusion, as we have already discussed, can be modeled as 7 phases. Within each of these phases is a high-dimensional set of indicators - computer scientists would call them "attributes" - that together uniquely define that intrusion. For example, a C2 callback domain is an indicator attribute, and talktome.bad.com is the corresponding value of that indicator. The targeting used (reconnaissance), the way in which the malicious payload is obscured (weaponization), the path the payload takes (delivery), the way the payload is invoked (exploit), where the backdoor is hidden on the system (installation), the protocol used to call back to the adversary (C2), and habits of the adversary once control is established (actions on intent) are all categorical examples of these indicators. It is up to the analyst to discover the significant or uniquely identifying indicators in an intrusion. In some cases, there are common indicators - for example, the last-hop email relay used to deliver a message will be significant in most similar intrusions, excluding those delivered through webmail. In others, the attributes can be unique and surprising - a piece of metadata, a string in the binary of a backdoor, a predictably-malformed HTTP request to check for connectivity.

Often, attributes cannot be recognized as significant until they are observed more than once. Figure 1 illustrates two different intrusions, with some categorical areas where they might overlap. By identifying these consistencies across multiple intrusions, common indicators begin to stand out.
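As a rough illustration of how such overlaps might be surfaced, the sketch below records each indicator as a (phase, attribute, value) triple and reports the ones seen in more than one intrusion. This is a minimal sketch, not our tooling: the `Indicator` type, the `shared_indicators` helper, the intrusion names, and every value other than talktome.bad.com are hypothetical and exist only for this example.

```python
from collections import defaultdict
from typing import NamedTuple


class Indicator(NamedTuple):
    """Hypothetical record type: one observed attribute/value pair."""
    phase: str      # kill chain phase, e.g. "c2"
    attribute: str  # kind of indicator, e.g. "callback_domain"
    value: str      # observed value, e.g. "talktome.bad.com"


def shared_indicators(intrusions):
    """Return indicators seen in two or more intrusions.

    intrusions: dict mapping an intrusion id to a set of Indicator.
    Result: dict mapping each repeated Indicator to the sorted ids
    of the intrusions in which it was observed.
    """
    seen = defaultdict(set)
    for intrusion_id, indicators in intrusions.items():
        for indicator in indicators:
            seen[indicator].add(intrusion_id)
    return {ind: sorted(ids) for ind, ids in seen.items() if len(ids) > 1}


# Made-up data: two intrusions that differ in delivery infrastructure
# but reuse the same C2 domain and installation filename.
intrusions = {
    "intrusion-1": {
        Indicator("delivery", "last_hop_relay", "198.51.100.7"),
        Indicator("c2", "callback_domain", "talktome.bad.com"),
        Indicator("installation", "filename", "svch0st.exe"),
    },
    "intrusion-2": {
        Indicator("delivery", "last_hop_relay", "203.0.113.9"),
        Indicator("c2", "callback_domain", "talktome.bad.com"),
        Indicator("installation", "filename", "svch0st.exe"),
    },
}

overlap = shared_indicators(intrusions)
for ind, ids in sorted(overlap.items()):
    print(ind.phase, ind.attribute, ind.value, ids)
```

Exact-match comparison is the simplest possible choice here. In practice an analyst would likely also want fuzzier comparisons (for example, the same netblock or the same naming pattern), which this sketch does not attempt.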

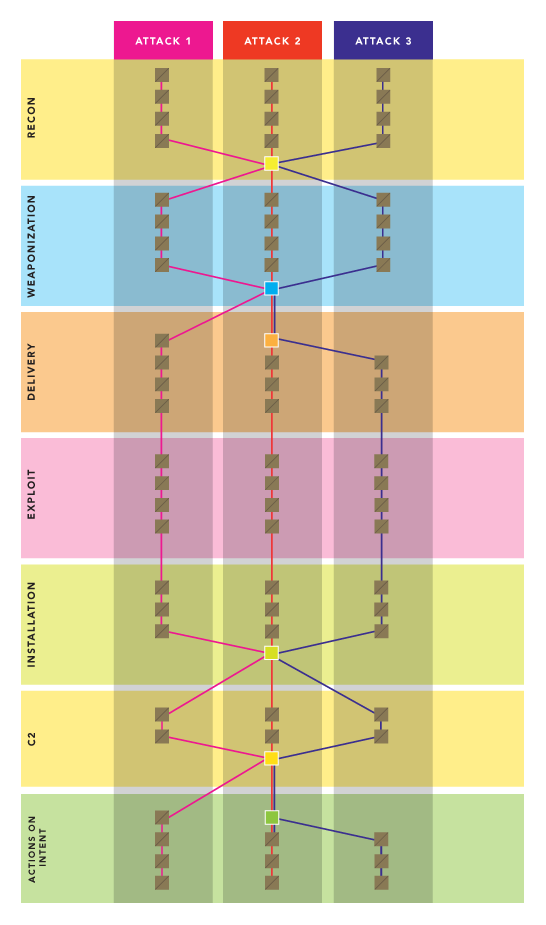

These inflection points, as shown by colored squares in Figure 2, represent the key indicators that, as a set, define an adversary. The more repeatable an indicator, the more critical it is to the adversary - often for reasons that are impossible to divine from data that can be gleaned from defense alone - and the more critical it is to our definition. Really, what we're modeling here isn't an individual, but a linked group of successful and failed intrusions. For that reason, the term "campaign" is more appropriate than "adversary," although occasionally we will use them interchangeably.

There is no rule of thumb or objective threshold to inform when linked intrusions should become a campaign. The best measure is results: if a set of indicators effectively predicts similar intrusions when observed in the future, then they have probably been selected properly. If the TTPs (Tactics, Techniques, and Procedures) of a campaign associated with a set of indicators are highly disjointed, or they link to benign activity, then the indicators are probably ill-defined or poorly selected. We have found that in cases where three or more intrusion attempts are associated by attributes that are relatively unique, would be difficult for an adversary to change, and exist across multiple phases of the kill chain, it's a good sign that we might be looking at a single "campaign."
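The heuristic in the last sentence can be roughed out in code, with the caveat that it is only a starting point for an analyst and not an objective test. The sketch below assumes indicators are (phase, attribute, value) tuples, links two intrusions when their shared indicators cover at least two distinct phases, and reports linked groups of three or more. The function name, the pairwise linking rule, and the sample data are assumptions made for illustration. Whether an attribute is "relatively unique" or "difficult to change" is a judgment the code does not make, so it assumes the input has already been filtered to indicators the analyst considers significant.

```python
from itertools import combinations


def candidate_campaigns(intrusions, min_intrusions=3, min_phases=2):
    """Group intrusions linked by shared indicators spanning several phases.

    intrusions: dict of intrusion id -> set of (phase, attribute, value).
    Two intrusions are linked when the indicators they share cover at
    least min_phases distinct kill chain phases. Linked groups containing
    min_intrusions or more intrusions are returned as sorted lists.
    """
    parent = {i: i for i in intrusions}  # union-find forest

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in combinations(intrusions, 2):
        shared_phases = {phase for phase, _, _ in intrusions[a] & intrusions[b]}
        if len(shared_phases) >= min_phases:
            parent[find(a)] = find(b)  # merge the two groups

    groups = {}
    for i in intrusions:
        groups.setdefault(find(i), []).append(i)
    return sorted(sorted(g) for g in groups.values() if len(g) >= min_intrusions)


# Made-up observations. A, B and C share a weaponization artifact and a
# C2 domain; D shares only the C2 domain; E shares nothing.
weapon = ("weaponization", "pdf_author", "jdoe")
c2 = ("c2", "callback_domain", "talktome.bad.com")
observed = {
    "A": {weapon, c2, ("delivery", "last_hop_relay", "198.51.100.7")},
    "B": {weapon, c2},
    "C": {weapon, c2, ("installation", "filename", "svch0st.exe")},
    "D": {c2},
    "E": {("delivery", "last_hop_relay", "203.0.113.9")},
}

campaigns = candidate_campaigns(observed)
print(campaigns)
```

With this data, A, B and C form one candidate group, while D is left out because a single shared phase does not meet the two-phase threshold. Both thresholds are parameters precisely because, as noted above, there is no objective cutoff.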

Be aware that adversaries shift tactics over time. A campaign is not static, nor are the key indicators or their corresponding values. We've seen some adversaries use the same delivery and C2 infrastructure for years, while others will shift from consistent infrastructure in the delivery and C2 phases to highly-variable infrastructure in the delivery phase but consistent targeting and weaponization techniques. Some adversaries will have consistent key indicators, such as tool artifacts in the delivery and weaponization phases, but the specific indicator values may change over time. Without constant and complete analysis of sophisticated intrusions, knowledge of campaigns becomes stale and ineffective at predicting future intrusions.

Gathering Intel through Kill Chain Completion

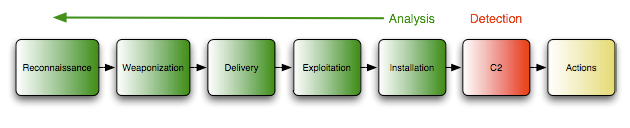

In order to have the data set necessary to link intrusions and identify key indicators, analysts must understand all phases of every sophisticated intrusion. Initial detection of an intrusion may occur at any point across the kill chain. Even if the attack is unsuccessful, detection is just the first step.

Classic incident response methodology assumes a system compromise. In this situation, where a detection happens after the installation and/or execution of malicious code, adversaries have successfully executed many steps in their intrusion. As the intrusion progresses forward in the kill chain, so the corresponding analysis progresses backward (Fig. 3). Analysts must reconstruct every prior stage, necessitating not only the proper tools and infrastructure to do so but also deep network and host forensic skills. Less mature response teams will often get stuck in the delivery to installation phases. Without knowledge of what happened earlier in an intrusion, network defenders will be unable to define campaigns at these earlier phases, and response to intrusions will continue to happen post-compromise as this is where the detections and mitigations are. When walls are hit in analysis that prevent reconstruction of the entire chain, these barriers represent areas for improvement in instrumentation or analytical techniques. Where tools do not already exist for accurate and timely reconstruction, development opportunities exist. Here is but one area where having developers on staff to support incident responders is critical to the success of the organization.

As response organizations mature and are able to more fully build profiles of intrusion campaigns against them, they become more successful at detection prior to compromise. However, just as a post-compromise response involves a significant amount of analysis, the unsuccessful intrusion attempts matching APT campaign characteristics also require investigation. The phases executed successfully by the adversary must still be reconstructed, and the phases that were not must be synthesized to the best ability of the responders (Fig. 4). This aspect is critical to identifying any TTP change that may have resulted from a successful compromise. Perhaps the most attention-grabbing example is identification of 0-day exploits used by an APT actor at the Delivery phase, before the exploit is invoked.
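One way to keep track of this work is to note, for each phase, whether it has to be reconstructed or synthesized, and which phases still have no findings. The sketch below is a minimal illustration under stated assumptions: the phase names come from the list earlier in this post, while `analysis_plan`, `analysis_gaps`, and the sample findings are hypothetical.

```python
# The seven phases, in order, as named earlier in this post.
KILL_CHAIN = [
    "reconnaissance",
    "weaponization",
    "delivery",
    "exploit",
    "installation",
    "c2",
    "actions on intent",
]


def analysis_plan(last_executed):
    """Label each phase by the kind of analysis it needs.

    last_executed: the last phase the adversary completed before the
    intrusion was stopped or detected. Phases up to and including it
    actually happened and must be reconstructed; later phases never
    happened and must be synthesized (e.g. by reverse engineering the
    payload to learn what it would have done).
    """
    cutoff = KILL_CHAIN.index(last_executed)
    return {
        phase: "reconstruct" if i <= cutoff else "synthesize"
        for i, phase in enumerate(KILL_CHAIN)
    }


def analysis_gaps(plan, findings):
    """List phases, in kill chain order, with no recorded findings yet."""
    return [phase for phase in plan if not findings.get(phase)]


# Made-up case: a malicious email was delivered but blocked before the
# exploit was invoked.
plan = analysis_plan("delivery")
findings = {
    "weaponization": ["document metadata"],
    "delivery": ["last-hop relay 198.51.100.7"],
    "c2": ["talktome.bad.com, recovered from the backdoor that would have been dropped"],
}
gaps = analysis_gaps(plan, findings)
for phase in gaps:
    print(phase, "->", plan[phase])
```

Each remaining gap points either at analysis still to be done or at a limit in instrumentation or technique.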

Synthesis clearly demonstrates the criticality of malware reverse engineering skills. It is likely that the backdoor that would have been dropped, even if it is of a known family and uses a known C2 protocol, also contains new indicators further defining the infrastructure at the disposal of adversaries. Examples include indicators such as C2 callback IP addresses and fully-qualified domain names. Perhaps minor changes in the malicious code produce new unique hashes, or a minor version difference results in a slightly different installation filename that could be unique. While anti-virus is typically a bad example of detection in the context of APT intrusions, there are times when it can be of value for older variants of code. For instance, how many of you reading this analyze emails that are detected by your perimeter anti-virus system? If the detection is for a particular backdoor uniquely linked to an APT campaign, the email could contain valuable indicators about the adversary's delivery or C2 infrastructure that might be re-used later in an intrusion that your anti-virus system does not detect.

Conclusion

Detecting campaigns enables resilient detection and prevention mechanisms across an intrusion, and engages CND responders earlier in the kill chain, reducing the number of successful intrusions. It should be obvious, but it bears repeating, that a lack of specific indicators from a single intrusion prevents identification of key indicators from sequential intrusions. A lack of key indicators results in an inability to define adversaries, and an inability to define adversaries leaves network defenders responding post-compromise to every intrusion. In short, inability to reconstruct intrusions should be considered an organizational failure of CND, and intelligence-based detections prior to system compromise a success. Defining campaigns, as demonstrated here, is one effective way to facilitate success.

Michael is a senior member of Lockheed Martin's Computer Incident Response Team. He has lectured for various audiences, including SANS, IEEE, and the annual DC3 CyberCrime Convention, and teaches an introductory class on cryptography. His current work consists of security intelligence analysis and development of new tools and techniques for incident response. Michael holds a BS in computer engineering and an MS in computer science, has earned GCIA (#592) and GCFA (#711) gold certifications alongside various others, and is a professional member of ACM and IEEE.