SEC595: Applied Data Science and AI/Machine Learning for Cybersecurity Professionals

Experience SANS training through course previews.

Learn MoreLet us help.

Contact usBecome a member for instant access to our free resources.

Sign UpWe're here to help.

Contact UsWeb scraping is a powerful and essential capability for cybercrime intelligence professionals.

This blog is jointly authored by Apurv Singh Gautam, Sr. Threat Research Analyst at Cyble, and Sean O’Connor, co-author of the SANS FOR589TM: Cybercrime IntelligenceTM course.

In the rapidly evolving cybercrime landscape, staying ahead of malicious actors requires a proactive approach to gathering and analyzing data. One of the most powerful tools in the arsenal of cybercrime intelligence analysts is web scraping.

Web scraping is the process of automatically extracting data from websites and web pages, enabling organizations to collect vast amounts of information quickly and efficiently.

Web scraping plays a crucial role in cybercrime intelligence by enabling analysts to keep a close watch on dark web forums, marketplaces, and other online chat platforms where cybercriminals gather and exchange information. By systematically collecting data from these sources, analysts can unearth valuable insights into emerging threats, vulnerabilities, and malicious actors' tactics, techniques, and procedures (TTPs). These insights can guide decision-making, bolster incident response capabilities, and fortify overall cybersecurity.

In this blog post, we will explore the various aspects of scraping operations on the cybercrime underground. We will delve into the different scraping methods, discuss the key use cases in cybercrime investigations, and examine the challenges of anti-scraping mechanisms and techniques to bypass them. Importantly, we will provide insights into the strategic decision-making process behind scraping operations and highlight the importance of data storage and analysis using tools like Elasticsearch and Kibana.

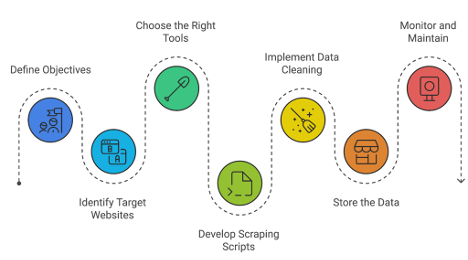

By the end of this post, readers will have a comprehensive understanding of how scraping operations can be leveraged to enhance cybercrime intelligence efforts. This knowledge will empower you, as a cybersecurity intelligence analyst, with the best practices and considerations for implementing effective scraping workflows (see Figure 1). So, let's dive in and uncover the power of scraping in the fight against cybercrime.

Scraping operations involve leveraging different software tools, libraries, and frameworks to extract data programmatically, enabling efficient and scalable information gathering (see Figure 2).

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt778fd86861c724c0/67800a5e1cd05fb8c84afe26/web-scrape-002.pngScraping operations play a crucial role in gathering valuable intelligence for combating cybercrime. By leveraging automated scraping techniques, cybercrime investigators and analysts can collect and analyze vast amounts of data from cybercrime sources. Some of the key use cases of scraping operations on the cybercrime underground include monitoring cybercrime forums and marketplaces, detecting data leaks and breaches, tracking and profiling threat actors, and investigating cybercrime networks and infrastructure (see Figure 3).

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt24cb8e3cb3bd2483/67800a5e27dd6eca1542e4c0/web-scrape-003.pngBy leveraging automated scraping techniques, cybercrime intelligence analysts can gather valuable data, identify trends and patterns, and make informed decisions to prevent, detect, and respond to cyber threats.

Website owners and administrators implement anti-scraping mechanisms to protect their content and prevent unauthorized data collection. These mechanisms pose significant challenges for cybercrime intelligence professionals who rely on scraping to gather valuable data. Some of the common anti-scraping techniques employed by websites include:

To successfully navigate the challenges posed by anti-scraping mechanisms, cybercrime intelligence analysts must employ a range of countermeasures and best practices. These techniques aim to mitigate the risk of detection and ensure the continuity of scraping operations. Some of the strategies to bypass anti-scraping measures and conduct effective scraping for cybercrime intelligence purposes include:

Anti-scraping mechanisms are constantly evolving, and websites may implement new measures to deter scrapers. As cybercrime intelligence professionals, your adaptability is key. You must continuously monitor your scraping operations, detect any changes or disruptions, and adapt your techniques accordingly. Staying up-to-date with the latest scraping technologies, tools, and best practices is essential to maintain effective scraping efforts (see Table 1).

Anti-Scraping Mechanism | Intent | Countermeasure | Impact to Scraping Operations |

CAPTCHAs and human verification challenges | Differentiate human users from automated bots | Utilize CAPTCHA-solving services or incorporate human intervention | Increases the complexity and time required for scraping |

User agent detection and blocking | Identify and block requests from known scraping tools or libraries | Rotate user agent strings and customize headers to mimic legitimate browser requests | Requires additional effort to maintain a pool of diverse user agent strings |

IP address tracking and rate limiting | Detect and block requests from IP addresses making excessive requests | Employ proxy servers and implement IP rotation strategies to distribute requests across multiple IP addresses | Increases the cost and complexity of scraping infrastructure |

Dynamic content rendering and JavaScript obstacles | Prevent scraping of content loaded dynamically through JavaScript | Use headless browsers or tools like Puppeteer or Selenium to render and extract dynamically loaded content | Increases the complexity and computational resources required for scraping |

Fingerprinting and browser profiling | Identify and block automated tools based on unique browser characteristics | Use headless browsers with configurations that closely resemble genuine browser environments and rotate browser profiles | Requires continuous monitoring and adaptation to avoid detection |

Browser automation detection | Identify and block requests from automated browser tools like Selenium or Puppeteer | Minimize the use of automation-specific code and utilize techniques like WebDriver spoofing or undetected Chrome variants | Requires staying updated with the latest detection methods and countermeasures |

CAPTCHA and challenge-response evolution | Prevent automated solving of CAPTCHAs using advanced challenge-response mechanisms | Continuously monitor and integrate the latest CAPTCHA-solving techniques and services | Increases the complexity and cost of handling CAPTCHAs in scraping operations |

Table 1: Anti-Scraping Mechanisms and Countermeasures (Source)

Effective scraping operations require careful planning and strategic decision-making. Several factors must be considered to ensure that scraping efforts are targeted, efficient, and aligned with the overall objectives of the investigation. The key aspects of decision-making when planning and executing scraping tasks for cybercrime intelligence purposes include:

Efficient storage, processing, and analysis of the data collected through scraping operations are crucial for deriving actionable insights in cybercrime investigations. Any database can store the data, but the Elastic or ELK stack provides a robust and scalable solution for managing and exploiting the scraped data.

Elasticsearch: Elasticsearch is a distributed, open-source search and analytics engine that forms the core of the ELK stack. It provides a scalable and efficient platform for storing, searching, and analyzing large volumes of structured and unstructured data.

Logstash: Logstash is a data processing pipeline that integrates with Elasticsearch to ingest, transform, and load data from various sources.

Kibana: Kibana is a powerful data visualization and exploration tool that complements Elasticsearch and Logstash in the ELK stack. It provides an intuitive web interface for querying, visualizing, and dashboarding the data stored in Elasticsearch.

Cybercrime intelligence analysts need to integrate their scraping operations with the ELK stack to leverage the Elastic stack for scraped data storage and analysis. This involves configuring the scraping tools or scripts to output the collected data in a format compatible with Logstash's input plugins, such as JSON or CSV. Once Logstash ingests the scraped data, it can be processed, enriched, and transformed using Logstash's pipeline configuration. The transformed data is then indexed in Elasticsearch, which becomes available for searching, querying, and visualization through Kibana. Kibana's Discover and Visualization tools (see Figures 4 and 5) can analyze and visualize the data using tables and charts.

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/bltfcdf287556fa1597/67800a5e0475a6dfb36584be/web-scrape-004.pnghttps://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt8ff885bac8f0238e/67800a5eb17dc9afc2221752/web-scrape-005.jpgThe powerful combination of Elastic stack, with its search and analytics capabilities, enrichment features, and intuitive visualization and exploration tools, enables efficient and effective insights extraction from collected data.

CHAOTIC SPIDER, also known as "Desorden" and previously operating as "ChaosCC," is a financially motivated cybercriminal entity active since September 2021. The group specializes in data theft and extortion, focusing its efforts on enterprises in Southeast Asia, particularly in Thailand since 2022. Desorden employs SQL injection attacks to compromise web-facing servers, exfiltrating data without resorting to encryption or destruction tactics commonly seen in double-extortion schemes. Their primary activities include targeting prominent organizations such as Acer, Ranhill Utilities, and various Thai firms, with stolen data sold on cybercriminal forums like RaidForums and BreachForums (see Figure 6). The group's last known activity was recorded in October 2023, emphasizing their preference for secrecy through secure communication channels such as Tox and private messaging. Desorden’s operational focus highlights the urgent need for regional businesses to implement robust cybersecurity defenses, including multi-factor authentication, enhanced monitoring, and employee security awareness training, to mitigate potential threats.

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt7e0320c6bfea90f1/67800a5f9f3ffe21551d5827/web-scrape-006.pngProfiling Desorden's activities offers critical insights into their methods and objectives. Manual profiling through cybercrime forums provides a detailed analysis of their data sales and targeted industries but is time-intensive and subject to challenges such as post deletions and forum volatility (see Figure 7).

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt7af5bedb986e7a17/67800a5fa1a6c7553362f739/web-scrape-007.pngAutomated profiling, on the other hand, leverages tools like Elasticsearch and Kibana to index and query forum data efficiently, offering enhanced visibility and historical insights into the group's operations across multiple forums. For example, Kibana allows analysts to quickly identify posts authored by Desorden, uncovering a range of high-profile victims across Southeast Asia (see Figure 8).

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt6c195506866ceb84/67800a5f66c787b9cfba2dd5/web-scrape-008.pngWhile automated systems provide scalability and operational security, they benefit from being complemented by human intelligence (HUMINT) to add context and nuance. This blended approach ensures a comprehensive understanding of threat actor behaviors, enabling organizations to stay ahead of evolving cyber threats posed by entities like CHAOTIC SPIDER.

The case study demonstrates the practical application of scraping operations in investigating data leaks on the cybercrime underground. The case study focuses on identifying and analyzing leaked credentials and sensitive information related to a specific organization.

The advancements in LLMs have opened up new possibilities for enhancing cybercrime intelligence, including scraping operations and data analysis. Some applications of LLMs in the context of scraping and analyzing data for cybercrime investigations include:

It's important to note that while LLMs offer promising applications in scraping operations, they also have limitations and potential biases. LLMs are trained on vast amounts of data and may sometimes generate irrelevant or incorrect information. Therefore, analysts play a crucial role in carefully reviewing and validating the outputs generated by LLMs to ensure accuracy and relevance, making them an integral part of the process.

Web scraping is a powerful and essential capability for cybercrime intelligence professionals, offering unmatched efficiency in gathering and analyzing data from the cybercrime underground. By leveraging tools like Python libraries, browser automation frameworks, and proxies, analysts can monitor forums, marketplaces, and communication platforms where cybercriminals operate. However, the work doesn’t stop there—understanding and countering anti-scraping mechanisms, integrating robust analysis tools like the ELK stack, and strategically managing operations are critical to success.

With the right tools and techniques, web scraping allows analysts to detect emerging threats, track malicious actors, and provide actionable insights to bolster organizational defenses.

Interested in mastering web scraping techniques and integrating them into your threat intelligence operations? The SANS FOR589: Cybercrime Intelligence course dives deep into these strategies, offering hands-on training and insights into navigating the ever-evolving cybercrime landscape. Register today or request a live demo to see how the FOR589 course can transform your approach to cyber intelligence.

Let’s take your scraping operation skills to the next level!

Apurv Singh Gautam is a Cybercrime Researcher currently working as a Sr. Threat Research Analyst at Cyble. He holds a Master of Science (MS) degree in Cybersecurity from Georgia Tech.

Read more about Apurv Singh Gautam