My thanks to Dave Kleiman (one of my co-authors on the original paper [8]) for reviewing and adding to this series of postings.

Drive technology is set to change in the near future with patterned media (which uses a single pre-patterned large grain per bit)1. It is this type of technology that will soon allow us to achieve "Terabit per Square Inch" recording densities.
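To put a terabit per square inch in perspective, the arithmetic below works out how much platter area a single bit gets at a given areal density. It is a rough sketch only: the function names are made up for illustration, and the square-cell pitch assumes a 1:1 bit aspect ratio, which real drives do not necessarily use.

```python
# Back-of-the-envelope bit cell size for a given areal density.
NM_PER_INCH = 25.4e6  # 1 inch = 25.4 mm = 25.4e6 nm (exact by definition)


def bit_cell_area_nm2(bits_per_square_inch: float) -> float:
    """Average platter area available to one bit, in square nanometres."""
    return NM_PER_INCH ** 2 / bits_per_square_inch


def square_pitch_nm(bits_per_square_inch: float) -> float:
    """Side of a square cell with that area (assumes a 1:1 aspect ratio)."""
    return bit_cell_area_nm2(bits_per_square_inch) ** 0.5


if __name__ == "__main__":
    for label, density in [("100 Gbit/in^2", 100e9), ("1 Tbit/in^2", 1e12)]:
        area = bit_cell_area_nm2(density)
        pitch = square_pitch_nm(density)
        print(f"{label}: {area:.0f} nm^2 per bit, about {pitch:.1f} nm pitch")
```

At 1 Tbit/in² this gives roughly 645 nm² per bit, or a square about 25 nm on a side, which leaves room for only a handful of sub-10nm grains per bit in conventional media.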

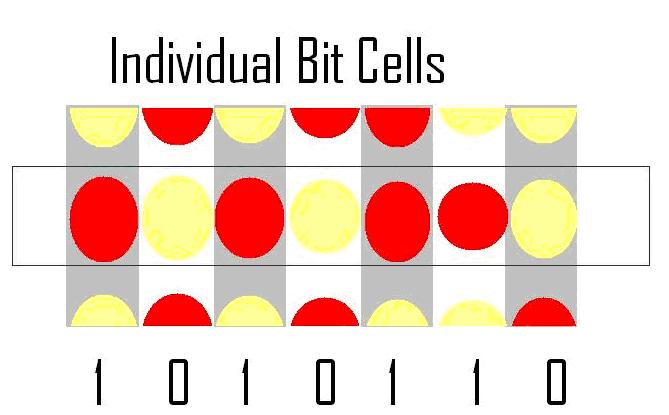

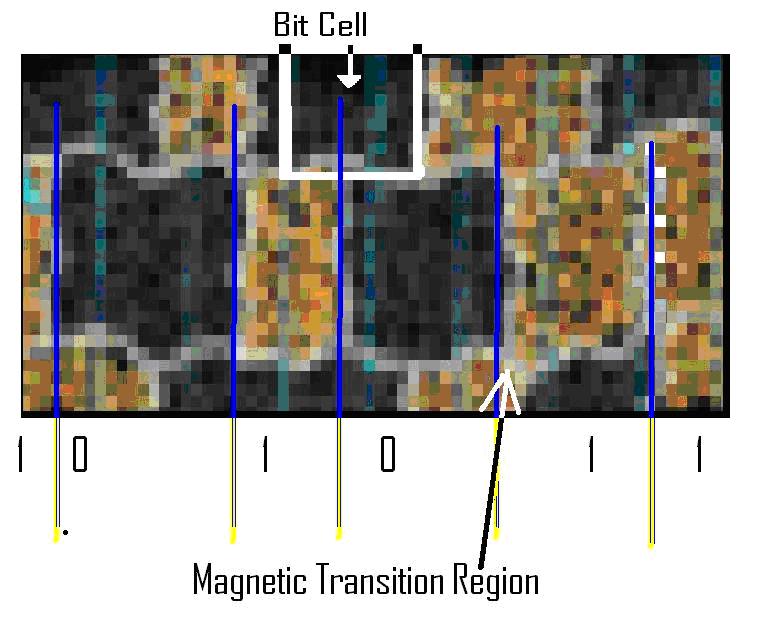

Conventional magnetic media has been made from groupings of sub-10nm magnetic grains. These grains have traditionally been able to form an individual magnetization state within a single bit cell. This means that the magnetization of the grains may be oriented "left" or "right" in well-oriented longitudinal media, or "up" or "down" where perpendicular media is used.

With the introduction of patterned media, drives will write to a single-domain "magnetic island" (see Fig. 1). Each track will, in effect, consist of one predefined single-domain magnetic island per bit written to the drive. This removes the need to use multiple grains for each bit cell. It will not only significantly increase the effective storage of a platter, but also remove the entire idea of being able to write data off track. Any data that is not focused on the single magnetic island will create an error. Consequently, there will be no room left to argue that overwritten data can be recovered by any means.

Each of these magnetic islands is comparable to the individual grains that make up the bit in existing drive technology. At present, drives use conventional multigrain media, with many small random grains per bit written to the disk platter. As can be seen in Figure 2, the individual bit cells vary in the number of grains that they contain. The magnetic flux of each of these grains is represented by its intensity in Figure 2.

The overall size and pattern of the bit cell corresponds to the flux density and hence to the value that will be read by the drive's read head. Each grain in the bit cell will vary in flux intensity. This leads to a distribution of possible values. The overlap that can occur in existing drive technology is what some people believe allows data to be recovered from a hard drive that has been wiped.

The flaw in this argument is that bit cell sizes and cumulative flux density do not strongly correlate to the prior written value. That is, a small, dense flux region that corresponds to a single bit cell that would be read as a "1" (such as the third cell from the left in Figure 2) does not reflect a prior value of "0".

PRML (and ePRML) drives (see note 1) work by taking the most likely value of the data from an analogue value. If more regions of high flux density exist, the drive reads the bit cell as a "1". In the event that the bit cell is composed of grains that mostly have a low flux value, the value is read as a "0". This is displayed in the reconstructed section of track in Figure 2.
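The simplified picture in the paragraph above can be sketched as a toy model. This is not how a real PRML read channel is implemented (actual detectors apply maximum-likelihood sequence detection to the sampled read-back signal); it only illustrates the idea that a cell made mostly of high-flux grains reads as "1" and a cell made mostly of low-flux grains reads as "0". The normalised 0 to 1 flux scale, the 0.5 threshold and the function names are all assumptions made for illustration.

```python
from statistics import mean


def read_bit_cell(grain_flux, threshold=0.5):
    """Return 1 if the cell's grains are mostly high flux, otherwise 0.

    grain_flux: flux intensity per grain on a hypothetical 0..1 scale.
    threshold:  hypothetical decision level between "low" and "high".
    """
    if not grain_flux:
        raise ValueError("a bit cell needs at least one grain")
    return 1 if mean(grain_flux) >= threshold else 0


def read_track(cells, threshold=0.5):
    """Read every bit cell along a section of track."""
    return [read_bit_cell(cell, threshold) for cell in cells]


# Four made-up bit cells with differing numbers of grains.
track = [
    [0.9, 0.8, 0.7, 0.95],       # mostly high flux
    [0.1, 0.2, 0.15],            # mostly low flux
    [0.6, 0.9, 0.4, 0.8, 0.7],   # mixed, but high on average
    [0.3, 0.1, 0.45, 0.2],       # mostly low flux
]
print(read_track(track))  # [1, 0, 1, 0]
```

Note that the read value depends only on the aggregate of the grains in the cell. Nothing in the decision carries a separate record of what the cell held before.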

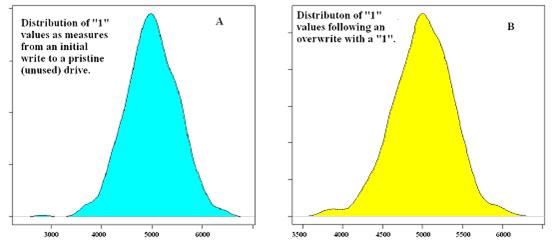

The individual strength of the bit cell can be calculated. The resulting distribution (published in [8]) is displayed in Figure 3. Statistically, there is little to differentiate these distributions. Although there is some variation following the overwrite, it is not significant and allows no more than a guess at the prior written value.

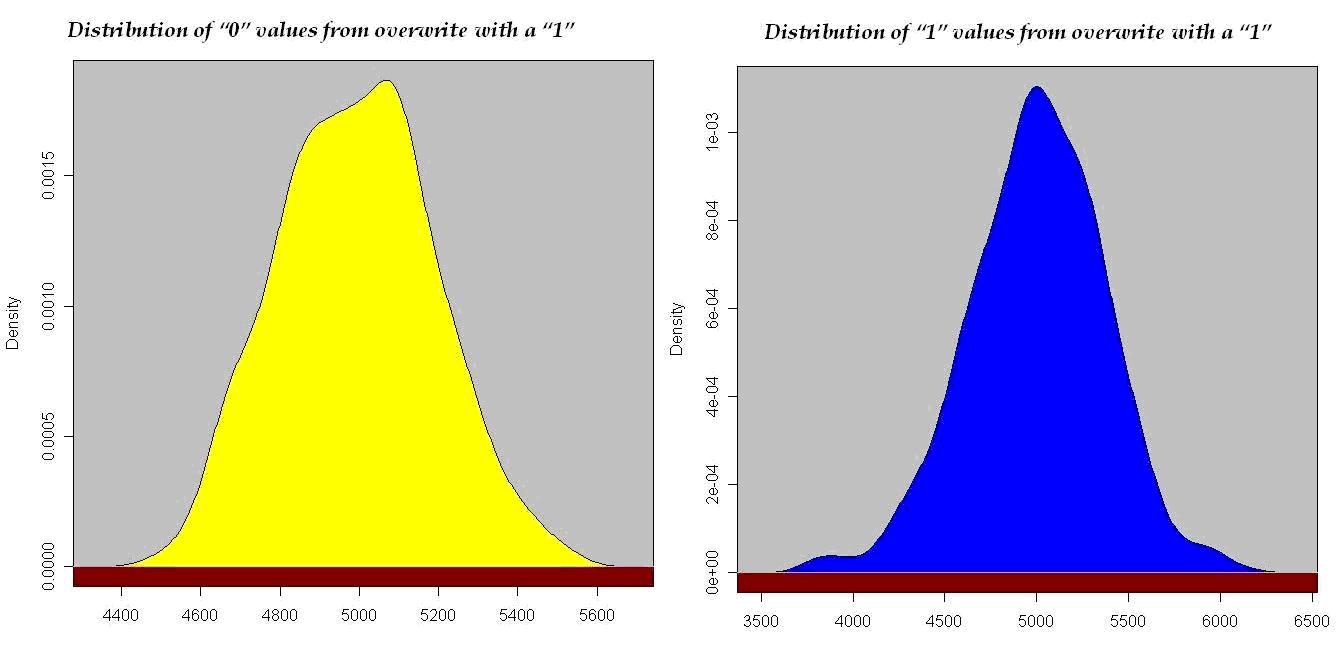

In Figure 4 we see the distribution of bits that are read as a "1". On the left is the distribution of "0" value bit cells that have been rewritten as a "1". On the right of Figure 4 is the distribution of bit cells that are read as a "1" where the prior value of the bit cell was also read as a "1". We see from these distributions that the prior value cannot be clearly determined.

From Figure 4, we see that there are instances where the extreme high-flux bit cells clearly do correlate with a prior value. Interestingly, some of the lowest density bit cells were obtained from "1" bits that had been overwritten by another "1". This is of course counter to the claim that an overwrite (see note 2) will result in a higher mean flux value for the bit cell.

This takes us back to the results of [8]. That is, the recovery of data through the analysis of an overwritten bit cell will be stochastic. This means that there is an element of chance in the recovery of data from a wiped drive. The problem with this finding comes from the nature of the distributions. There is no clear correlation between the prior and subsequently written data values. This allows the distribution of the wiped value to be read independently from that of the prior value.
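A small simulation shows why nearly identical distributions leave nothing better than a guess. The numbers here are invented for illustration and are not the measurements from [8]: flux readings for "1 over 0" and "1 over 1" cells are drawn from two Gaussian distributions whose means differ by far less than their spread, and the prior value is guessed with a simple midpoint threshold.

```python
import random


def simulate_guess_accuracy(n=20000, mean_over_0=0.98, mean_over_1=1.00,
                            sigma=0.10, seed=1):
    """Fraction of prior bits guessed correctly from the current flux reading.

    All parameters are hypothetical. mean_over_0 and mean_over_1 are the mean
    flux of a cell now holding "1" whose prior value was 0 or 1 respectively.
    """
    rng = random.Random(seed)
    threshold = (mean_over_0 + mean_over_1) / 2  # midpoint decision rule
    correct = 0
    for _ in range(n):
        prior = rng.randint(0, 1)                # unknown prior bit
        mu = mean_over_1 if prior else mean_over_0
        flux = rng.gauss(mu, sigma)              # what the analyst measures
        guess = 1 if flux >= threshold else 0
        correct += (guess == prior)
    return correct / n


if __name__ == "__main__":
    # Heavily overlapping distributions: barely better than a coin toss.
    print("overlapping:", simulate_guess_accuracy())
    # For contrast, well-separated distributions would be easy to classify.
    print("separated:  ", simulate_guess_accuracy(mean_over_0=0.5))
```

With the overlapping defaults the accuracy stays only a few points above 50%, whereas the artificially separated case is almost always right. Recovery claims implicitly assume the second situation; the distributions discussed here resemble the first.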

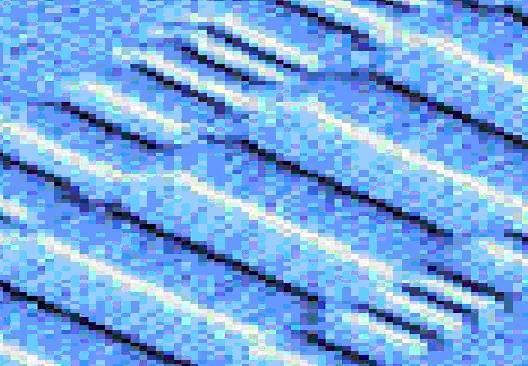

Even with older technologies that created clear data values in the tracks (see Figure 5), reading these would not provide a means to recover data after it has been overwritten. Run-length limited (RLL) drives are far simpler to analyze than a PRML or ePRML drive. The data tracks are many times larger.

Figure 5: Images of older (pre-PRML) drive technology offer little data

The values do not tell you what existed on the drive prior to the wipe; they just allow you to make a guess, bit by bit. Each time you guess, you compound the error. As recovering a single bit value has little if any forensic value, you soon find that the cumulative errors render any recovered data worthless.
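The compounding of errors is simple arithmetic. If each bit is guessed correctly with probability p, and the guesses are treated as independent (an assumption made here for simplicity), the chance of getting n consecutive bits right is p to the power n. The per-bit probabilities below are hypothetical values chosen to show the trend, not results taken from [8].

```python
def prob_all_correct(p_bit: float, n_bits: int) -> float:
    """Probability that n_bits independent guesses are all correct."""
    if not 0.0 <= p_bit <= 1.0:
        raise ValueError("p_bit must be a probability between 0 and 1")
    if n_bits < 0:
        raise ValueError("n_bits must not be negative")
    return p_bit ** n_bits


if __name__ == "__main__":
    sizes = [("1 byte", 8), ("32-bit word", 32), ("16 bytes", 128)]
    for p in (0.5, 0.6, 0.9):  # hypothetical per-bit success rates
        for label, n in sizes:
            print(f"p={p:.1f}, {label:>11}: {prob_all_correct(p, n):.3e}")
```

Even at an optimistic 90% per bit, a 16-byte string is recovered intact only about once in a million attempts, and at rates near 50% a single byte is already a long shot.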

Notes:

1. PRML stands for Partial Response, Maximum Likelihood. Drives that use this technology take the statistically determined most probable value of the cell from a distribution of possible values.

2. The overwritten value can result from either an intentional wipe of the drive or from general use. Just using a drive will result in many areas of the drive being written frequently, which has the same effect as multiple overwrites of the bit cell. In modern high density drives, it is becoming less likely that a standard user will fill the entire drive. This leads to a mixture of high use areas (such as those near the MFT, or master file table) and areas that may only be written to once in the life of the drive.

References and further reading:

1. Hirano, T.; Fan, L.S.; Gao, J.Q. & Lee, W.Y. (1998) "MEMS Milliactuator for Hard-Disk-Drive Tracking Servo," Journal of Microelectromechanical Systems, 7, 149.

2. Szmaja, W. (2006) "Recent Developments in the Imaging of Magnetic Domains," in: Advances in Imaging and Electron Physics, Ed. P.W. Hawkes, Vol. 141, pp. 175-256.

3. Szmaja, W. (1998) "Digital Image Processing System for Magnetic Domain Observation in SEM," J. Magn. Magn. Mater., 189, 353-365.

4. Terris, B. D. & Thomson, T. (2005) "Nanofabricated and Self-assembled Magnetic Structures as Data Storage Media," J. Phys., D38, R199.

5. Weller et al. (2000) "High K-u Materials Approach to 100 Gbits/in²," IEEE Trans. Magn., 36, 10.

6. Weller, D. & Moser, A. (1999) "Thermal Effect Limits in Ultrahigh-Density Magnetic Recording," IEEE Trans. Magn., 35, 4423.

7. Wood, R. (2000) "The Feasibility of Magnetic Recording at 1 Terabit per Square Inch," IEEE Trans. Magn., 36, 36.

8. Wright, C.; Kleiman, D. & Sundhar, S. R. S. (2008) "Overwriting Hard Drive Data: The Great Wiping Controversy," ICISS 2008, 243-257.

Craig Wright, GCFA Gold #0265, is an author, auditor and forensic analyst. He has nearly 30 GIAC certifications, several post-graduate degrees and is one of a very small number of people who have successfully completed the GSE exam.