Part 1 of 3

This blog post is the first of a three-part series on why automation is necessary to achieve compliance in modern cloud environments. These blog posts supplement the material presented in the accompanying free webcast series.

Read Part 2 of the blog series here.

Automation has become a buzzword in the cybersecurity community. We hear about how automation improves the lives of DevOps professionals, incident responders, and many other cyber professionals. Organizations have adopted agile, lean, and DevSecOps principles to speed up their infrastructure development, security, and management. This shift has been significant for the industry because other business segments now pay more attention to security. DevSecOps and the idea of "shifting security left" have put cybersecurity at the center of attention within organizations. When I encourage an organization to adopt DevSecOps, I simply ask them to start thinking about security earlier. It is not about tools or technologies; it is a mindset of applying security principles and configurations earlier in the lifecycle of developing and deploying resources.

Although this increased focus on security is prevalent, we have not seen the same shift in mentality applied to security compliance. Unfortunately, auditors are still leveraging the same audit techniques they were taught decades ago, resulting in inaccurate technical reports, inefficient use of internal and external personnel, and a generally negative perception of compliance. In this blog post, I will explore why traditional reporting and auditing no longer work and outline the philosophy auditors should adopt when automating compliance.

Before ByteChek, I worked at a national cybersecurity compliance firm focused on SOC 2, ISO 27001, HIPAA, and HITRUST examinations for several IaaS, PaaS, and SaaS providers. Early in my audit career, I realized that manual testing techniques such as screenshots, outdated reports, and point-in-time sampling of server configurations were not going to work in modern cloud environments. It became clear that manual testing was inefficient and did not provide enough detail on the operating effectiveness of controls. As an audit professional, I found it virtually impossible to perform a technically accurate audit of a cloud system without automation.

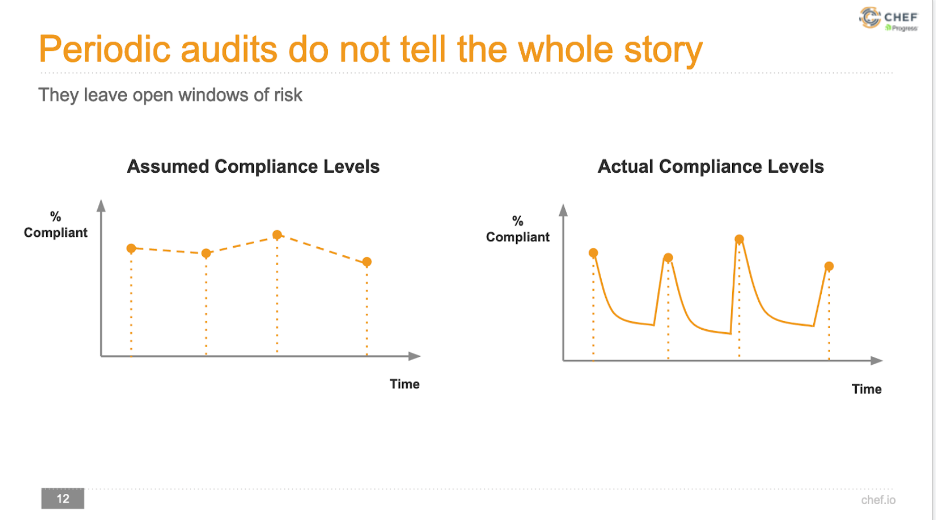

A couple of months ago, I conducted a webinar with my good friends at Chef Software. During this webinar, we discussed the future of compliance and how DevSecOps should play a role in cybersecurity compliance. The slide depicted below was one of my favorites from this webinar because it visually represents the problems with traditional audits.

Copyright Chef Software

The reports generated from a SOC 2 examination or ISO 27001 certification show that compliance was perfect during the evaluation period (typically 12 months). However, security professionals understand that actual compliance looks like the right side of the slide, where resources change constantly, mistakes are made, and things fall out of compliance. Periodic, point-in-time audits do not tell the whole story, and critical information that management needs to make crucial decisions is frequently left out. Automation solves this problem.

This webcast series is not meant to disparage formal reporting requirements in audits. I am a former auditor and still work in the compliance space. I understand that formal reports have their place; however, most business decisions require a faster input process. I've found that near-real-time visualization is more valuable to management than a traditional reporting cycle at the tactical and strategic levels.

I focused on SOC 2 attestations in my career, and my company currently focuses on SOC 2 examinations. I believe in the SOC 2 framework and think SOC 2 reports are valuable. However, they are also a clear example of why management cannot rely on traditional reporting to understand the compliance posture of their organization. SOC 2 is a security reporting framework developed by the American Institute of Certified Public Accountants. It is designed to give service organizations such as SaaS companies or other service providers a format to have a third-party auditor (a CPA) attest to their security practices and processes.

Organizations undergoing SOC 2 examinations can opt for a SOC 2 Type 1 or a SOC 2 Type 2 report. A SOC 2 Type 2 is the most common report you'll see, and it is an audit that organizations undergo annually. This report is backward-looking, which means the auditor is evaluating controls that operated in the past. For example, an organization could have a report with a review period that runs from January 2020 to December 2020. This report is used throughout 2021 to prove to customers, prospects, leaders, board members, and other interested parties that the organization has appropriate security controls in place and operating effectively. While this report is helpful to sales and does assist with growing the business, how helpful is it to management? Senior technology executives need up-to-date, near-real-time data that reduces their uncertainty and gives them actionable measurements promptly. Security is not a point-in-time activity, and compliance should follow suit.

In SANS SEC557: Continuous Automation for Enterprise & Cloud Compliance, we have a simple philosophy. It is the job of compliance professionals to reduce the uncertainty of management as they try to make decisions. We are not focused on achieving a clean report or the latest three-letter certification. Automating compliance helps management understand the risk in their environment and enables leaders to make impactful and effective decisions. We will examine a few ways to automate compliance during this three-part webcast series, and they all come back to this overall philosophy. Join us in SEC557 for an in-depth course on how to leverage the principles below to automate compliance:

There is an abundance of information available to auditors and compliance professionals from tools already installed on the enterprise's systems. This data can be easily accessed by "living off the land" and leveraging tools and technologies already in place at your organization. By using tools that your administrators already use, you can work directly with those individuals and produce information that helps reduce management's uncertainty through your compliance activities. In addition, leveraging existing tooling minimizes the effort required from both the compliance professionals and the operators responsible for collecting the evidence.

During this webcast series and in SEC557, we leverage PowerShell for our measurement scripts. We chose PowerShell for a reason. It is a cross-platform, object-oriented scripting language that allows us to interact with and manipulate .NET objects, which makes it pretty powerful for compliance. In addition, PowerShell can natively handle different structured data formats like CSV, XML, and JSON. The great thing for compliance professionals is that if your organization is using a Windows environment, PowerShell is already there for you to use immediately. On other operating systems, administrators can install it.
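As a rough illustration of the structured-data handling described above, the sketch below parses a small CSV into objects and re-emits it as JSON. The column names and values are made up for the example; `ConvertFrom-Csv`, `ConvertTo-Json`, and `ConvertFrom-Json` are standard PowerShell cmdlets.

```powershell
# Hypothetical inventory data; the columns and values are assumptions for illustration.
$csv = @'
Server,Os,PatchCount
server01,Windows Server 2019,3
server02,Windows Server 2016,4
'@

# Each CSV row becomes an object with Server, Os, and PatchCount properties.
$servers = $csv | ConvertFrom-Csv

# CSV values arrive as strings, so cast before doing arithmetic.
$totalPatches = 0
foreach ($s in $servers) { $totalPatches += [int]$s.PatchCount }

# The same objects can be serialized to JSON and read back again.
$json = $servers | ConvertTo-Json
$fromJson = $json | ConvertFrom-Json

$totalPatches
$fromJson[1].Server
```

Because every step passes objects rather than text, the same pipeline works whether the evidence started life as CSV, JSON, or the output of another cmdlet.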

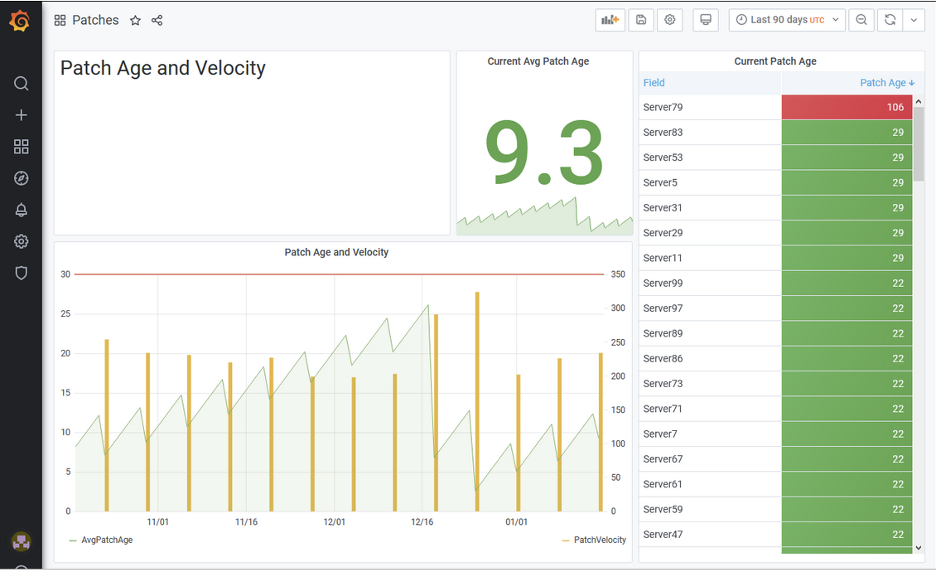

Leveraging ubiquitous tools such as PowerShell is the first step, but we must also display that information visually for management to make tactical and strategic decisions. An example of visualizing essential data that has been gathered leveraging Powershell scripts and Grafana is below:

This dashboard is one we create during SEC557. In the course, we build a PowerShell script that ingests a CSV containing a year's worth of patch data for 100 servers in our environment. We interact with this data as PowerShell objects and ultimately send the data to Grafana to display visually for management. This visualization hits on a few critical patching concepts that are often missed in compliance examinations, which we will examine below.

The title of this dashboard is patch age and patch velocity. Patch age is the number of days that have elapsed since the last time a patch was installed on a system. A low patch age does not guarantee that the system is fully patched. Still, it can provide compliance professionals and management with quick data on whether or not patching activity has taken place recently.

Patch Age:

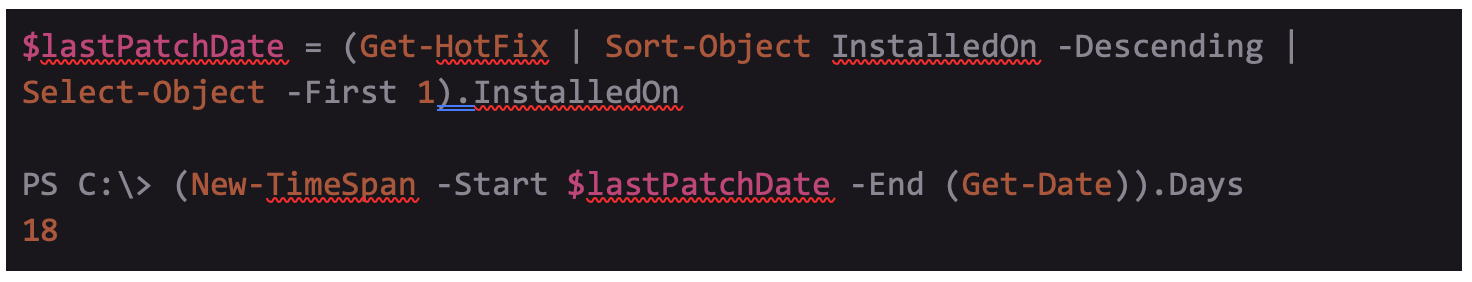

This straightforward script stores the date of the latest installed patch on this Windows system, as returned by the Get-HotFix cmdlet, in the $lastPatchDate variable and then calculates the number of days between today (Get-Date cmdlet) and that date. During SEC557, we build a similar script that calculates the mean patch age for all 100 servers based on 12 months of patch data.
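Since the script above appears only as a screenshot, here is a minimal sketch of the same calculation, not the exact course script. The function name `Get-PatchAge`, its parameters, and the sample hotfix IDs are hypothetical; wrapping the logic in a function lets it run against sample objects on any platform, while on a Windows host you would pass it the output of `Get-HotFix`.

```powershell
function Get-PatchAge {
    param(
        [Parameter(Mandatory)] [object[]] $HotFix,   # objects with an InstalledOn date
        [datetime] $AsOf = (Get-Date)                # defaults to today
    )
    # Some hotfix records have no InstalledOn value, so drop those first,
    # then take the most recent installation date.
    $lastPatchDate = $HotFix |
        Where-Object { $_.InstalledOn } |
        Sort-Object -Property InstalledOn -Descending |
        Select-Object -First 1 -ExpandProperty InstalledOn

    # Whole days elapsed between the latest patch and the reference date.
    (New-TimeSpan -Start $lastPatchDate -End $AsOf).Days
}

# On a Windows host: Get-PatchAge -HotFix (Get-HotFix)
# Sample objects (placeholder IDs) so the sketch also runs elsewhere:
$sample = @(
    [pscustomobject]@{ HotFixID = 'KB0000001'; InstalledOn = [datetime]'2020-05-11' }
    [pscustomobject]@{ HotFixID = 'KB0000002'; InstalledOn = [datetime]'2021-01-06' }
)
$patchAge = Get-PatchAge -HotFix $sample -AsOf ([datetime]'2021-01-15')
$patchAge   # 9 days between 2021-01-06 and 2021-01-15
```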

On the above Grafana graph, we see that the mean time since the organization's 100 servers were patched is just over nine days. Nine days is fair to expect in a Windows environment; anything under 30 days makes sense since Microsoft releases patches every month.
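A fleet-wide mean like this could be computed along the following lines. This is a sketch under stated assumptions: the CSV layout (one row per installed patch, with `Server`, `HotFixID`, and `InstalledOn` columns), the sample rows, and the fixed reference date are all invented for illustration and are not the course data set.

```powershell
# Hypothetical patch history: one row per installed patch.
$csv = @'
Server,HotFixID,InstalledOn
server01,KB0000001,2020-12-20
server01,KB0000002,2021-01-10
server02,KB0000003,2021-01-04
server03,KB0000004,2021-01-01
'@
$asOf = [datetime]'2021-01-15'   # fixed reference date so the result is reproducible

# Patch age per server: days since that server's most recent patch.
$patchAges = $csv | ConvertFrom-Csv |
    Group-Object -Property Server |
    ForEach-Object {
        $latest = $_.Group |
            ForEach-Object { [datetime]$_.InstalledOn } |
            Sort-Object -Descending |
            Select-Object -First 1
        [pscustomobject]@{
            Server   = $_.Name
            PatchAge = (New-TimeSpan -Start $latest -End $asOf).Days
        }
    }

# Mean patch age across all servers (5, 11, and 14 days here).
$meanPatchAge = ($patchAges | Measure-Object -Property PatchAge -Average).Average
$meanPatchAge   # 10
```

Keeping the per-server ages in `$patchAges` before averaging means the same data can feed both the summary number and any per-server follow-up.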

Patch velocity measures how many patches were applied on each date when the host was patched. Thus, patch velocity is a great way to assess how frequently patching is happening in the environment.

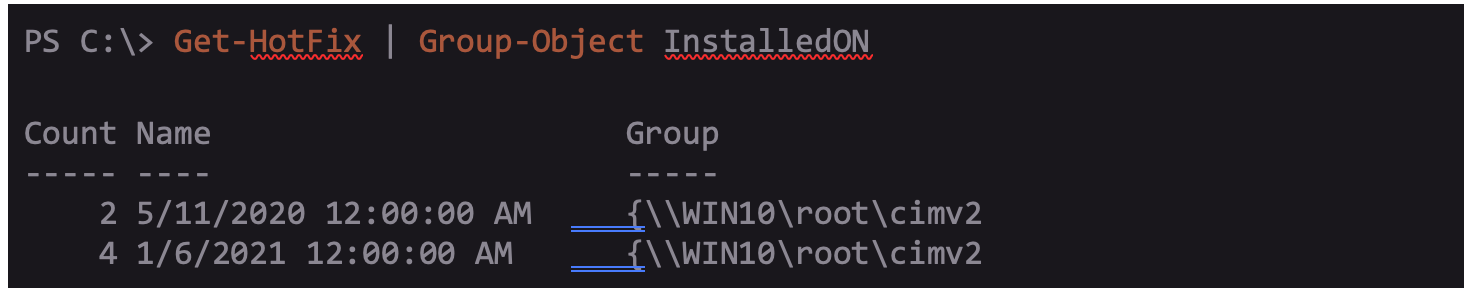

Patch Velocity:

The simple script above shows how we can run Get-HotFix and group the results by their "InstalledOn" property. This shows me that on 5/11/2020 there were two patches installed on this system and on 1/6/2021 there were four patches installed on this system. During SEC557, we build a script that calculates the overall patch velocity for all 100 servers over the course of 12 months.
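The grouping step can be sketched as follows. As with patch age, this is an approximation of the screenshot rather than the course script; `Get-PatchVelocity` is a hypothetical helper name, and the sample objects simply mirror the two dates and counts mentioned above.

```powershell
function Get-PatchVelocity {
    param([Parameter(Mandatory)] [object[]] $HotFix)   # objects with an InstalledOn date

    $HotFix |
        Where-Object { $_.InstalledOn } |               # skip records with no install date
        Group-Object -Property { $_.InstalledOn.Date } |
        ForEach-Object {
            [pscustomobject]@{
                InstalledOn = $_.Group[0].InstalledOn.Date
                Patches     = $_.Count                  # patches installed on that date
            }
        } |
        Sort-Object -Property InstalledOn
}

# On a Windows host: Get-PatchVelocity -HotFix (Get-HotFix)
# Sample objects mirroring the dates discussed in the text:
$sample = @(
    '2020-05-11', '2020-05-11',
    '2021-01-06', '2021-01-06', '2021-01-06', '2021-01-06'
) | ForEach-Object { [pscustomobject]@{ InstalledOn = [datetime]$_ } }

$velocity = Get-PatchVelocity -HotFix $sample
$velocity   # two rows: 2 patches on 2020-05-11, 4 patches on 2021-01-06
```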

The above bar chart shows the average patch age over time (green area of the graph) with the number of patches installed on each date overlaid on it (yellow bars). This shows the patch velocity and helps management see the compliance history of how often patching is being performed and at what scale.

Unfortunately, what I often see in compliance assessments is not a patch velocity graph that looks like this chart. I've run into environments where no patching was performed until two weeks before I, the auditor, showed up. Without an automation script, I would not be able to see this data. Based on seeing a few sampled servers, I could falsely assume that this organization has a robust patch management strategy and not understand the details behind the evidence.

Patch age and patch velocity provide strategic information that is very useful to senior leaders and management when making decisions. Any CIO or CISO who sees this chart would be happy with the overall patch strategy and measurements. However, there is tactical information we can provide to system administrators as well. For example, because we used PowerShell to assess all 100 servers individually, we were able to identify an individual server (server79 in the above screenshot) that has not been patched in 106 days. This tactical information helps a system administrator investigate why this system fell out of compliance with the overall patch management program. When we leverage automation, we can gather valuable data at both the tactical and strategic levels.

Compliance and audit professionals must embrace automation to keep pace with modern technology environments. I recommend that all professionals responsible for the compliance duties of their enterprise focus on leveraging existing tools to extract data, load that data into a database, and visualize the information to help management reduce uncertainty.
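Surfacing an outlier like server79 can be as simple as filtering per-server patch ages against a threshold. In the sketch below, the 30-day threshold is an assumption based on the monthly patch cycle mentioned earlier, and the rows other than server79 are invented for illustration.

```powershell
# Per-server patch ages; server79's value comes from the text, the others are made up.
$patchAges = @(
    [pscustomobject]@{ Server = 'server01'; PatchAge = 9 }
    [pscustomobject]@{ Server = 'server79'; PatchAge = 106 }
    [pscustomobject]@{ Server = 'server80'; PatchAge = 12 }
)

# Assumed policy threshold: anything unpatched for more than 30 days needs a look.
$maxPatchAgeDays = 30

# @() keeps the result an array even when only one server matches.
$stale = @(
    $patchAges |
        Where-Object { $_.PatchAge -gt $maxPatchAgeDays } |
        Sort-Object -Property PatchAge -Descending
)

$stale | ForEach-Object { "$($_.Server) has not been patched in $($_.PatchAge) days" }
```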

AJ Yawn is currently the Director of GRC Engineering at Aquia, a digital services firm specializing in cloud infrastructure, cybersecurity, and compliance automation.
