In this blog post, we discuss the acquisition of AWS CloudTrail logs stored in S3 buckets.

As an analyst or incident responder operating in a cloud environment, you will frequently perform log analysis to uncover and investigate malicious activity. The challenge is that there are many different cloud vendors and software-as-a-service (SaaS) providers, and each one has several methods of extracting logs. It’s important to understand where logs are and how they can be extracted so you are prepared to respond to incidents.

This five-part blog series will cover log extraction methods for Microsoft 365, Azure, Amazon Web Services (AWS), Google Workspace, and Google Cloud. Once these logs are extracted, your tools of choice can be used to analyze their contents.

This first blog post will focus on AWS, and specifically on how we can download CloudTrail logs. While other log sources in AWS may be relevant to your investigation for specific services, CloudTrail is one of the most valuable log sources available in AWS. CloudTrail records AWS API calls at the management event level by default, and at the data event level if configured. In these logs, we can observe a variety of event types, such as S3 bucket deletions, IAM user modifications, and VM creations.

CloudTrail is turned on by default and will store logs in the CloudTrail portal for 90 days. To extend the retention of the logs past 90 days, you need to configure a trail to send the logs to an S3 bucket. This is outside the scope of this blog post but instructions on how to do this can be found in the AWS CloudTrail documentation. Another point of discussion regarding CloudTrail configurations is that by default only Management events (API calls that create/delete/start or stop a service or make a call into an existing service that is running) are recorded. If you want to obtain Data events, such as API calls to retrieve/store/query S3 data, calls to Lambda functions, and Database API calls, you must create a trail and enable optional events.

We go into depth on the details of what CloudTrail logs contain and their forensic value in FOR509: Enterprise Cloud Forensics and Incident Response. Here we are going to discuss the log extraction methods that can be used to obtain the logs for the purpose of analysis. Specifically, we’re going to look at retrieving them via the web console, the CLI, and API-based tools.

Within the web console, you can see the CloudTrail logs and apply various filtering. However, this export is limited to 90 days and 50 events at a time. Given these limitations, we will focus our discussion on extracting logs stored in S3 buckets (assuming that the appropriate trail was properly configured).

You may run into a situation where CloudTrail buckets weren’t configured prior to an incident. In this situation, course author David Cowen has written a script that will leverage the API and download all available CloudTrail logs for you.
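When no trail was delivering to S3, the 90-day event history can still be reached through the CloudTrail LookupEvents API. The sketch below is not the script mentioned above; it is a minimal illustration built on the `aws cloudtrail lookup-events` CLI command, which returns events for one region at a time. The function name `export_event_history`, the `AWS` override variable, and the sample region and timestamps are assumptions made for illustration.

```shell
# Command used to reach AWS; set AWS=echo to preview the call without running it.
AWS="${AWS:-aws}"

# Hypothetical helper: dump the event history for one region and time window
# as JSON on stdout. Arguments: region, start time, end time (ISO 8601).
export_event_history() {
  local region="$1" start="$2" end="$3"
  $AWS cloudtrail lookup-events \
    --region "$region" \
    --start-time "$start" \
    --end-time "$end" \
    --output json
}

# Example (placeholder values), repeated for each region in scope:
# export_event_history us-east-1 2023-02-01T00:00:00Z 2023-02-02T00:00:00Z > us-east-1.json
```

Keeping each call to a single region and a narrow time window keeps the individual exports manageable and makes it easier to record exactly what was collected.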

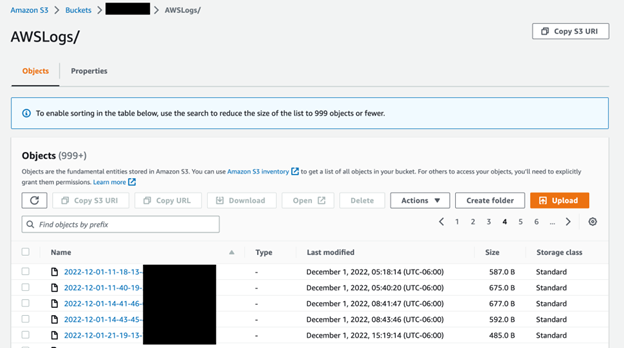

One way to obtain the logs is to simply access them via the S3 bucket in the AWS Web Console. To do so, log into the AWS console and navigate to the S3 Management Console (via the search bar or services list). From the list of buckets, select the bucket that you configured your trail to ship logs to. Inside the bucket you will see a list of all of the log files that have been saved, each with a name indicating its creation date. The screenshot provides an example of this.

This method, however, comes with some major limitations and is only feasible in certain use cases. Unfortunately, the download action is only available for one file at a time, so each file you want would need to be individually selected and downloaded. This is not ideal when working with even dozens of files, and it is infeasible when a bucket like the one shown above contains hundreds of thousands of files. Additionally, since new files are generated multiple times per day, depending on the amount of activity, you would need to know a very specific time range for the activity you are trying to review to know which file to download.

Another limitation, as shown by the information box at the top, is that sorting is not available if there are over 999 files. Since the default sorting is earliest to latest, when you exceed this limit, you would need to click through the pages until you reach the end to retrieve the most recent logs. Given these limitations, the preferred method for obtaining CloudTrail logs from an S3 bucket is through the CLI or API.

AWS provides a command-line interface (CLI) for interacting with your resources via a command named “aws”. The first step is to configure the prerequisites for using the AWS CLI, which include IAM roles and access keys. Next, install the CLI on the system you intend to use for extracting the logs by following the installation steps provided by AWS. Lastly, configure the CLI so that it can connect to your AWS environment using your credentials.
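A quick readiness check for the setup described above might look like the sketch below. `aws configure` stores an access key, secret key, and default region under a named profile, and `aws sts get-caller-identity` reports which account and principal those credentials resolve to. The helper name `verify_cli_identity`, the `AWS` override variable, and the profile name `ir-readonly` are hypothetical.

```shell
# Command used to reach AWS; set AWS=echo to preview the call without running it.
AWS="${AWS:-aws}"

# One-time interactive setup for a named profile (placeholder profile name):
# aws configure --profile ir-readonly

# Hypothetical helper: confirm the CLI is installed and show which identity
# the given profile authenticates as. Argument: profile name.
verify_cli_identity() {
  local profile="$1"
  if ! command -v "$AWS" >/dev/null 2>&1; then
    echo "AWS CLI not found on PATH" >&2
    return 1
  fi
  $AWS sts get-caller-identity --profile "$profile" --output json
}

# Example (placeholder profile name):
# verify_cli_identity ir-readonly
```

Confirming the identity before downloading anything helps avoid pulling logs from the wrong account when several profiles are configured on the same workstation.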

With AWS CLI ready to use, we can use simple commands to download the logs we need. Here you have two possible commands you can choose from: cp or sync.

The commands operate in a very similar fashion but approach the task in slightly different ways. Let’s start by looking at the cp command. The full command line associated with copying logs out of S3 using the AWS CLI is:

aws s3 cp s3://<name of log bucket>/AWSLogs . --recursive

Executing this command will result in an output in your terminal similar to that in the screenshot below. Each file within the S3 bucket folder will be downloaded and saved locally to the specified destination folder.

![image 2](https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt42dc03c767fdc42e/63e6b0d90841b256b996b346/Blog_2.png)

Here is a breakdown of what each part of this command line means:

| Command/Parameter | Description |
| --- | --- |
| aws | The name of the AWS CLI tool. |
| s3 | Specifies that the operation is associated with an S3 resource. |
| cp | Copies a local file or S3 object to another location locally or in S3. |
| s3://<name of log bucket>/AWSLogs | File source, specifically the path to the S3 bucket including the bucket name and folder name. This path will vary depending on what you name your bucket when setting up the trail. |
| . | The destination to copy the files to. In this case “.” represents the current working directory, but any local folder or S3 location could be used. |
| --recursive | Specifies to copy the contents of all subfolders as well. |

Using this command you can replace the source and destination parameters to tailor it to your specific use case. Additional command line options for the cp command can be found in the AWS CLI documentation.
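As one illustration of tailoring the source parameter, the sketch below narrows the copy to a single account, region, and day. It assumes the standard CloudTrail layout of `AWSLogs/<account ID>/CloudTrail/<region>/<YYYY>/<MM>/<DD>/` with no custom prefix and no organization trail; confirm the layout in your own bucket first. The helper names `cloudtrail_prefix` and `copy_day`, the `AWS` override variable, and all sample values are hypothetical. The `--dryrun` option of `aws s3 cp` lists what would be copied without downloading anything.

```shell
# Command used to reach AWS; set AWS=echo to preview the call without running it.
AWS="${AWS:-aws}"

# Hypothetical helper: build the S3 prefix for one account, region, and day.
# Arguments: bucket, account ID, region, date as YYYY-MM-DD.
cloudtrail_prefix() {
  local bucket="$1" account="$2" region="$3" day="$4"
  local day_path
  day_path=$(echo "$day" | sed 's|-|/|g')   # 2023-02-10 becomes 2023/02/10
  printf 's3://%s/AWSLogs/%s/CloudTrail/%s/%s/\n' \
    "$bucket" "$account" "$region" "$day_path"
}

# Hypothetical helper: recursively copy everything under a source prefix.
# Arguments: local destination, S3 source, then any extra flags (e.g. --dryrun).
copy_day() {
  local dest="$1" src="$2"
  shift 2
  $AWS s3 cp "$src" "$dest" --recursive "$@"
}

# Example (placeholder values): preview first, then rerun without --dryrun.
# copy_day ./case-logs "$(cloudtrail_prefix my-log-bucket 111122223333 us-east-1 2023-02-10)" --dryrun
```

Scoping the copy to the days of interest avoids downloading an entire bucket when the incident window is already known.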

The other command capable of performing a download of logs from the S3 bucket is sync. In the case where you have not previously downloaded logs from that bucket, it will operate identically to cp. If you’ve previously downloaded logs from a bucket via the CLI, sync will only download files that have been created or updated since you last performed a sync. Additionally, sync acts recursively by default so it does not require you to specify the --recursive option. Everything else about the command operates the same:

aws s3 sync s3://<name of log bucket>/AWSLogs .

For more advanced parameters available with the sync command, see the sync AWS CLI documentation.
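Because sync can be rerun as new logs arrive during an ongoing incident, it can help to wrap it and check how many files are present locally after each run. The following is a minimal sketch under that assumption; the helper names `sync_logs` and `count_logs`, the `AWS` override variable, and the bucket and folder names are hypothetical. `--dryrun` is an existing option of `aws s3 sync`.

```shell
# Command used to reach AWS; set AWS=echo to preview the call without running it.
AWS="${AWS:-aws}"

# Hypothetical helper: sync the AWSLogs folder of a bucket to a local folder.
# Arguments: bucket name, local destination, then any extra flags (e.g. --dryrun).
sync_logs() {
  local bucket="$1" dest="$2"
  shift 2
  $AWS s3 sync "s3://$bucket/AWSLogs" "$dest" "$@"
}

# Hypothetical helper: count every .json.gz file under a local folder.
count_logs() {
  find "$1" -type f -name '*.json.gz' | wc -l | tr -d ' '
}

# Example (placeholder values):
# sync_logs my-log-bucket ./case-logs --dryrun   # preview what would transfer
# sync_logs my-log-bucket ./case-logs            # perform the download
# count_logs ./case-logs                         # number of files now on disk
```

Comparing the count before and after a rerun gives a simple record of how many new files each sync brought down.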

If you want to get around the limitations of the web console but without configuring the CLI prerequisites, multiple free software tools have been developed that provide GUI-based interfaces for exploring your S3 buckets. Provided with your credentials, these tools will use the API to display the folders and files in your S3 bucket. Additionally, many of these tools support multi-threaded downloads for accelerated transfer.

These are freeware tools from third-party providers and should be used at your own risk.

In this blog post, we discussed the acquisition of AWS CloudTrail logs stored in S3 buckets. Specifically, we provided three different methods of obtaining the logs: the web console, the CLI, and API-based tools. Regardless of the method most suited for your use case, extracting these logs will allow for a more in-depth analysis. If you want to learn more about the analysis of CloudTrail logs from a digital forensics and incident response perspective, check out FOR509: Enterprise Cloud Forensics and Incident Response.

In the next blog post in this series we will look at how to extract logs from Google Cloud, another one of the major cloud providers covered in FOR509.


Megan Roddie-Fonseca is a Senior Security Engineer at Datadog, SANS DFIR faculty, and co-author of FOR509. She holds two master’s degrees, serves as CFO of Mental Health Hackers, and is a strong advocate for hands-on cloud forensics training and mental wellness.