At approximately 22:50 CDT on 20101029 I responded to an event involving a user who had received an email from a friend with a link to some kids' games. The user said he tried to play the games, but that nothing happened. A few minutes later, the user saw a strange pop-up message asking to send an error report about regwin.exe to Microsoft.

I opened a command prompt on the system, ran netstat and saw an established connection to a host on a different network on port 443. The process id belonged to a process named kids_games.exe.

I grabbed a copy of Mandiant's Memoryze, collected a memory image from the system, and copied it to my laptop for offline analysis using Audit Viewer.

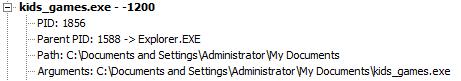

Audit Viewer gave the kids_games.exe process a very high Malware Rating Index (see Figure 1), so I decided there was probably more at play here than kids games. I took the suspect system offline and began gathering time line evidence.

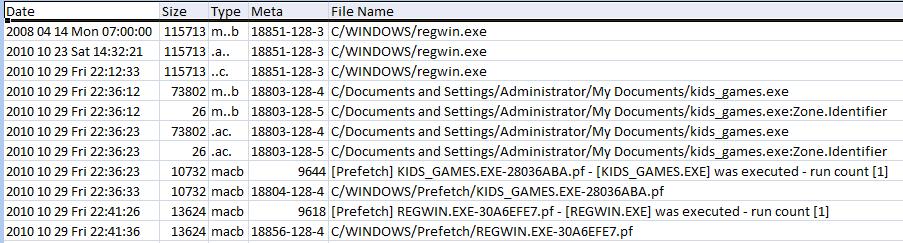

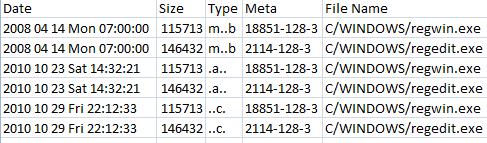

Within 20 minutes of taking the system offline, I was staring at the following timeline data (see Figure 2):

Line one of the timeline data was disconcerting. I suspected regwin.exe hadn't been created on 20080414 because this system wasn't that old. The file could have been moved onto this volume from another NTFS volume, causing the modification and born time stamps to be inherited from the original volume (see Figure 4 for time stamp update rules). Or it could have inherited these time stamps from the installation media. However, behavioral analysis of the binary showed that it was malicious, so that likely ruled out time stamp inheritance from the installation media archives.

Further, look at the Prefetch entry for regwin.exe (second entry from the bottom in Figure 2). If regwin.exe had been on this system since the date of installation, was it possible that it hadn't been run until today? It's very possible. I've also investigated systems before where binaries had been run previously, but were removed from the Prefetch. Remember, there's only room for 128 entries; perhaps regwin.exe had been FIFO'd.

Given that this was an NTFS file system, I knew I could pull the time stamps from the $FILE_NAME attribute and compare them to the ones I was looking at from NTFS' $STANDARD_INFORMATION attribute. Unfortunately, getting $FILE_NAME time stamps is not as straightforward as getting those from $STANDARD_INFORMATION. However, there are tools available that can acquire them. One is David Kovar's analyzeMFT.py; Harlan Carvey also has a Perl script, which he shared with Chris Pogue, that will pull them out.

Those who follow my babbling on Twitter know I've been looking for a solution that would pull $FILE_NAME time stamps and put them into bodyfile format so they can be added to the overall time line for analysis. Given enough time, I could have coded up my own tool. Fortunately for me, Mark McKinnon and Kovar both spent time working on a solution. Kovar got very close and, given time, I'm sure he'll have a working solution in analyzeMFT if he decides it's worth pursuing.

McKinnon has a proof of concept tool called mft_parser that comes in both a GUI and a CLI version; the latter is convenient for scripting. As of this writing, mft_parser is run against the MFT from the suspect system, and the syntax looks like this:

mft_parser_cl <MFT> <db> <bodyfile> <mount_point>

The "db" argument is the name of a sqlite database that the tool creates, "bodyfile" is similar to the bodyfile that fls from Brian Carrier's The Sleuth Kit produces, except that it will also include time stamps from NTFS' $FILE_NAME attribute. The "mount_point" argument is prefixed to the paths in the bodyfile, so if you're running this tool against a drive image that was drive C, you can provide "C" as an argument.
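For readers who haven't looked inside a bodyfile, here is a minimal Python sketch of how one line breaks down. It assumes the pipe-delimited layout used by The Sleuth Kit 3.x (MD5, name, inode, mode, UID, GID, size, atime, mtime, ctime, crtime) with times as Unix epoch seconds. The names `BodyEntry`, `parse_bodyfile_line` and `to_utc` are mine, and the sample line is made up for illustration; it is not taken from the case data.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class BodyEntry:
    name: str
    inode: str   # fls writes entry-type-id, e.g. 12345-128-3
    size: int
    atime: int   # last access
    mtime: int   # last modification
    ctime: int   # metadata (MFT entry) change
    crtime: int  # born / creation


def parse_bodyfile_line(line: str) -> BodyEntry:
    """Parse one TSK 3.x style bodyfile line (assumed 11 pipe-delimited fields)."""
    md5_and_rest = line.rstrip("\n").split("|", 1)
    if len(md5_and_rest) != 2:
        raise ValueError("not a bodyfile line")
    # Split from the right so a '|' inside the file name does not shift fields.
    fields = md5_and_rest[1].rsplit("|", 9)
    if len(fields) != 10:
        raise ValueError("expected 11 pipe-delimited fields")
    name, inode, _mode, _uid, _gid, size, atime, mtime, ctime, crtime = fields
    return BodyEntry(name, inode, int(size),
                     int(atime), int(mtime), int(ctime), int(crtime))


def to_utc(epoch_seconds: int) -> str:
    """Render epoch seconds as a UTC string for eyeballing."""
    stamp = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)
    return stamp.strftime("%Y-%m-%d %H:%M:%S")


# Hypothetical sample line, for illustration only.
SAMPLE = ("0|C:/WINDOWS/system32/regwin.exe|12345-128-3|r/rrwxrwxrwx|0|0|40960|"
          "1288410600|1208174400|1288410600|1208174400")

if __name__ == "__main__":
    entry = parse_bodyfile_line(SAMPLE)
    print(entry.name, "born", to_utc(entry.crtime))
```

The field order is the part to double check against whatever version of the tools you are running, since older bodyfile formats differ.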

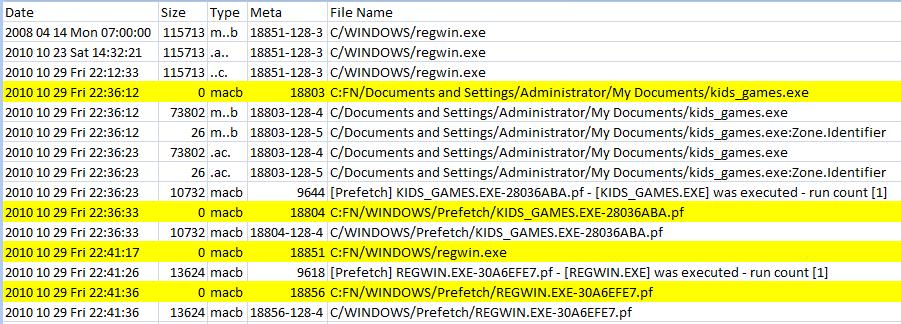

After using McKinnon's mft_parser, I recreated my timeline (see Figure 3):

I have highlighted the new information in Figure 3. Notice that McKinnon's tool labels the "$FILE_NAME" time stamps with "FN" at the beginning of the path. What do the "$FILE_NAME" time stamps tell us that the "$STANDARD_INFO" time stamps don't?

Carrier's File System Forensic Analysis book says, "Windows does not typically update this set of temporal values like it does with those in the "$STANDARD_INFORMATION" attribute, and they frequently correspond to when the file was created, moved, or renamed," (page 318).

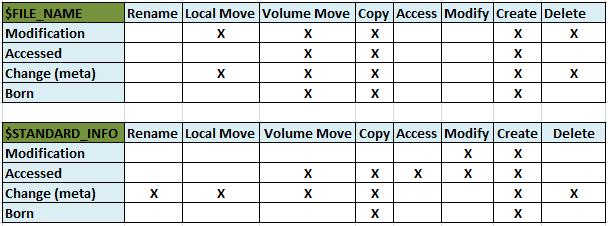

Rob Lee has done some research on time stamp modification for different types of activity performed via the GUI for both the "$STANDARD_INFORMATION" and "$FILE_NAME" attributes. I have simplified his charts in Figure 4:

Rows in the charts represent the four different types of NTFS time stamps and the columns represent different activities performed on a file via the Windows GUI. Cells containing the letter X indicate that the given time stamp will be updated for the given action. (Note that on Vista and later, last access time stamp updates are disabled by default.)

Given these rules and the information in Figure 3, it appears that this incident has the hallmarks of time stamp manipulation. This accounts for the differences in regwin.exe's modification, born, access and metadata change times in its "$STANDARD_INFORMATION" and "$FILE_NAME" attributes. Where did the time stamps in regwin.exe's "$STANDARD_INFORMATION" attribute come from? A little careful filtering and I was able to match them up with a number of possibilities, but I suspect the attacker set them to match regedit.exe's time stamps, see Figure 5:
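The comparison I did by eye can be roughed out in Python. The sketch below flags files whose "$STANDARD_INFORMATION" born time is earlier than their "$FILE_NAME" born time, which is one common indicator of backdating, not proof of it (a file moved from another volume can show the same pattern). It assumes both inputs use the TSK 3.x bodyfile layout and that entries can be paired on the MFT entry number; I have not verified that mft_parser's output matches this layout exactly. The function names are hypothetical and the sample values in the usage note are invented.

```python
def entry_number(inode_field):
    """Return the MFT entry number from '12345-128-3' or a plain '12345'."""
    return inode_field.split("-")[0]


def born_times(bodyfile_lines):
    """Map MFT entry number -> (path, born time in epoch seconds)."""
    born = {}
    for line in bodyfile_lines:
        head = line.rstrip("\n").split("|", 1)
        if len(head) != 2:
            continue  # blank or malformed line
        fields = head[1].rsplit("|", 9)
        if len(fields) != 10 or not fields[9].isdigit():
            continue
        name, inode, crtime = fields[0], fields[1], int(fields[9])
        # Keep the first line seen per entry (fls may emit extra lines
        # for alternate data streams on the same entry).
        born.setdefault(entry_number(inode), (name, crtime))
    return born


def flag_backdated(si_lines, fn_lines):
    """Return (path, si_born, fn_born) where $SI born precedes $FN born."""
    si = born_times(si_lines)
    fn = born_times(fn_lines)
    hits = []
    for entry, (name, si_born) in si.items():
        if entry in fn and si_born < fn[entry][1]:
            hits.append((name, si_born, fn[entry][1]))
    return hits
```

Fed the fls bodyfile as `si_lines` and the "$FILE_NAME" bodyfile as `fn_lines`, a file like regwin.exe with 2008 values in one attribute and 2010 values in the other would come back in the list, while a file whose two born times agree would not.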

This seems plausible given that Vinnie Liu's timestomp, one of the anti-forensics tools built into Metasploit, provides a function to modify time stamps of one file to match those of another.

Given the available timeline evidence and the user's account of what happened, it seems likely that the kids_games executable opened a connection to an attacker's remote system. The attacker uploaded regwin.exe and executed it in an attempt to rootkit the system, then modified the time stamps for regwin.exe to match those of regedit.exe in an attempt to throw off the investigation.

The incident demonstrates the benefit of including "$FILE_NAME" time stamps in the overall timeline. Until recently, the process of gathering "$FILE_NAME" time stamps has been cumbersome. Tools like Kovar's analyzeMFT.py and now McKinnon's mft_parser are making this process easier. I still think ideally, fls should provide a flag for pulling "$FILE_NAME" time stamps into the bodyfile. I submitted a request to Carrier a few weeks ago asking for this option and didn't get a response.

Lee said he spoke to Carrier about it and that Carrier had reservations about it because fls is a tool made to work with multiple file systems and not all file systems have alternative time stamps like NTFS. My feeling is that for non-NTFS file systems, fls should warn the user if they attempt to use the flag to collect $FILE_NAME attributes. I'd love to be able to do something like the following using fls:

fls -r -m C: /dev/sda1 > std_bodyfile

to collect the "$STANDARD_INFO" time stamps, then:

fls -r -m C: -f FN /dev/sda1 > fn_bodyfile

to collect the "$FILE_NAME" time stamps. For now, if you want to collect "$FILE_NAME" time stamps, you'll need another tool.

For those unfamiliar with the timeline creation process that is taught in SANS Forensics 508: Computer Forensic Investigations and Incident Response, here's a quick review.

The traditional file system time stamp collection is done using fls as follows:

fls -r -m C: /dev/sda1 > fs_bodyfile

To acquire last modification times from Windows Registry keys, we use Carvey's regtime.pl against the Registry hives. The syntax is as follows:

regtime.pl -m <hive_name> -r <hive_file> > reg_bodyfile

Next we use Kristinn Gudjonsson's log2timeline to collect time stamp artifacts from a variety of sources. At a minimum, I like to collect time stamps from the Windows Prefetch, lnk files and UserAssist keys. The syntax is:

log2timeline -f <plugin> <artifact> -z <time_zone> > artifact_bodyfile

To collect the "$FILE_NAME" time stamps, you'll first need to collect the MFT for the system you're investigating. There are a variety of ways to do this; one is using icat from the Sleuth Kit as follows:

icat /dev/sda1 0 > MFT

Once you've got the MFT, you can use McKinnon's mft_parser, which he should be releasing more widely very soon. I'll update this post with that information once it is available. The syntax for mft_parser was explained above, but here it is:

mft_parser_cl <MFT> <db> <mft_parser_bodyfile> <mount_point>

Following that, I use grep to single out the "$FILE_NAME" time stamps as follows:

grep "C:FN/" mft_parser_bodyfile > fn_bodyfile

Why do I separate the "$FILE_NAME" time stamps in mft_parser's output? Because while mft_parser does pull all time stamps, fls provides the MFT entry number, the MFT type and the MFT type id in this format (######-###-##); see column four in figures 2, 3 and 5. I prefer having this information in the time line as it can alert the analyst to the presence of alternate data streams. As of this writing, mft_parser only provides the MFT entry number in its output.

You may have noticed that in the steps above, I've stored each time stamp artifact in its own bodyfile, but we need to combine them for the next step in the process. To do this, I use something like:

cat fs_bodyfile > master_bodyfile; cat fn_bodyfile >> master_bodyfile; cat reg_bodyfile >> master_bodyfile; cat artifact_bodyfile >> master_bodyfile; ...

Once I've gathered all this data into a master_bodyfile, I use mactime to turn the bodyfile into something human readable. Here's the syntax I typically use:
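If you would rather script the merge than chain cat commands, something like the following Python sketch would do the same job. It is only an illustration: `merge_bodyfiles` is a name I made up, and the one thing it adds over cat is that it drops blank lines and makes sure a source file that lacks a trailing newline cannot fuse its last entry with the first entry of the next file.

```python
def merge_bodyfiles(sources, destination):
    """Concatenate bodyfiles into one file; return the number of lines written.

    Works on raw bytes so unusual file name encodings pass through untouched.
    """
    written = 0
    with open(destination, "wb") as out:
        for path in sources:
            with open(path, "rb") as handle:
                for line in handle:
                    if not line.strip():
                        continue  # skip blank lines
                    if not line.endswith(b"\n"):
                        line += b"\n"  # last line of a file without a newline
                    out.write(line)
                    written += 1
    return written


if __name__ == "__main__":
    import sys
    # Usage: python merge.py master_bodyfile fs_bodyfile fn_bodyfile ...
    if len(sys.argv) >= 3:
        print(merge_bodyfiles(sys.argv[2:], sys.argv[1]), "lines written")
```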

mactime -b master_bodyfile -d -y -m -z <time_zone> > timeline.csv

The "-b" flag tells mactime that the argument that follows is the input file, "-d" tells mactime to produce csv output, "-y" says to start each line with a four-digit year value, "-m" says to use numeric values for months rather than names, and "-z" supplies the time zone. Getting csv output means I can pull this data into Excel, and having the dates in yyyy mm dd format means that I can easily sort and filter using dates. Excel's filtering capabilities can make analysis of a large data set relatively painless.

You can supply mactime with a date range value, but doing so may prevent you from seeing artifacts that have had their time stamps manipulated. Again, I prefer to gather more data than I need and then filter it during analysis.
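One way to narrow things down after collection, rather than at mactime time, is to keep every bodyfile line where at least one of the four time stamps lands in the window of interest. The Python sketch below assumes the TSK 3.x bodyfile layout with epoch-second times; `in_window` and `utc_midnight` are hypothetical helper names. A file whose "$STANDARD_INFORMATION" values were all set back to 2008 would still drop out of the window on that line, but its "$FILE_NAME" line would survive, which is another argument for having both in the master bodyfile.

```python
from datetime import datetime, timezone


def utc_midnight(year, month, day):
    """Epoch seconds for 00:00:00 UTC on the given date."""
    return int(datetime(year, month, day, tzinfo=timezone.utc).timestamp())


def in_window(line, start, end):
    """True if any of atime/mtime/ctime/crtime satisfies start <= t < end."""
    head = line.rstrip("\n").split("|", 1)
    if len(head) != 2:
        return False
    fields = head[1].rsplit("|", 9)
    if len(fields) != 10:
        return False
    # fields[6:10] are atime, mtime, ctime, crtime in this layout.
    stamps = [int(value) for value in fields[6:10] if value.isdigit()]
    return any(start <= stamp < end for stamp in stamps)


def filter_bodyfile(lines, start, end):
    """Return only the lines with at least one time stamp inside the window."""
    return [line for line in lines if in_window(line, start, end)]
```

For example, `filter_bodyfile(lines, utc_midnight(2010, 10, 29), utc_midnight(2010, 10, 31))` would keep anything touched in the two days around this incident, in UTC.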

Dave Hull is an incident responder and forensics practitioner in a Fortune 10000 corporation. Lately his focus has been on incident detection and malware analysis. He'll be TAing for Lenny Zeltser's Reverse Engineering Malware Course at SANS CDI in December.