In this blog post, we review the methods through which we can extract logs from Google Cloud.

In the first blog post in our series on cloud log extraction, we discussed how to extract logs from AWS. Next we are going to look at extracting logs from Google Cloud.

Logs in Google Cloud are managed by the Cloud Logging service and routed to their final destination through log sinks. By default, the _Required and _Default sinks are created and used to send the default logs to log buckets. All logs from Google Cloud resources are sent to the Cloud Logging API, and the configured log sinks then select specific subsets of those logs to send to one of several destinations, including log buckets, Cloud Storage buckets, or Pub/Sub topics.

In this blog post, we are going to cover three methods of extracting logs: the gcloud CLI, Logs Explorer, and Pub/Sub.

Each method takes a quite different approach, and which one is best for you will depend heavily on your use case.

Google Cloud provides the gcloud CLI for creating and managing Google Cloud resources, including logs, from the command line. To work with gcloud, you first need to install it on your system; installation instructions can be found in Google’s documentation. To work with logs, make sure the account you use has the Private Logs Viewer role (roles/logging.privateLogViewer); otherwise, you will be unable to read all details within the logs. After installing gcloud, you need to authenticate with your Google Cloud account. This can be done via a browser popup using the following command:

gcloud init

If you are on a system without a browser, you can perform the same authentication directly in the command line by adding the following flag:

gcloud init --no-launch-browser

When you run this command, you will be prompted to select a project within your Google Cloud account. Ensure you select the project where the logs you are attempting to access are stored. After these steps are complete, you are ready to work with logs via the CLI.

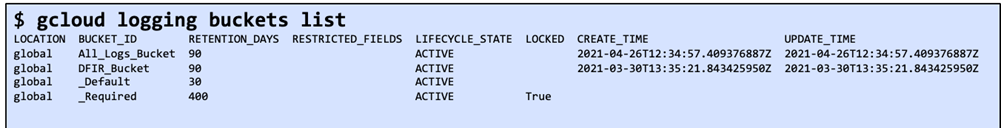
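If you would rather script this setup than answer the interactive prompts, the sketch below sets the default project and grants an analyst account the Private Logs Viewer role (`roles/logging.privateLogViewer`). It assumes gcloud is already authenticated as an account that is allowed to change the project's IAM policy; the function names, project ID, and e-mail address are hypothetical placeholders.

```bash
#!/usr/bin/env bash
# Minimal setup sketch (hypothetical helper names): point gcloud at a project
# and grant an analyst the Private Logs Viewer role so they can read all
# details within log entries.
# Assumes gcloud is installed and the caller can edit the project's IAM policy.

# Make the given project the default for subsequent gcloud commands.
set_log_project() {
  local project_id="$1"
  gcloud config set project "$project_id"
}

# Grant roles/logging.privateLogViewer to a user account on the project.
grant_private_logs_viewer() {
  local project_id="$1"
  local user_email="$2"
  gcloud projects add-iam-policy-binding "$project_id" \
    --member="user:${user_email}" \
    --role="roles/logging.privateLogViewer"
}

# Example usage (placeholder values):
#   set_log_project "my-project-id"
#   grant_private_logs_viewer "my-project-id" "analyst@example.com"
```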

To work with logs, we will specifically use the gcloud logging commands. The first step in extracting logs is understanding which log buckets you have. To list them, run the following command:

gcloud logging buckets list
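To keep the bucket listing focused on what matters for extraction, a `--format` projection can trim the output. This is a minimal sketch that assumes the `name` and `retentionDays` fields of the log bucket resource; the `list_log_buckets` function and the project ID are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): list the log buckets in a project, showing
# each bucket's full resource name (which includes its location) and its
# retention period. Assumes the bucket fields "name" and "retentionDays".
list_log_buckets() {
  local project_id="$1"
  gcloud logging buckets list \
    --project="$project_id" \
    --format="table(name, retentionDays)"
}

# Example usage (placeholder project):
#   list_log_buckets "my-project-id"
```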

Next, to retrieve log entries from these buckets, we will use the gcloud logging read command. This command accepts a filter written in Google’s Logging query language, whose syntax is detailed in Google’s documentation. Below are a few examples of useful filters, but any field in the log entry can be used to filter the logs.

Filter by time range:

gcloud logging read 'timestamp<="2022-02-28T00:00:00Z" AND timestamp>="2022-01-01T00:00:00Z"'

Filter by resource type (Google Compute Engine Instance):

gcloud logging read "resource.type=gce_instance"

Filter for resource creation, deletion, and modification:

gcloud logging read 'log_id("cloudaudit.googleapis.com/activity") AND protoPayload.methodName:("create" OR "delete" OR "update")'
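Because these filters are plain strings, it can help to build them from reusable pieces so that one time window can be combined with different conditions. The sketch below is a pair of hypothetical helper functions, not part of gcloud; it wraps each fragment in parentheses so that fragments containing OR keep their meaning when they are joined with AND.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): compose Logging query language filters
# from reusable fragments.

# Filter matching entries with start <= timestamp <= end (RFC 3339, UTC).
time_range_filter() {
  local start="$1" end="$2"
  printf 'timestamp>="%s" AND timestamp<="%s"' "$start" "$end"
}

# Join any number of filter fragments with AND, wrapping each fragment in
# parentheses so that an OR inside a fragment stays grouped.
and_filters() {
  local result="" fragment
  for fragment in "$@"; do
    if [[ -z "$result" ]]; then
      result="(${fragment})"
    else
      result="${result} AND (${fragment})"
    fi
  done
  printf '%s' "$result"
}

# Example usage: Admin Activity audit entries for create/delete/update
# calls during January 2022.
#   filter=$(and_filters \
#     "$(time_range_filter 2022-01-01T00:00:00Z 2022-01-31T23:59:59Z)" \
#     'log_id("cloudaudit.googleapis.com/activity")' \
#     'protoPayload.methodName:("create" OR "delete" OR "update")')
#   gcloud logging read "$filter" --format="json"
```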

Additionally, there are numerous flags available to modify your query. A complete list, including flags for specifying the bucket to pull from, can be found in the CLI documentation for the gcloud logging read command. The key one for our purposes is --format. By default, the read command produces human-readable output. If you would like to view the logs outside the CLI, such as in a spreadsheet or log aggregator, use the --format flag followed by the format you need. SOF-ELK, for example, can ingest these logs as long as they are output in JSON format.
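As an illustration of those flags, the sketch below reads from a specific log view inside a bucket, in ascending time order, and emits JSON. The bucket, location, and view values are placeholders and the function name is hypothetical; check `gcloud logging read --help` for the flags available in your gcloud version.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): read entries from one log view in a bucket.
# Bucket, location, and view IDs are placeholders supplied by the caller.
read_from_bucket() {
  local filter="$1" bucket="$2" location="$3" view="$4"
  # --bucket/--location/--view select a log view inside a bucket;
  # --order=asc returns the oldest matching entries first;
  # --limit caps how many entries are returned;
  # --format=json emits machine-readable output (e.g., for SOF-ELK).
  gcloud logging read "$filter" \
    --bucket="$bucket" \
    --location="$location" \
    --view="$view" \
    --order=asc \
    --limit=1000 \
    --format=json
}

# Example usage (placeholder IDs):
#   read_from_bucket 'severity>=ERROR' my-bucket global my-view > errors.json
```

Per the gcloud reference, when the filter has no timestamp and the default descending order is used, only roughly the last day of entries is returned (the `--freshness` flag, default `1d`), so include a timestamp range when collecting older evidence.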

Putting together these options, the following command will allow you to pull all logs from a project for a specific time range, output them to JSON format, and then redirect the output (using >) to a file called logs.json.

gcloud logging read 'timestamp<="2022-02-28T00:00:00Z" AND timestamp>="2022-01-01T00:00:00Z"' --format="json" > logs.json

This is one of the simplest ways to gather logs efficiently and in the format most useful for your use case.
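For long investigations, a single read spanning several months can be slow to rerun if it fails partway. One option, sketched below under the assumption of GNU `date` and a hypothetical `export_logs_by_day` helper, is to export one JSON file per UTC day using the same timestamp filter syntax. Each file can then be ingested into SOF-ELK or another tool individually.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): export a time range as one JSON file per UTC
# day, so each gcloud logging read call is smaller and a failed day can be
# re-run on its own. Assumes GNU date (on macOS, coreutils' gdate).
export_logs_by_day() {
  local start_day="$1"      # first day to export, YYYY-MM-DD
  local end_day="$2"        # day AFTER the last day to export, YYYY-MM-DD
  local out_dir="${3:-.}"   # output directory (default: current directory)
  local day="$start_day" next
  mkdir -p "$out_dir"
  # YYYY-MM-DD strings compare correctly as strings.
  while [[ "$day" < "$end_day" ]]; do
    next=$(date -u -d "${day} + 1 day" +%F) || return 1
    # Half-open window [day, next) so adjacent files never overlap.
    gcloud logging read \
      "timestamp>=\"${day}T00:00:00Z\" AND timestamp<\"${next}T00:00:00Z\"" \
      --format="json" > "${out_dir}/logs-${day}.json"
    day="$next"
  done
}

# Example usage: January and February 2022, one file per day.
#   export_logs_by_day 2022-01-01 2022-03-01 ./gcp-logs
```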

Many users feel more comfortable navigating a UI than using the command line. For them, Logs Explorer is an interface in the Google Cloud console that can be used to query and view logs. From this interface, we can also download the logs in either JSON or CSV, although there is an export limit of 10,000 events. If downloaded in JSON, the logs can be imported into SOF-ELK, where they will be processed by its Google Cloud Logstash parser. To view logs via Logs Explorer, you must have an IAM role assigned with the relevant permissions; the Private Logs Viewer role mentioned in the gcloud section provides the permissions needed for our purposes. Assuming the account you are using has the right permissions, we can search and download the logs using the following steps:

The following screenshots show the console view and download options window:

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt8fb5ad310254a4e2/640f81f826b2ac6bf80ecc3b/Google_Picture2.png
https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt3fe976dea3422a9c/640f81f7ff1d0646c817135a/Google_Picture3.png

The downloaded logs can then be imported into the application or tool of your choice for analysis.

In the Pub/Sub model, a topic receives the logs that need to be exported outside of Google Cloud, and a subscription is used to forward the logs to their final destination. Either Pub/Sub pushes the logs to a third-party service, or that service pulls them from Pub/Sub.

The prerequisites for sending logs from Google Cloud Log Storage to the Pub/Sub service, and finally, to the destination are:

We will briefly review each of these steps below. More detailed information can be found in Google’s Cloud Logging documentation.

Log sink configuration is done at the project level via the Log Router page of the Logging console: https://console.cloud.google.com/logs/router. Before configuring your sink, make sure the correct project is selected at the top left of the page. Then select “Create Sink” from the top of the page. To configure the sink, provide the following information:

The screenshot below shows an example of the settings used for a sink created for gathering network flow logs and routing them to a Pub/Sub topic.

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blted5bee2d8043f22a/640f81f7c8179210b7087794/Google_Picture4.png

The topic can either be created via the Pub/Sub console or during the sink creation process described above. The screenshot below shows the topic creation options.

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt841cb800bb7c87bc/640f81f7f5e71610843f1fbf/Google_Picture5.png

Selecting “Add a default subscription” creates a subscription associated with the topic at the same time. If a topic is created without a subscription, you can follow the steps in the next section to create one. Retention can be set for up to 31 days, but additional costs will be incurred.
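The same topic and sink can also be created from the command line. The sketch below assumes the network flow log example from the screenshot, an optional (and billed) seven-day topic retention, and placeholder project, topic, and sink names; `create_flow_log_export` is a hypothetical helper. The last step grants the sink's writer identity permission to publish to the topic, which a sink created this way typically needs before it can deliver logs.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): CLI equivalent of creating a Pub/Sub topic
# and a log sink that routes VPC flow logs to it.
create_flow_log_export() {
  local project_id="$1" topic="$2" sink="$3"

  # 1. Create the topic; message retention is optional and incurs cost.
  gcloud pubsub topics create "$topic" \
    --project="$project_id" \
    --message-retention-duration=7d

  # 2. Create a sink whose destination is the topic. The filter assumes the
  #    standard VPC flow log name.
  gcloud logging sinks create "$sink" \
    "pubsub.googleapis.com/projects/${project_id}/topics/${topic}" \
    --project="$project_id" \
    --log-filter='log_id("compute.googleapis.com/vpc_flows")'

  # 3. Look up the sink's writer identity (a service account) and allow it
  #    to publish to the topic.
  local writer
  writer=$(gcloud logging sinks describe "$sink" \
    --project="$project_id" --format='value(writerIdentity)')
  gcloud pubsub topics add-iam-policy-binding "$topic" \
    --project="$project_id" \
    --member="$writer" \
    --role=roles/pubsub.publisher
}

# Example usage (placeholder names):
#   create_flow_log_export my-project-id flow-logs-topic flow-logs-sink
```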

From the Pub/Sub console topic page, select the topic you want to configure the subscription for. Under the Subscriptions section for the topic, either select the existing subscription and select “Edit” from the subscription page or choose “Create Subscription”.

https://images.contentstack.io/v3/assets/blt36c2e63521272fdc/blt8b9955b97184763c/640f81f78bbba310615de2d3/Google_Picture6.png

When creating a subscription, you must choose between push and pull delivery. With push, Google Cloud sends the messages to the recipient. With pull, the recipient queries Google Cloud to retrieve the logs. You also have options to retain messages for a period of time after they are acknowledged, expire the subscription if it is inactive, and set a deadline for messages to be acknowledged.
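A pull subscription with the options described above can be sketched with gcloud as follows. The values are placeholder assumptions: a 60-second acknowledgement deadline, seven days of retention for acknowledged messages, and expiration after 31 days of inactivity; both function names are hypothetical. Each message delivered by a log sink carries a log entry serialized as JSON.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): create a pull subscription and pull a batch.

# Create a pull subscription on an existing topic.
create_pull_subscription() {
  local project_id="$1" topic="$2" subscription="$3"
  # --ack-deadline: seconds the recipient has to acknowledge a message;
  # --message-retention-duration + --retain-acked-messages: keep messages
  #   for 7 days even after they are acknowledged;
  # --expiration-period: delete the subscription after 31 days of inactivity.
  gcloud pubsub subscriptions create "$subscription" \
    --project="$project_id" \
    --topic="$topic" \
    --ack-deadline=60 \
    --message-retention-duration=7d \
    --retain-acked-messages \
    --expiration-period=31d
}

# Pull up to 10 messages as JSON and acknowledge them so they are not
# redelivered.
pull_log_messages() {
  local project_id="$1" subscription="$2"
  gcloud pubsub subscriptions pull "$subscription" \
    --project="$project_id" \
    --auto-ack \
    --limit=10 \
    --format=json
}

# Example usage (placeholder names):
#   create_pull_subscription my-project-id flow-logs-topic flow-logs-sub
#   pull_log_messages my-project-id flow-logs-sub > batch.json
```

For push delivery, the subscription would instead be created with `--push-endpoint` set to the HTTPS endpoint of the receiving service.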

To make things even easier, if you leverage Splunk or Logstash, there are pre-built integrations and detailed guidance to help you configure Pub/Sub for getting logs to those destinations:

In this blog post, we reviewed the methods through which we can extract logs from Google Cloud. The gcloud CLI allows us to query and download log entries stored in log buckets within Google Cloud and supports various parameters for filtering and formatting. Logs Explorer provides the same data through a graphical user interface (GUI) within the Google Cloud console, with a 10,000-event export limit. Lastly, Pub/Sub lets us push or pull logs to an external platform, such as a SIEM. In the next blog post in this series, we’ll look at how to extract Google Workspace logs from the cloud.


Megan Roddie-Fonseca is a Senior Security Engineer at Datadog, SANS DFIR faculty, and co-author of FOR509. She holds two master’s degrees, serves as CFO of Mental Health Hackers, and is a strong advocate for hands-on cloud forensics training and mental wellness.