
When orchestrating any security awareness program, it is only natural to try to gauge an organization’s overall security posture against a jury of one’s peers, so to speak, measuring success against comparable organizations and programs in the absence of industry standards. Of late, this has created a bit of a flurry over benchmarking methodologies within the phishing arena.

This initial blog is part of a mini-series on benchmarks and on what to consider when using external or internal benchmarks to assess your phishing program. We will address which variables to consider when planning a benchmark, and what to look out for when comparing your results with those of similar industries. Future blogs will cover global and internal benchmarking, as well as concepts such as tiering.

So, what is a benchmark anyway? And how many variables need to be similar for the benchmark to be valid, or even make sense? According to Bernard Marr & Co, benchmarks are reference points that you use to compare your performance against the performance of others, typically across internal business entities or external competitors. Business processes, procedures, and performance analytics are compared against the best practices and statistics of similar organizations.

As we know, many companies across all industries use phishing simulations as a key cyber training tactic, teaching people to better identify and stop phishing attacks, in which adversaries use deception to gather sensitive and personal information. Phishing simulations generally measure the undesired action rate (click rate) and the report rate (how many recipients report the simulated phish). While there are several flavors of benchmarks, phishing falls under performance benchmarking, which compares performance metrics. A phishing benchmark could compare your results not only against similar companies or competitors in the same industry, but also against a global population regardless of industry, or even internally across your own company, for example between departments, regions, or business units.
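Both metrics are simple proportions, and a small sketch can make them concrete. It assumes the click rate is users who clicked divided by recipients and the report rate is unique reporters divided by recipients; the `CampaignResult` class, the `compare_to_benchmark` helper, and all numbers are hypothetical and for illustration only. Programs and vendors may define these metrics differently (for example, counting total reports rather than unique reporters), and that definition is itself something to reconcile before comparing against any benchmark.

```python
from dataclasses import dataclass


@dataclass
class CampaignResult:
    """Counts from one phishing simulation (hypothetical structure)."""
    recipients: int  # users who received the simulated phish
    clicked: int     # users who took the undesired action (e.g. clicked the link)
    reported: int    # unique users who reported the message (assumed definition)

    def __post_init__(self) -> None:
        if self.recipients <= 0:
            raise ValueError("recipients must be positive")

    def click_rate(self) -> float:
        """Undesired action rate: share of recipients who clicked."""
        return self.clicked / self.recipients

    def report_rate(self) -> float:
        """Share of recipients who reported the simulation."""
        return self.reported / self.recipients


def compare_to_benchmark(result: CampaignResult, bench_click: float,
                         bench_report: float) -> dict:
    """Differences from benchmark rates, in percentage points.

    Positive values mean our rate is higher than the benchmark's.
    """
    return {
        "click_rate_delta_pp": round((result.click_rate() - bench_click) * 100, 1),
        "report_rate_delta_pp": round((result.report_rate() - bench_report) * 100, 1),
    }


if __name__ == "__main__":
    # Hypothetical campaign and benchmark figures, for illustration only.
    ours = CampaignResult(recipients=2000, clicked=150, reported=600)
    print(f"click rate:  {ours.click_rate():.1%}")
    print(f"report rate: {ours.report_rate():.1%}")
    print(compare_to_benchmark(ours, bench_click=0.10, bench_report=0.25))
```

With these made-up figures the click rate works out to 7.5% and the report rate to 30%, that is, 2.5 points below and 5.0 points above the hypothetical benchmark. Those deltas look favorable, but they only mean something if the compared simulations and workforces are genuinely similar.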

Most Security Awareness Officers are interested in how their phishing program metrics compare to those of similar businesses, but it can be tempting to place too much relevance and emphasis on performance benchmarking. While identifying areas for improvement based on comparable industries is key, it is important to track multiple variables when phishing simulation results are the primary driver of the comparison.

Some variables to consider when comparing phishing benchmark results include:

- Sample size, and how representative it is of the workforce

- History and length of the phishing program

- The difficulty of the simulation and how many indicators are present

- The type of simulation (link, attachment, or credential request)

- Topic, and how relevant it might be to all participants

- When it was sent (day and time, based on location)

- Ease of reporting and available reporting options

- Availability and diversity of training and awareness materials

- “Time in Band/Onboard” (for example, a heavy influx of new employees might affect results)

- Other relevant demographics of the workforce

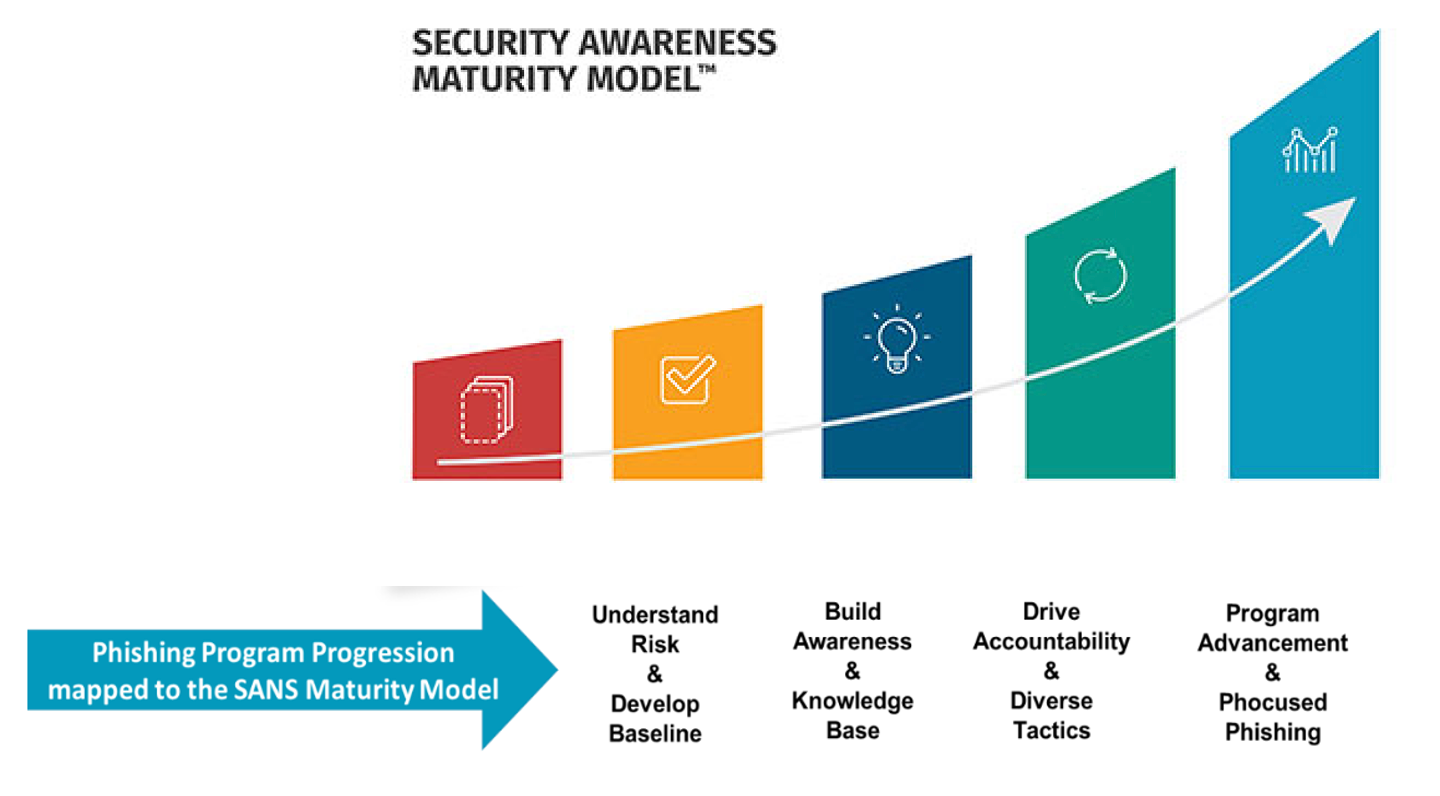

- And the overall maturity of the Security Awareness Program or effort

For example, if your phishing program sits at the higher end of the SANS Security Awareness Maturity Model, where you have already promoted cyber awareness tactics and achieved long-term sustainment and culture change, your phishing assessment results, even with a similar phishing simulation, might be very different from those of a similar organization whose program is still in the Compliance Focused stage. A workforce in the Compliance Focused stage has had less time to benefit from teachable moments and safe cyber awareness practices.

Another variable to consider is the difficulty of the simulations. If the simulations used across each business or organization are identical, or very comparable, the comparison will be more valid. If the simulations you’re using for benchmarking vary in difficulty, the benchmark metric will most likely be skewed. This is why more mature phishing programs often use a concept called tiers, which we will cover in a future post.
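To see how a difference in difficulty alone can skew a comparison, here is a minimal Python sketch with made-up numbers. It assumes each difficulty level has a fixed click rate (`CLICK_RATE_BY_DIFFICULTY`, the difficulty labels, and the `aggregate_click_rate` helper are all hypothetical) and that two organizations behave identically at every level, differing only in how many recipients receive simulations of each difficulty.

```python
from __future__ import annotations

# Hypothetical per-difficulty click rates. Both organizations below are assumed
# to behave identically at each difficulty level; only their mix differs.
CLICK_RATE_BY_DIFFICULTY = {"easy": 0.03, "medium": 0.10, "hard": 0.25}


def aggregate_click_rate(sent_by_difficulty: dict[str, int]) -> float:
    """Overall click rate implied by a mix of simulations of varying difficulty."""
    total_sent = sum(sent_by_difficulty.values())
    if total_sent <= 0:
        raise ValueError("at least one simulation must be sent")
    # Expected clicks at each level, summed across the whole mix.
    expected_clicks = sum(
        count * CLICK_RATE_BY_DIFFICULTY[level]
        for level, count in sent_by_difficulty.items()
    )
    return expected_clicks / total_sent


if __name__ == "__main__":
    # Org A mostly sends easy simulations; org B mostly sends hard ones.
    org_a = {"easy": 800, "medium": 200, "hard": 0}
    org_b = {"easy": 0, "medium": 200, "hard": 800}
    print(f"Org A overall click rate: {aggregate_click_rate(org_a):.1%}")
    print(f"Org B overall click rate: {aggregate_click_rate(org_b):.1%}")
```

Org A comes out at 4.4% and org B at 22%. That fivefold gap says nothing about which workforce is better at spotting phish; under these assumptions it reflects only the difficulty mix.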

So, while benchmarking has its place and can help build leadership support, care must be taken to compare apples to apples wherever possible. Benchmarking is only a worthwhile assessment tool if you have the capacity to account for all of these factors and variables. Otherwise, placing too much emphasis on benchmark statistics, rather than on more impactful metrics such as your own report rate and click rate, could drive the wrong behavior.

Stay tuned for a future blog related to benchmarking within your own organization.